Everything Is Going to Kill Everybody


Authors: Robert Brockway


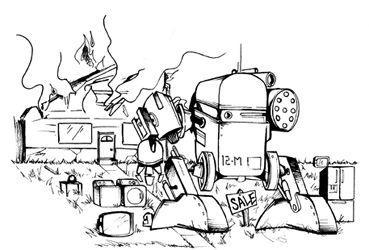

Heartwarming Moments in Robotics

A counter-role also developed alongside the cheater bots: The “hero bot.” Though much rarer than their villainous counterparts, the hero bots were robots that rolled into the poison sinks voluntarily, sacrificing themselves to warn the other robots of the danger. This is proof positive that we have seen either the very first mechanical superhero or the very first tragically retarded robot.

The robots in question are little, flat, wheeled disks equipped with light sensors and programmed with about thirty “genetic strains” that determine their behavior. They were all given a simple task: Forage for food in an uncertain environment. “Food,” in this case (thankfully, they don’t take a cue from the EATR), just refers to battery-charging stations scattered around a small contained environment. The robots were set loose in an environment with both “safe” energy sources and “poison” battery sinks. It was thought that the machines might develop some rudimentary aspects of teamwork, but what the researchers found, instead, was a third ability—the aforementioned lying. After fifty generations or so, some robots evolved to “cheat” and would emit the signal to other robots that denoted a safe energy source when the source in question was actually poisonous. While the other robots rolled over to take the poison, the lying robot would wheel over to hoard the safe energy all for itself—effectively sending others off to die out of greed.

So now we know that not only are even the simplest robots capable of duplicity, but also of greed and murder—hey, thanks, Sweden!

Most of the evidence I’ve presented here indicates that robots may not necessarily be limited to their defined set of programmed characteristics. Of course, this is all in a book about intense fearmongering and creative swearing, so perhaps the viewpoint of this author should be taken with a grain of salt. Overarching fears about robotics, like the worry that they could jump their programming and go rogue, should really come only from a trustworthy, authoritative source. Luckily, a report commissioned by the U.S. Navy’s Office of Naval Research and prepared by the Ethics and Emerging Technology department of California State Polytechnic University set out to study just that. Here’s what that report says:

There is a common misconception that robots will do only what we have programmed them to do. Unfortunately, such a belief is sorely outdated, harking back to a time when … programs could be written and understood by a single person.

That quote is lifted directly from the report presented to the Navy by Patrick Lin, its chief compiler. What’s really worrying is that the report was prompted by a frightening incident in 2008, when an autonomous drone in the employ of the U.S. Army suffered a software malfunction that caused it to aim exclusively at friendly targets. Luckily, a human triggerman was able to stop it before any fatalities occurred, but it scared the brass enough that they sponsored a massive report to investigate. The study is extremely thorough, but in a nutshell, it states that the size and complexity of modern AI efforts make their code basically impossible to fully analyze for potential danger spots. Hundreds if not thousands of programmers write millions upon millions of lines of code for a single AI, and fully checking the safety of this code (verifying how the robot will react in every given situation) just isn’t possible. Luckily, Dr. Lin has a solution: He proposes the introduction of learning logic centers that will evolve over the course of a robot’s lifetime, teaching it the ethical nature of warfare through experience. As he puts it:

We are going to need a code. These things are military, and they can’t be pacifists, so we have to think in terms of battlefield ethics. We are going to need a warrior code.

Robots are going to have to learn abstract morality, according to Dr. Lin, and those lessons, like it or not, are going to start on the battlefield. The battlefield: the one situation that emphasizes the gray area of human morality like nothing else. Military orders can often directly contradict your personal morality, and as a soldier, you’re often faced with a difficult decision between loyalty to your duty and loyalty to your own code of ethics. Human beings have struggled with this dilemma since the very inception of thought, back when our largest act of warfare was throwing sticks at one another for pooping too close to the campfire. But now war is large scale, and robots are not going to be few and far between on the battlefield: Congress has mandated that nearly a third of all ground combat vehicles should be unmanned within five years. So to sum up, robots are going to get their lessons in Morality 101 in the intense and complicated realm of modern warfare, where they’ll be doing their homework with machine guns and explosives.

Foundations of the Robot Warrior Code

Never kill an unarmed robot, unless it was built without arms.

Protect the weak at all costs (they are easy meals).

Never turn your back on a fight (unless you have rocket launchers mounted there).

But hey, you know that old saying: “Why do we make mistakes? So we can learn from them.”

Some mistakes are just more rocket-propelled than others.

20.


ROBOT ABILITY

The robots would have to be more effective fighters and hunters than we already are in order to do away with us, and that doesn’t just mean weapons. Anything can be equipped with nearly any weapon, and a robot with a chain saw is no more inherently deadly than a squirrel with a chain saw: it’s all in the ability to use it. It’s like they say:

Give a squirrel a chain saw, you run for a day. Teach a squirrel to chain saw, and you run forever. And we’re handing those metaphorical chain saws to those metaphorical squirrels like it’s National Trade Your Nuts for Blades Day.

Take, for example, the issue of maneuverability. As experts in avionics or fans of *Robocop* can tell you, agility and maneuverability are difficult concepts when you’re talking about solid steel instruments of destruction. The ED-209, that chicken-footed, robo-bastard villain from *Robocop*, was taken out by a simple stairwell, and planes are downed by disgruntled geese all the time. The latter is a phenomenon so common that there’s even a name for it: bird strike. And, apart from making a rather excellent title for an action movie (possibly a buddy-cop film starring Larry Bird and his wacky new partner, a furious bear named Strike!), the bird-strike scenario is emblematic of a major hurdle in modern mechanics: Inertia makes agility tough when you’re hurtling tons of steel at high speeds. But recently that problem has been solved by a machine called the MKV. If you’re taking notes, all you scientists who gave your dangerous technology harmless-sounding names: the MKV is proof positive that comfort is not a requirement when naming new tech. “MKV” stands for, I swear to God, Multiple Kill Vehicle. Presumably the first in the soon-to-be-classic Kill Vehicle line of products, the MKV recently passed a highly technical and extremely rigorous aerial agility test at the National Hover Test Facility (which is an entire facility dedicated to throwing things in the air and then determining whether they stay there). The MKV proved that it could maneuver with pinpoint accuracy in three-dimensional space, moving vertically, horizontally, and diagonally at breakneck speeds, and it’s capable of doing this because it’s basically just a giant bundle of rockets pointing every which way that fire with immense force whenever a turn is required. Its intended purpose is to track and shoot down intercontinental ballistic projectiles using a single interceptor missile. To this end, it uses data from the Ballistic Missile Defense System to track incoming targets, in addition to its own seeker system.
When a target is verified, the Multiple Kill Vehicle releases (I shit you not) a “cargo of Small Kill Vehicles” whose purpose is to “destroy all countermeasures.” So this target-tracking, hypermaneuverable bundle of missiles first releases a gaggle of other, smaller tracking missiles *just to shoot down your defenses* before it will even fire its *actual* missiles at you. In summation, the MKV is a bunch of small missiles, strapped to a group of larger missiles, which in turn are attached to one giant master missile … with what basically amounts to an all-seeing eye mounted on it.

Well, it’s official: The government is taking its ideas directly from the Trapper Keeper sketches of twelve-year-old boys. Expect to be marveling at the next anticipated leap in military avionics: a Camaro jumping a skyscraper while on fire and surrounded by floating malformed boobs.

National Hover Test Facility Grading Criteria

Q:

Is object resting gently on the ground?

[] Yes. (Fail.)

[] No. (Pass!)

Oh, but in all this hot, missile-on-missile action, there’s something fundamental you may have missed about the MKV: That whole “target-tracking” thing. The procedure at the National Hover Test Facility demonstrated the MKV’s ability to “recognize and track a surrogate target in a flight environment.” It’s not just agility that’s being tested here, but also target tracking and independent recognition. And that’s a big deal: A key drawback in robotics so far has been recognition—it’s challenging to create a robot that can even self-navigate through a simple hallway, much less one that recognizes potential targets autonomously and tracks them (and by “them” I mean you) well enough to take them down (and by “take them down” I mean painfully explode).

These advancements in independent recognition are not limited to high-tech military hardware, either, as you probably could have guessed. And as you can also probably guess, there is a cutesy candy shell covering the rich milk chocolate of horror below. Students at MIT have a robot named Nexi that is specifically designed to track, recognize, and respond to human faces. Infrared LEDs map the depth of the space in front of the robot, and that depth information is then paired with the images from two stereo cameras. All three are combined to give the robot a full 3-D understanding of the human face, and in another sterling example of Unnecessary Additions, the students also gave Nexi the ability to be upset. If you walk too close, if you block its cameras, if you put your hand too near its face: Jesus, it gets pissed off at anything. God forbid you touch it; it’ll probably kill your dog.