Pro Oracle Database 11g Administration (18 page)

variable is defined in my environment:

$ echo $SQLPATH

/home/oracle/scripts

Create the login.sql script in the /home/oracle/scripts directory. It contains the following lines:

-- set SQL prompt

SET SQLPROMPT '&_USER.@&_CONNECT_IDENTIFIER.> '

Now, when I log in to SQL*Plus, my prompt is automatically set:

$ sqlplus / as sysdba

SYS@O11R2>

top.sql

The following script lists the top CPU-consuming SQL processes. It’s useful for identifying problem SQL

statements. Place this script in a directory such as HOME/scripts:

select * from(

select

sql_text

,buffer_gets

,disk_reads

,sorts

,cpu_time/1000000 cpu_sec

,executions

,rows_processed

66

CHAPTER 3 ■ CONFIGURING AN EFFICIENT ENVIRONMENT

from v$sqlstats

order by cpu_time DESC)

where rownum < 11;

Here’s how you execute this script:

SQL> @top

Here is a small snippet of the output, showing a SQL statement that is consuming a large amount of database resources:

SQL_TEXT

----------------------------------------------------------------------------

insert into reg_queue (registration_urn,registration_data,client_ip_addr, relay_

BUFFER_GETS DISK_READS SORTS CPU_SEC EXECUTIONS ROWS_PROCESSED

----------- ---------- ---------- ---------- ---------- --------------

6079221 2482 28309 986.704467 697494 997467

lock.sql

This script displays sessions that have locks on tables that are preventing other sessions from completing work. The script shows details about the blocking and waiting sessions. You should place this script in a directory such as HOME/scripts. Here are the contents of lock.sql: select s1.username blkg_user, s1.machine blkg_ws, s1.sid blkg_sid,

s2.username wait_user, s2.machine wait_ws, s2.sid wait_sid,

lo.object_id blkd_obj_id, do.owner, do.object_name

from v$lock l1, v$session s1, v$lock l2, v$session s2,

v$locked_object lo, dba_objects do

where s1.sid = l1.sid

and s2.sid = l2.sid

and l1.id1 = l2.id1

and s1.sid = lo.session_id

and lo.object_id = do.object_id

and l1.block = 1

and l2.request > 0;

The lock.sql script is useful for determining what session has a lock on an object and also for showing the blocked session. You can run this script from SQL*Plus as follows: SQL> @lock.sql

Here is a partial listing of the output (truncated so that it fits on one page): BLKG_USER BLKG_WS BLKG_SID WAIT_USER WAIT_WS

---------- -------------------- ---------- ---------- --------------------

MV ora03.regis.local 88 INV_APP ora03.regis.local users.sql

This script displays information about when users were created and whether their account is locked. The script is useful when you’re troubleshooting connectivity issues. Place it in a directory such as HOME/scripts. Here is a typical users.sql script to display user account information: 67

CHAPTER 3 ■ CONFIGURING AN EFFICIENT ENVIRONMENT

SELECT

username

,account_status

,lock_date

,created

FROM dba_users

ORDER BY username;

You can execute this script from SQL*Plus as follows:

SQL> @users.sql

Here is some sample output:

USERNAME ACCOUNT_STATUS LOCK_DATE CREATED

-------------------- -------------------------------- --------- ---------

CLUSTERUSER OPEN 09-MAY-10

DIP EXPIRED & LOCKED 09-MAY-10 09-MAY-10

DP122764 LOCKED 07-JAN-10 09-JUL-10

Organizing Scripts

When you have a set of scripts and utilities, you should organize them such that they’re consistently implemented for each database server. Follow these steps to implement the preceding DBA utilities for each database server in your environment:

1. Create operating system directories in which to store the scripts.

2. Copy your scripts and utilities to the directories created in step 1.

3. Configure your startup file to initialize the environment.

The previous steps are detailed in the following subsections.

Step 1: Create Directories

Create a standard set of directories on each database server to store your custom scripts. A directory beneath the HOME directory of the oracle user is usually a good location. I usually create the following three directories:

• HOME/bin. Standard location for shell scripts that are run in an automated fashion (such as from cron).

• HOME/bin/log. Standard location for log files generated from the scheduled shell scripts.

• HOME/scripts. Standard location to store SQL scripts.

You can use the mkdir command to create the previous directories as follows: $ mkdir -p $HOME/bin/log

$ mkdir $HOME/scripts

68

CHAPTER 3 ■ CONFIGURING AN EFFICIENT ENVIRONMENT

It doesn’t matter where you place the scripts or what you name the directories, as long as you have a standard location so that when you navigate from server to server, you always find the same files in the same locations. In other words, it doesn’t matter what the standard is, as long as you have a standard.

Step 2: Copy Files to Directories

Place your utilities and scripts in the appropriate directories. Copy the following files to the HOME/bin directory:

dba_setup

dba_fcns

tbsp_chk.bsh

conn.bsh

filesp.bsh

Place the following SQL scripts in the HOME/scripts directory:

login.sql

top.sql

lock.sql

users.sql

Step 3: Configure the Startup File

Place the following code in the .bashrc file or the equivalent startup file for the shell you use (.profile for the Korn shell). Here’s an example of how to configure the .bashrc file:

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

#

. /var/opt/oracle/oraset

#

. $HOME/bin/dba_setup

. $HOME/bin/dba_fcns

Now, each time you log in to an environment, you have full access to all the operating system variables, aliases, and functions established in the dba_setup and dba_fcns files. If you don’t want to log off and back on, then run the file manually using the . (dot) command. This command executes the lines contained within a file. The following example runs the .bashrc file: $ . $HOME/.bashrc

The . instructs the shell to

source

the script. Sourcing tells the shell process you’re currently logged on to, to inherit any variables set with an export command in an executed script. If you don’t use the .

notation, then the variables set within the script are visible only in the context of the subshell that is spawned when the script is executed.

69

CHAPTER 3 ■ CONFIGURING AN EFFICIENT ENVIRONMENT

■

Note

In the Bash shell, the source command is equivalent to the . (dot ) command.

Summary

This chapter describes how to configure an efficient environment. This is especially important for DBAs who manage multiple databases on multiple servers. Regular maintenance and troubleshooting activities require you to log on directly to the database server. To promote efficiency and sanity, you should develop a standard set of operating system tools and SQL scripts that help you maintain multiple environments. You can use standard features of the OS to assist with navigating, repeating commands, showing system bottlenecks, quickly finding critical files, and so on.

The techniques for configuring a standard OS are especially useful when you’re working on multiple servers with multiple databases. When you have multiple terminal sessions running simultaneously, it’s easy to lose your bearings and forget which session is associated with a particular server and database.

With just a small amount of setup, you can ensure that your OS prompt always shows information such as the host and database. Likewise, you can always set your SQL prompt to show the username and database connection. These techniques help ensure that you don’t accidentally run a command or script in the wrong environment.

After you have installed the Oracle binaries, created a database, configured your environment, you are ready to perform additional database administration tasks such as creating tablespaces for the applications. The topic of tablespace creation and maintenance is described in the next chapter.

70

CHAPTER 4 ■ TABLESPACES AND DATAFILES

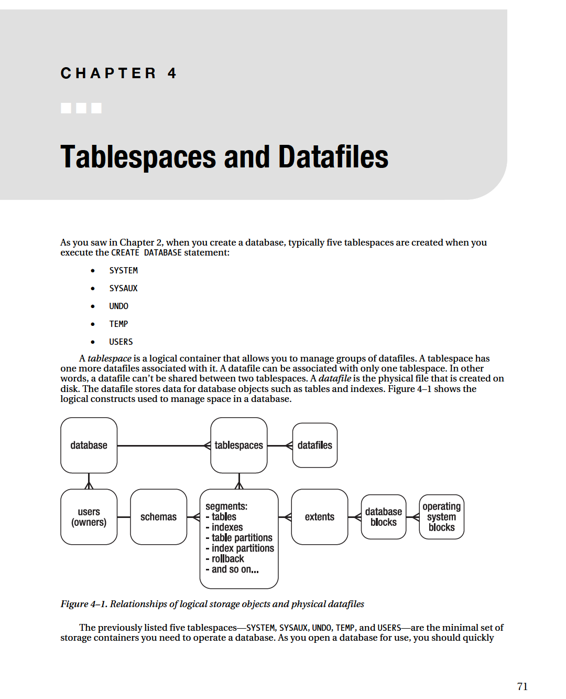

create additional tablespaces to store application data. This chapter discusses the purpose of the standard set of tablespaces, the need for additional tablespaces, and how to manage these critical database storage containers.

Understanding the First Five

The SYSTEM tablespace provides storage for the Oracle data-dictionary objects. This tablespace is where all objects owned by the SYS user are stored. The SYS user should be the only user that owns objects created in the SYSTEM tablespace.

Starting with Oracle Database 10

g

, the SYSAUX (system auxiliary) tablespace is created when you create the database. This is an auxiliary tablespace used as a data repository for Oracle database tools such as Enterprise Manager, Statspack, LogMiner, Logical Standby, and so on.

The UNDO tablespace stores the information required to roll back uncommitted data. This tablespace contains information about data as it existed before an insert, update, or delete statement (this is sometimes referred to as a

before image

copy of the data). This information is used to roll back uncommitted data in the event of a crash recovery and to provide read consistency for SQL statements.

Some Oracle SQL statements require a sort area, either in memory or on disk. For example, the results of a query may need to be sorted before being returned to the user. Oracle first uses memory to sort the query results; and when there isn’t sufficient room in memory, the TEMP tablespace is used as a sorting area on disk. When you create a database, typically you create the TEMP tablespace and specify it to be the default temporary tablespace for any users you create.

The USERS tablespace is often used as a default permanent tablespace for table and index data for users. As shown in Chapter 2, you can create a default permanent tablespace for users when you create the database.

Understanding the Need for More

Although you could put every database user’s data in the USERS tablespace, this usually isn’t scalable or maintainable for any type of serious database application. Instead, it’s more efficient to create additional tablespaces for application users. You typically create at least two tablespaces specific for each application using the database: one for the application table data and one for the application index data.

For example, for the APP user, you can create tablespaces named APP_DATA and APP_INDEX for table and index data, respectively.

DBAs used to separate table and index data for performance reasons. The thinking was that separating table data from index data would reduce I/O contention. This is because each tablespace and associated datafiles could be placed on different disks with separate controllers.

With modern storage configurations that have multiple layers of abstraction between the application and the underlying physical storage devices, it’s debatable whether you can realize any performance gains by creating multiple separate tablespaces. But there still are valid reasons for creating multiple tablespaces for table and index data:

• Backup and recovery requirements may be different for the tables and indexes.

• Indexes may have different storage requirements than the table data.

In addition to separate tablespaces for data and indexes, you sometimes create separate tablespaces for objects of different sizes. For example, if an application has very large tables, you can create an APP_DATA_LARGE tablespace that has a large extent size, and a separate APP_DATA_SMALL tablespace that has a smaller extent size.

Depending on your requirements, you should consider creating separate tablespaces for each application using the database. For example, for an inventory application, create INV_DATA and 72

CHAPTER 4 ■ TABLESPACES AND DATAFILES

INV_INDEX; and for an HR application, create HR_DATA and HR_INDEX. Here are some reasons to consider creating separate tablespaces for each application using the database:

• Applications may have different availability requirements. Separate tablespaces let you take tablespaces offline for one application while not affecting another application.

• Applications may have different backup and recovery requirements. Separate tablespaces let tablespaces be backed up and recovered independently.