Thinking, Fast and Slow

Authors: Daniel Kahneman

Bernoulli’s essay is a marvel of concise brilliance. He applied his new concept of expected utility (which he called “moral expectation”) to compute how much a merchant in St. Petersburg would be willing to pay to insure a shipment of spice from Amsterdam if “he is well aware of the fact that at this time of year of one hundred ships which sail from Amsterdam to Petersburg, five are usually lost.” His utility function explained why poor people buy insurance and why richer people sell it to them. As you can see in the table, the loss of 1 million causes a loss of 4 points of utility (from 100 to 96) to someone who has 10 million and a much larger loss of 18 points (from 48 to 30) to someone who starts off with 3 million. The poorer man will happily pay a premium to transfer the risk to the richer one, which is what insurance is about. Bernoulli also offered a solution to the famous “St. Petersburg paradox,” in which people who are offered a gamble that has infinite expected value (in ducats) are willing to spend only a few ducats for it. Most impressive, his analysis of risk attitudes in terms of preferences for wealth has stood the test of time: it is still current in economic analysis almost 300 years later.

The longevity of the theory is all the more remarkable because it is seriously flawed. The errors of a theory are rarely found in what it asserts explicitly; they hide in what it ignores or tacitly assumes. For an example, take the following scenarios:

Today Jack and Jill each have a wealth of 5 million.

Yesterday, Jack had 1 million and Jill had 9 million.

Are they equally happy? (Do they have the same utility?)

Bernoulli’s theory assumes that the utility of their wealth is what makes people more or less happy. Jack and Jill have the same wealth, and the theory therefore asserts that they should be equally happy, but you do not need a degree in psychology to know that today Jack is elated and Jill despondent. Indeed, we know that Jack would be a great deal happier than Jill even if he had only 2 million today while she has 5. So Bernoulli’s theory must be wrong.

The happiness that Jack and Jill experience is determined by the recent change in their wealth, relative to the different states of wealth that define their reference points (1 million for Jack, 9 million for Jill). This reference dependence is ubiquitous in sensation and perception. The same sound will be experienced as very loud or quite faint, depending on whether it was preceded by a whisper or by a roar. To predict the subjective experience of loudness, it is not enough to know its absolute energy; you also need to know the reference sound to which it is automatically compared. Similarly, you need to know about the background before you can predict whether a gray patch on a page will appear dark or light. And you need to know the reference before you can predict the utility of an amount of wealth.

For another example of what Bernoulli’s theory misses, consider Anthony and Betty:

Anthony’s current wealth is 1 million.

Betty’s current wealth is 4 million.

They are both offered a choice between a gamble and a sure thing.

The gamble: equal chances to end up owning 1 million or 4 million

OR

The sure thing: own 2 million for sure

In Bernoulli’s account, Anthony and Betty face the same choice: their expected wealth will be 2.5 million if they take the gamble and 2 million if they prefer the sure-thing option. Bernoulli would therefore expect Anthony and Betty to make the same choice, but this prediction is incorrect. Here again, the theory fails because it does not allow for the different reference points from which Anthony and Betty consider their options. If you imagine yourself in Anthony’s and Betty’s shoes, you will quickly see that current wealth matters a great deal. Here is how they may think:

Anthony (who currently owns 1 million): “If I choose the sure thing, my wealth will double with certainty. This is very attractive. Alternatively, I can take a gamble with equal chances to quadruple my wealth or to gain nothing.”

Betty (who currently owns 4 million): “If I choose the sure thing, I lose half of my wealth with certainty, which is awful. Alternatively, I can take a gamble with equal chances to lose three-quarters of my wealth or to lose nothing.”

You can sense that Anthony and Betty are likely to make different choices because the sure-thing option of owning 2 million makes Anthony happy and makes Betty miserable. Note also how the sure outcome differs from the worst outcome of the gamble: for Anthony, it is the difference between doubling his wealth and gaining nothing; for Betty, it is the difference between losing half her wealth and losing three-quarters of it. Betty is much more likely to take her chances, as others do when faced with very bad options. As I have told their story, neither Anthony nor Betty thinks in terms of states of wealth: Anthony thinks of gains and Betty thinks of losses. The psychological outcomes they assess are entirely different, although the possible states of wealth they face are the same.

Because Bernoulli’s model lacks the idea of a reference point, expected utility theory does not represent the obvious fact that the outcome that is good for Anthony is bad for Betty. His model could explain Anthony’s risk aversion, but it cannot explain Betty’s risk-seeking preference for the gamble, a behavior that is often observed in entrepreneurs and in generals when all their options are bad.
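The contrast between Anthony and Betty can be made concrete with a few lines of arithmetic. The sketch below is only an illustration: the helper names `expected_wealth` and `as_changes` are hypothetical, and the amounts (in millions) are the ones given in the example. It shows that the final states of wealth are identical for both people, while the same outcomes become gains for Anthony and losses for Betty once they are measured from each person's current wealth.

```python
# Amounts are in millions, taken from the Anthony and Betty example.
GAMBLE = [(0.5, 1.0), (0.5, 4.0)]  # (probability, final wealth)
SURE_THING = 2.0                   # final wealth if the sure thing is chosen


def expected_wealth(prospect):
    """Expected final wealth: all that a states-of-wealth account looks at."""
    return sum(p * wealth for p, wealth in prospect)


def as_changes(reference, prospect):
    """Re-express final wealth as gains (+) or losses (-) from a reference point."""
    return [(p, wealth - reference) for p, wealth in prospect]


print("Expected wealth of the gamble:", expected_wealth(GAMBLE))

for name, current in [("Anthony", 1.0), ("Betty", 4.0)]:
    print(name)
    print("  sure thing as a change:", SURE_THING - current)
    print("  gamble as changes:     ", as_changes(current, GAMBLE))
```

Measured as states, the two people face the same prospects. Measured as changes, Anthony chooses between a sure gain of 1 and a gamble over gains of 0 or 3, while Betty chooses between a sure loss of 2 and a gamble over losses of 3 or 0.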

All this is rather obvious, isn’t it? One could easily imagine Bernoulli himself constructing similar examples and developing a more complex theory to accommodate them; for some reason, he did not. One could also imagine colleagues of his time disagreeing with him, or later scholars objecting as they read his essay; for some reason, they did not either.

The mystery is how a conception of the utility of outcomes that is vulnerable to such obvious counterexamples survived for so long. I can explain it only by a weakness of the scholarly mind that I have often observed in myself. I call it theory-induced blindness: once you have accepted a theory and used it as a tool in your thinking, it is extraordinarily difficult to notice its flaws. If you come upon an observation that does not seem to fit the model, you assume that there must be a perfectly good explanation that you are somehow missing. You give the theory the benefit of the doubt, trusting the community of experts who have accepted it. Many scholars have surely thought at one time or another of stories such as those of Anthony and Betty, or Jack and Jill, and casually noted that these stories did not jibe with utility theory. But they did not pursue the idea to the point of saying, “This theory is seriously wrong because it ignores the fact that utility depends on the history of one’s wealth, not only on present wealth.” As the psychologist Daniel Gilbert observed, disbelieving is hard work, and System 2 is easily tired.

Speaking of Bernoulli’s Errors

“He was very happy with a $20,000 bonus three years ago, but his salary has gone up by 20% since, so he will need a higher bonus to get the same utility.”

“Both candidates are willing to accept the salary we’re offering, but they won’t be equally satisfied because their reference points are different. She currently has a much higher salary.”

“She’s suing him for alimony. She would actually like to settle, but he prefers to go to court. That’s not surprising—she can only gain, so she’s risk averse. He, on the other hand, faces options that are all bad, so he’d rather take the risk.”

Amos and I stumbled on the central flaw in Bernoulli’s theory by a lucky combination of skill and ignorance. At Amos’s suggestion, I read a chapter in his book that described experiments in which distinguished scholars had measured the utility of money by asking people to make choices about gambles in which the participant could win or lose a few pennies. The experimenters were measuring the utility of wealth, by modifying wealth within a range of less than a dollar. This raised questions. Is it plausible to assume that people evaluate the gambles by tiny differences in wealth? How could one hope to learn about the psychophysics of wealth by studying reactions to gains and losses of pennies? Recent developments in psychophysical theory suggested that if you want to study the subjective value of wealth, you should ask direct questions about wealth, not about changes of wealth. I did not know enough about utility theory to be blinded by respect for it, and I was puzzled.

When Amos and I met the next day, I reported my difficulties as a vague thought, not as a discovery. I fully expected him to set me straight and to explain why the experiment that had puzzled me made sense after all, but he did nothing of the kind—the relevance of the modern psychophysics was immediately obvious to him. He remembered that the economist Harry Markowitz, who would later earn the Nobel Prize for his work on finance, had proposed a theory in which utilities were attached to changes of wealth rather than to states of wealth. Markowitz’s idea had been around for a quarter of a century and had not attracted much attention, but we quickly concluded that this was the way to go, and that the theory we were planning to develop would define outcomes as gains and losses, not as states of wealth. Knowledge of perception and ignorance about decision theory both contributed to a large step forward in our research.

We soon knew that we had overcome a serious case of theory-induced blindness, because the idea we had rejected now seemed not only false but absurd. We were amused to realize that we were unable to assess our current wealth within tens of thousands of dollars. The idea of deriving attitudes to small changes from the utility of wealth now seemed indefensible. You know you have made a theoretical advance when you can no longer reconstruct why you failed for so long to see the obvious. Still, it took us years to explore the implications of thinking about outcomes as gains and losses.

In utility theory, the utility of a gain is assessed by comparing the utilities of two states of wealth. For example, the utility of getting an extra $500 when your wealth is $1 million is the difference between the utility of $1,000,500 and the utility of $1 million. And if you own the larger amount, the disutility of losing $500 is again the difference between the utilities of the two states of wealth. In this theory, the utilities of gains and losses are allowed to differ only in their sign (+ or –). There is no way to represent the fact that the disutility of losing $500 could be greater than the utility of winning the same amount—though of course it is. As might be expected in a situation of theory-induced blindness, possible differences between gains and losses were neither expected nor studied. The distinction between gains and losses was assumed not to matter, so there was no point in examining it.
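A small calculation shows why a states-of-wealth account cannot tell gains and losses apart. The sketch below assumes a logarithmic utility function purely for illustration; the function name `utility` and the choice of logarithm are assumptions of this example, not something the passage specifies. Any function of wealth alone would give the same symmetry.

```python
import math


def utility(wealth):
    # Assumption for illustration only: utility is the logarithm of wealth.
    return math.log(wealth)


low, high = 1_000_000, 1_000_500

# Gaining $500 when you own $1,000,000.
gain_utility = utility(high) - utility(low)

# Losing $500 when you own $1,000,500.
loss_utility = utility(low) - utility(high)

print("utility of the gain:", gain_utility)
print("utility of the loss:", loss_utility)
```

The two numbers have the same magnitude and opposite signs, because both are computed from the same pair of wealth states. Nothing in this setup can make the loss weigh more than the gain.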

Amos and I did not see immediately that our focus on changes of wealth opened the way to an exploration of a new topic. We were mainly concerned with differences between gambles with high or low probability of winning. One day, Amos made the casual suggestion, “How about losses?” and we quickly found that our familiar risk aversion was replaced by risk seeking when we switched our focus. Consider these two problems:

Problem 1: Which do you choose?

Get $900 for sure OR 90% chance to get $1,000

Problem 2: Which do you choose?

Lose $900 for sure OR 90% chance to lose $1,000

You were probably risk averse in problem 1, as is the great majority of people. The subjective value of a gain of $900 is certainly more than 90% of the value of a gain of $1,000. The risk-averse choice in this problem would not have surprised Bernoulli.

Now examine your preference in problem 2. If you are like most other people, you chose the gamble in this question. The explanation for this risk-seeking choice is the mirror image of the explanation of risk aversion in problem 1: the (negative) value of losing $900 is much more than 90% of the (negative) value of losing $1,000. The sure loss is very aversive, and this drives you to take the risk. Later, we will see that the evaluations of the probabilities (90% versus 100%) also contribute to both risk aversion in problem 1 and the preference for the gamble in problem 2.

We were not the first to notice that people become risk seeking when all their options are bad, but theory-induced blindness had prevailed. Because the dominant theory did not provide a plausible way to accommodate different attitudes to risk for gains and losses, the fact that the attitudes differed had to be ignored. In contrast, our decision to view outcomes as gains and losses led us to focus precisely on this discrepancy. The observation of contrasting attitudes to risk with favorable and unfavorable prospects soon yielded a significant advance: we found a way to demonstrate the central error in Bernoulli’s model of choice. Have a look:

Problem 3: In addition to whatever you own, you have been given $1,000.

You are now asked to choose one of these options:

50% chance to win $1,000 OR get $500 for sure

Problem 4: In addition to whatever you own, you have been given $2,000.

You are now asked to choose one of these options:

50% chance to lose $1,000 OR lose $500 for sure

You can easily confirm that in terms of final states of wealth—all that matters for Bernoulli’s theory—problems 3 and 4 are identical. In both cases you have a choice between the same two options: you can have the certainty of being richer than you currently are by $1,500, or accept a gamble in which you have equal chances to be richer by $1,000 or by $2,000. In Bernoulli’s theory, therefore, the two problems should elicit similar preferences. Check your intuitions, and you will probably guess what other people did.

- In the first choice, a large majority of respondents preferred the sure thing.

- In the second choice, a large majority preferred the gamble.

The finding of different preferences in problems 3 and 4 was a decisive counterexample to the key idea of Bernoulli’s theory. If the utility of wealth is all that matters, then transparently equivalent statements of the same problem should yield identical choices. The comparison of the problems highlights the all-important role of the reference point from which the options are evaluated. The reference point is higher than current wealth by $1,000 in problem 3, by $2,000 in problem 4. Being richer by $1,500 is therefore a gain of $500 in problem 3 and a loss in problem 4. Obviously, other examples of the same kind are easy to generate. The story of Anthony and Betty had a similar structure.
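The equivalence of problems 3 and 4 in terms of final states can be checked mechanically. The sketch below is an illustration with a hypothetical helper, `final_states`, which adds the up-front gift to every outcome of an option; the dollar amounts are those stated in the two problems.

```python
def final_states(endowment, prospect):
    """Add the up-front gift to each outcome.

    Returns a sorted list of (change in wealth relative to today, probability).
    """
    return sorted((endowment + change, p) for change, p in prospect)


# Each option is a list of (stated outcome, probability).
problem_3 = {"gamble": [(1000, 0.5), (0, 0.5)], "sure": [(500, 1.0)]}
problem_4 = {"gamble": [(-1000, 0.5), (0, 0.5)], "sure": [(-500, 1.0)]}

for option in ("gamble", "sure"):
    states_3 = final_states(1000, problem_3[option])
    states_4 = final_states(2000, problem_4[option])
    print(option, states_3, states_4, "identical:", states_3 == states_4)
```

Both problems reduce to the same pair of options: richer by $1,500 for sure, or equal chances of being richer by $1,000 or by $2,000. Only the framing as gains or as losses differs.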

How much attention did you pay to the gift of $1,000 or $2,000 that you were “given” prior to making your choice? If you are like most people, you barely noticed it. Indeed, there was no reason for you to attend to it, because the gift is included in the reference point, and reference points are generally ignored. You know something about your preferences that utility theorists do not—that your attitudes to risk would not be different if your net worth were higher or lower by a few thousand dollars (unless you are abjectly poor). And you also know that your attitudes to gains and losses are not derived from your evaluation of your wealth. The reason you like the idea of gaining $100 and dislike the idea of losing $100 is not that these amounts change your wealth. You just like winning and dislike losing—and you almost certainly dislike losing more than you like winning.

The four problems highlight the weakness of Bernoulli’s model. His theory is too simple and lacks a moving part. The missing variable is the reference point, the earlier state relative to which gains and losses are evaluated. In Bernoulli’s theory you need to know only the state of wealth to determine its utility, but in prospect theory you also need to know the reference state. Prospect theory is therefore more complex than utility theory. In science complexity is considered a cost, which must be justified by a sufficiently rich set of new and (preferably) interesting predictions of facts that the existing theory cannot explain. This was the challenge we had to meet.

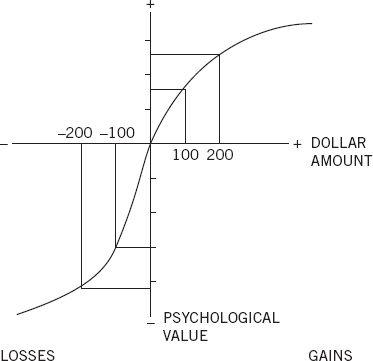

Although Amos and I were not working with the two-systems model of the mind, it’s clear now that there are three cognitive features at the heart of prospect theory. They play an essential role in the evaluation of financial outcomes and are common to many automatic processes of perception, judgment, and emotion. They should be seen as operating characteristics of System 1.

- Evaluation is relative to a neutral reference point, which is sometimes referred to as an “adaptation level.” You can easily set up a compelling demonstration of this principle. Place three bowls of water in front of you. Put ice water into the left-hand bowl and warm water into the right-hand bowl. The water in the middle bowl should be at room temperature. Immerse your hands in the cold and warm water for about a minute, then dip both in the middle bowl. You will experience the same temperature as heat in one hand and cold in the other. For financial outcomes, the usual reference point is the status quo, but it can also be the outcome that you expect, or perhaps the outcome to which you feel entitled, for example, the raise or bonus that your colleagues receive. Outcomes that are better than the reference points are gains. Below the reference point they are losses.

- A principle of diminishing sensitivity applies to both sensory dimensions and the evaluation of changes of wealth. Turning on a weak light has a large effect in a dark room. The same increment of light may be undetectable in a brightly illuminated room. Similarly, the subjective difference between $900 and $1,000 is much smaller than the difference between $100 and $200.

- The third principle is loss aversion. When directly compared or weighted against each other, losses loom larger than gains. This asymmetry between the power of positive and negative expectations or experiences has an evolutionary history. Organisms that treat threats as more urgent than opportunities have a better chance to survive and reproduce.

The three principles that govern the value of outcomes are illustrated by figure 10. If prospect theory had a flag, this image would be drawn on it. The graph shows the psychological value of gains and losses, which are the “carriers” of value in prospect theory (unlike Bernoulli’s model, in which states of wealth are the carriers of value). The graph has two distinct parts, to the right and to the left of a neutral reference point. A salient feature is that it is S-shaped, which represents diminishing sensitivity for both gains and losses. Finally, the two curves of the S are not symmetrical. The slope of the function changes abruptly at the reference point: the response to losses is stronger than the response to corresponding gains. This is loss aversion.

Figure 10
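One way to get a feel for the shape described above is to write down a function that has the three properties and test it. The sketch below is an assumption-laden illustration, not the function behind the figure: the power form, the name `value`, and the parameters `ALPHA` and `LAMBDA` are all chosen here for the example and do not come from this chapter. Outcomes are changes from the reference point, which sits at zero.

```python
ALPHA = 0.88   # assumed curvature; any value below 1 gives diminishing sensitivity
LAMBDA = 2.25  # assumed loss-aversion coefficient; any value above 1 makes losses loom larger


def value(change):
    """Psychological value of a gain (positive) or loss (negative) from the reference point."""
    if change >= 0:
        return change ** ALPHA
    return -LAMBDA * (-change) ** ALPHA


# Reference dependence: no change, no value.
print("value(0)          =", value(0))

# Diminishing sensitivity: $100 -> $200 matters more than $900 -> $1,000.
print("100 -> 200        =", value(200) - value(100))
print("900 -> 1000       =", value(1000) - value(900))

# Loss aversion: losing $100 hurts more than gaining $100 pleases.
print("value(+100)       =", value(100))
print("value(-100)       =", value(-100))

# Problems 1 and 2: compare the sure outcome with 90% of the value of the larger one.
print("gain of 900 vs 90% of gain of 1000:", value(900), 0.9 * value(1000))
print("loss of 900 vs 90% of loss of 1000:", value(-900), 0.9 * value(-1000))
```

Under these assumed parameters the sure gain of $900 is worth more than 90% of the value of $1,000, and the sure loss of $900 is worse than 90% of the value of losing $1,000. That is the pattern of risk aversion for gains and risk seeking for losses described in problems 1 and 2, before any weighting of the probabilities is considered.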

Loss Aversion

Many of the options we face in life are “mixed”: there is a risk of loss and an opportunity for gain, and we must decide whether to accept the gamble or reject it. Investors who evaluate a start-up, lawyers who wonder whether to file a lawsuit, wartime generals who consider an offensive, and politicians who must decide whether to run for office all face the possibilities of victory or defeat. For an elementary example of a mixed prospect, examine your reaction to the next question.

Problem 5: You are offered a gamble on the toss of a coin.

If the coin shows tails, you lose $100.

If the coin shows heads, you win $150.

Is this gamble attractive? Would you accept it?

To make this choice, you must balance the psychological benefit of getting $150 against the psychological cost of losing $100. How do you feel about it? Although the expected value of the gamble is obviously positive, because you stand to gain more than you can lose, you probably dislike it; most people do. The rejection of this gamble is an act of System 2, but the critical inputs are emotional responses that are generated by System 1. For most people, the fear of losing $100 is more intense than the hope of gaining $150. We concluded from many such observations that “losses loom larger than gains” and that people are loss averse.
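The arithmetic behind problem 5 can be laid out in a short sketch. The function names and the loss weight of 2 used below are hypothetical, chosen only to illustrate how a positive expected value can coexist with a gamble that feels unattractive; the passage itself does not give a number for how much more heavily losses are weighted.

```python
def expected_value(win, loss, p_win=0.5):
    """Plain expected value of a win-or-lose gamble, in dollars."""
    return p_win * win - (1 - p_win) * loss


def loss_weighted_value(win, loss, loss_weight, p_win=0.5):
    """Same calculation, but each dollar lost counts loss_weight times as much."""
    return p_win * win - (1 - p_win) * loss_weight * loss


WIN, LOSS = 150, 100

print("expected value:             ", expected_value(WIN, LOSS))
print("value with loss weight 2.0: ", loss_weighted_value(WIN, LOSS, 2.0))
print("value with loss weight 1.5: ", loss_weighted_value(WIN, LOSS, 1.5))
```

With these stakes the gamble breaks even at a loss weight of 1.5. Anyone for whom a lost dollar counts more than one and a half times as much as a dollar won would turn it down, despite the positive expected value.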