I Can Hear You Whisper

Authors: Lydia Denworth

He was able to simplify this a little further, because Bell Labs had also built a voice coder (or vocoder) in which you allowed the base band, the lowest-frequency bandwidth, to do more than its fair share of the work and represent information up to about 1,000 Hz. With this one wider channel doing the heavy lifting on the bottom, Merzenich calculated that he needed a minimum of five narrower bands above it, for a total of six channels. Modern cochlear implants still use only up to twenty-two channels, really a child's toy piano compared to natural hearing, which has the range of a Steinway grand. As Merzenich aptly puts it, listening through a cochlear implant is “like playing Chopin with your fist.” It was not going to match the elegance that comes from natural hearing; “it's another class of thing,” he says. But on the other hand, he was beginning to understand that perhaps it didn't need to be the same.

It didn't need to be the same, that was, if the adult brain was capable of change. Merzenich's cochlear implant work had begun to synthesize with his studies of brain plasticity. “It occurred to me about this time, when we began to see these patients that seemed to understand all kinds of stuff in their cochlear implant, that the recovery in speech understanding was a real challenge to how we thought about how the brain represented information to start with,” he says.

• • •

Unlike Merzenich, Donald Eddington was far from an established scientist when he got involved with cochlear implants at the University of Utah. As an undergraduate studying electrical engineering, he had a job taking care of the monkey colony in an artificial organ laboratory founded by pioneering researchers William Dobelle and Willem Kolff. Since he was hanging around the lab, Eddington began to help with computer programming and decided to do his graduate work there. Dobelle and Kolff were collaborating with the House Ear Institute in Los Angeles. While Bill House was continuing to work on single-channel implants, another Institute surgeon, Derald Brackmann, wanted to develop multichannel cochlear implants. So that's what Don Eddington did for his PhD dissertation.

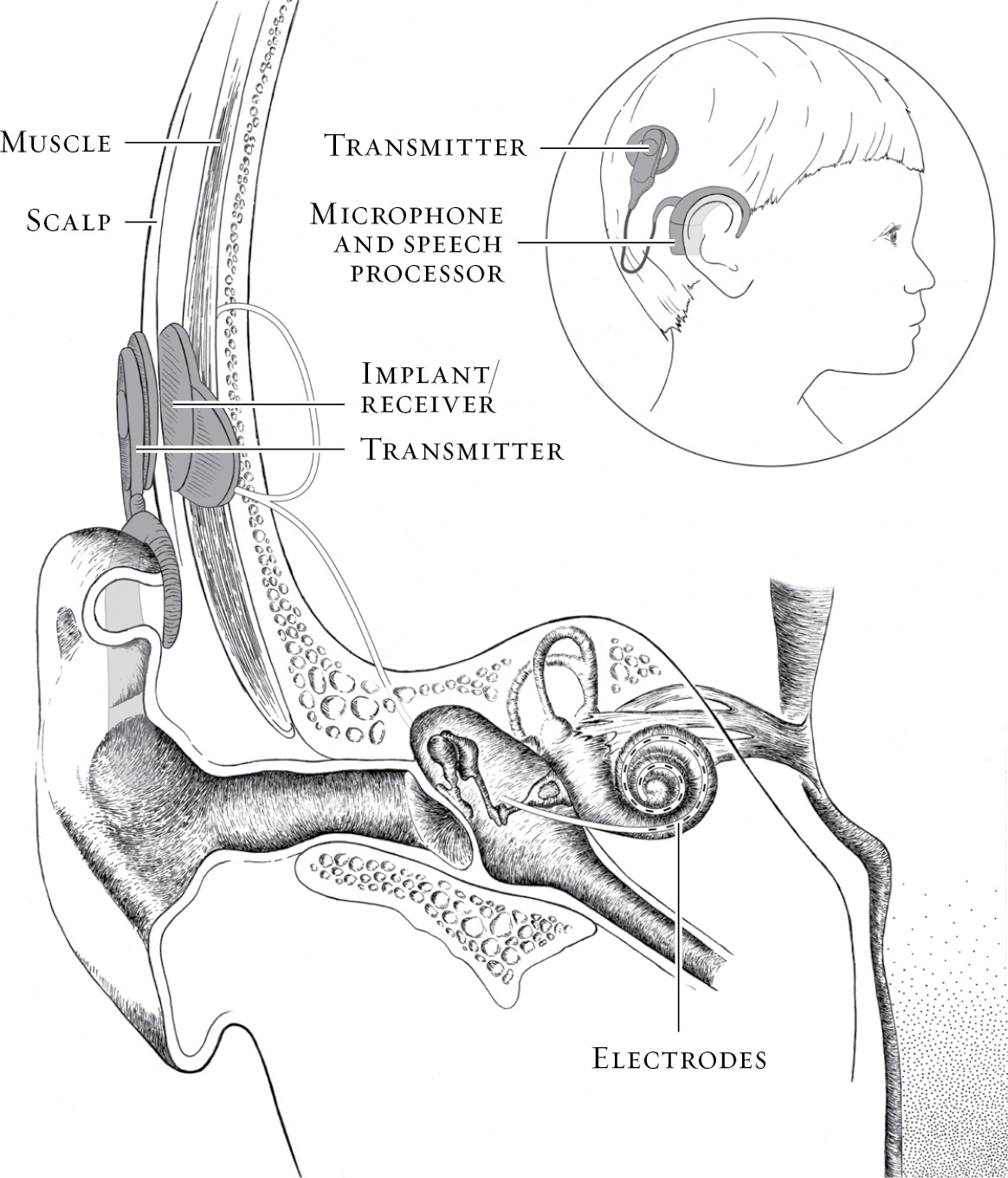

On the question of how to arrange the three basic elements (microphone, electronics, and electrodes) needed to make an implant work like an artificial cochlea, the groups in Australia and San Francisco had arrived at similar solutions. They implanted the electrodes, kept the microphone outside the head, and divided the necessary electronics in two. The external part of the package included the speech processor. Internally, there was a small electronic unit that served as a relay station, receiving the signal from outside by radio transmission through the skin and then generating an electrical signal to pass along the electrodes to the auditory nerve.

In Utah, they did it differently. Eddington's device, ultimately known as the Ineraid, implanted only the electrodes and brought them out to a dime-size plug behind the ear, just as Bill House's prototypes had done. The downside to this approach was that most people found the visible plug rather Frankenstein-like, and the subjects who worked with Eddington could hear only when they were plugged into the computer in the laboratory. From a scientific point of view, however, the strategy had much to recommend it, because it gave the researchers complete control and flexibility. “The wire lead came out and [attached] to a connector that poked through the skin,” says Eddington, who moved to the Massachusetts Institute of Technology in the 1980s. “You could measure any of the electrode characteristics, and you could send any signal you wanted to the electrode.” Rather than extract specific cues from the speech in the processor, as the Australians had, Eddington and his team split the speech signal according to frequency and divided it, all of it, across six channels arranged from low to high.

• • •

Over the next dozen or so years, these various researchers, sometimes in collaboration but mostly in competition, worked to overcome all the fundamental issues of electronics, biocompatibility, tissue tolerance, and speech processing that had to be solved before a cochlear implant could reliably go on the market. “To make a long story short, we solved all of these problems,” Merzenich says. “We resolved issues of safety, we saw that we could implant things, we resolved issues of how we could excite the inner ear locally, we created electrode arrays and so forth. Pretty much in parallel, we all created these models by which we could control stimulation in patterned forms that would lead to the representation of speech.”

As researchers began to see success toward the end of the 1970s and into the 1980s, Merzenich was struck by the fact that initially at least, the coding strategies for each group were very different. “There was no way in hell you could say that they were delivering information in the same form to the brain,” he says. “And yet, people basically were resolving it. How the hell can you account for that? I began to understand that the brain was in there, that there was a miracle in play here. Cochlear implants weren't working so well because the engineering was so fabulous, although it's good; it's still the most impressive device implanted in humans in an engineering sense probably. But fundamentally, it worked so marvelously because God or Mother Nature did it, the brain did it, not because the engineers did it.”

Of course, the engineers like to take credit. “Success has many fathers,” Merzenich jokes. “Failure is an orphan.” Indeed, there are quite a few people who can claim (and do) the “invention” of the cochlear implant. The group in Vienna, led by the husband and wife team of Ingeborg and Erwin Hochmair, successfully implanted the first multichannel implant the year before Graeme Clark, although they then pursued a single-channel device for a time. Most agree the modern cochlear implant represents a joint effort.

In the 1980s, corporations got involved, providing necessary cash and the ability to manufacture workable devices at scale. After the Food and Drug Administration approved Bill House's single electrode device for use in adults in 1984, the Australian cochlear implant, by then manufactured by Cochlear Corporation, followed a year later, again with FDA approval only for adults. The San Francisco device was sold to Advanced Bionics, and went on the market a few years later.

The Utah device was sold to a venture capital firm but was never made available commercially. The plug poking through the skin, acknowledges Eddington, was “a huge barrier to overcome,” and the company never did the work necessary to make a fully implantable device. The third cochlear implant available today is sold by a European company called MED-EL, an outgrowth of the Hochmairs' research group.

The inventors had proved the principle. After it okayed the first implant, the FDA noted that “for the first time a device can, to a degree, replace an organ of the human senses.” Many shared Mike Merzenich's view that the achievement was nearly miraculous.

But there was a group who did not. It was one thing to provide a signal for adults who had once had hearing. A child born deaf was different: scientifically, ethically, and perhaps even culturally. In 1990, the FDA approved the Australian implant for use in children as young as two, and a new battle began. If the inventors of cochlear implants thought they had a fight on their hands getting this far within the scientific community, it was nothing compared to what awaited in the Deaf community when attention turned to implanting children. The very group that all the surgeons and scientists had intended to benefit, the deaf, now began to argue that the cochlear implant was not a miracle but a menace.

SURGERY

In the predawn darkness of a December morning, I watched Alex sleeping in his crib for a moment and gently ran my fingers along the side of his sweet head just above his ear. In a few hours, that spot would be forever changed by a piece of hardware. Glancing out the window, I saw that Mark had the car waiting, so I scooped Alex up, wrapped him in a blanket against the cold, and carried him outside.

The testing was done. Our decision had been made and it had not been a difficult one for us. As I once told a cochlear implant surgeon, “You had me at hello.” Everything about Alex suggested he had a good chance at success. The surgery had been scheduled quickly, just a week or so after our meeting with Dr. Parisier. There was nothing left to do but drive to the hospital down the hushed city streets, where the holiday lights and a blow-up snowman on the corner struck an incongruously cheerful note. Alex pointed to the snowman and smiled sleepily.

Although I knew the risks, surgery didn't scare me unduly. In my family, doctors had always been the good guys. After a traumatic brain injury, my brother's life was saved by a very talented neurosurgeon. Another neurosurgeon had released my mother from years of pain caused by a facial nerve disorder called trigeminal neuralgia. There'd been other incidents (serious and less serious), all of them adding up to a view of medicine as a force for good.

That didn't make it easy to surrender my child to the team of green-gowned strangers waiting under the cold fluorescent light of the operating room. I was allowed to hold him until he was unconscious. As if he could make himself disappear, Alex buried his small face in my chest and clung to me like one of those clip-on koala toys I had as a child. I had to pry him off and cradle him so that they could put the anesthesia mask on him. When he was out, I was escorted back to where Mark waited in an anteroom. For a time, the two of us sat there, dressed in surgical gowns, booties, and caps, tensely holding hands.

“You'll have to wait upstairs,” said a nurse finally, kindly but firmly. “It'll be a few hours.”

Though I wasn't there, I know generally how the surgery went. After a little bit of Alex's brown hair was shaved away, Dr. Parisier made an incision like an upside-down question mark around his right ear, then peeled back the skin. He drilled a hole in the mastoid bone to make a seat for the receiver/stimulator (the internal electronics of the implant) and to be able to reach the inner ear. We had chosen the Australian company, Cochlear, that grew out of Graeme Clark's work. Parisier threaded the electrodes of Cochlear's Nucleus Freedom device into Alex's cochlea and tested them during surgery to be sure they were working. So as to avoid an extra surgery, Parisier also replaced the tubes that Alex had received nearly a year earlier, which added some time to the operation. Then he sutured Alex's skin back over the mastoid bone, leaving the implant in place.

The internal implant looks surprisingly simple. But, of course, the simpler it is, the less can go wrong. It has no exposed hard edges. There's a flat round magnet that looks like a lithium battery in a camera. It is laminated between two round pieces of silicone and attached to the receiver/stimulator, the size of a dollar coin. Then a pair of wires banded with electrodes extends from the plastic circle like a thin, wispy, curly tail.

More than four hours after the surgery began, Alex was returned to us in the recovery room with a big white bandage blooming from the right side of his head. As he had been all through the previous year of testing and travail, he was a trooper. He didn't cry much. He didn't even get sick from the anesthesia, as if somehow he had decided to do this with as little fuss as possible. When he was fully awake, we took him upstairs to the bed he'd been assigned, where I could lie down with him. It was a few more hours before they let us go home, but finally I wrapped him in his blanket and carried him back into the car, which once again Mark had waiting at the curb.

It was done.

PART TWO

SOUND

FLIPPING THE SWITCH

“What did you do today?” I asked.

It was late on a March afternoon, one month before Alex's third birthday. Matthew, Alex, and I were sitting at the table having a snack. Immediately, Matthew launched into a detailed recitation of a day in the life of a four-year-old preschooler.

“We played tag in the yard and I was it and I chased Miles and we had Goldfish for snack and I made a letter 'B' with beans and . . .” Matthew loved to talk. Words poured out of him like water. When he stopped for a breath, I turned to Alex, sitting to my left.

“Alex, what about you?”

I didn't expect an answer, but I always included him. This time, he surprised me.

“Pay car,” he said.

“Pay car. Pay car,” I murmured to myself, trying to figure out what he was saying. “You played cars?”

“Pay car,” he repeated and added, “fren.”

“With friends?” I asked. “Which friends?”

“Ma. A-den.”

“Max? Aidan? You played cars with Max and Aidan,” I exclaimed. “That's great.”

Then Alex began to sing.

“Sun, Sun . . . down me.”

As he sang, he held his hands to form a circle and arced it over his head like the sun moving across the sky.

It was a very shaky but recognizable version of the “Mr. Sun” song, complete with hand movements, which his teachers often sang on rainy days.

I sang it back to him.

“Mr. Sun, Sun, Mr. Golden Sun, please shine down on me!”

His face brightened as he made the sun rise and set one more time.

We're talking! I realized. Alex and I are having a conversation!

• • •

Two months earlier, in the middle of January, four weeks after Alex's surgery (enough time for the swelling to go down and his wound to heal), we had visited the audiologist to have his implant turned on, a process called activation. It means adding external components and flipping the switch.

Although the entire device is called a cochlear implant, only part of it is actually implanted, the internal piece that receives instructions from the processor and stimulates the electrodes that surgeons place in the inner ear. On its own, that piece does nothing. Like a dormant seed that needs water and sunlight to germinate, it waits for a signal. Producing that signal is the job of the external piece, which contains the microphones, battery pack, and most of the electronics. This outer piece has evolved from a bulky body-worn case to, in its smallest iteration, a behind-the-ear device not much bigger than a hearing aid. The intention was always to keep the internal components as simple as possible so that technology upgrades could be kept to the outside.

Alex and I sat down with the audiologists we'd been assigned, Lisa Goldin and Sabrina Vitulano. They had a big box from Cochlear waiting for us with the external parts of the implant nestled inside in thick black protective foam. Because Alex was young and his ears were small, we were going to start by keeping the battery pack and processor in a separate unit, about the size of a small cigarette case, in a cloth pocket that pinned to his shirt. A cable would run from there to the much smaller piece on his ear, and a second cable would lead to the plastic coil and magnet on his head. When he got bigger, we would be able to switch to a battery pack that attached to the behind-the-ear processor and would do away with the pocket and extra cable. Lisa started by attaching Alex's processor to her computer directly with an external cable. She then hooked the microphone behind his ear, though it hadn't yet been turned on, and placed the magnetic coil on his head.

“You try,” she said, removing the coil and handing it to me.

Tentatively, I held the little piece of round flesh-colored plastic above his right ear. As soon as my hand drew near the internal magnet under his skin, the coil fell silently but energetically into position. How odd.

A snail-shaped outline appeared on Lisa's screen, a representation of Alex's cochlea and the electrodes that now snaked through it. Lisa clicked her mouse and a line of dots flashed on, one by one, along the curled inside of the snail. Each electrode was meant to line up with a physical spot in Alex's ear along the basilar membrane, so the electrodes were assigned different frequencies with high pitches at the base of the cochlea and low ones at the apex. The farther the electrodes are inserted, which depends on the patient's physiology and the surgeon's skill, the wider the range of frequencies that patient should hear.

“It's working,” Lisa said. She had sent a signal to the internal components and they were functioning properly.

Next, Lisa pulled up a screen that showed each of the twenty-two electrodes that had been implanted inside Alex's cochlea in bar graph form. Two additional electrodes outside the cochlea would serve as grounds, creating a return path for the current. Lisa was going to set limits to the stimulation each electrode would receive; in effect, she was deciding how wide his window of sound should be. The process was known as MAPping, for Measurable Auditory Percept, and this was to be the first of many such sessions. At the bottom was the threshold, or T-level, the least amount of electrical stimulation that Alex could hear, and at the top was an upper limit known as the C-level, for “comfort” or “clinical,” which would equal the loudest sound Alex could tolerate.

I stared at the computer screen, where each electrode showed up as a long, thin, gray rectangle. The sweet spot, the window of sound between the threshold and comfort levels, was depicted in canary yellow. Lisa could expand or compress the signal by clicking on the T or C level. Right there, in those narrow strips, was all the auditory experience of the world Alex was going to get in this ear.

We all looked at him. His eyes had widened.

“Can you hear that?” Lisa asked.

He nodded tentatively. Since Bill House's film of the young deaf woman Karen receiving her cochlear implant in the 1970s, there have been lots of videos of implant activations. YouTube has more than 37,000. Of the many I've seen, a few stand out. One ten-year-old girl burst into happy tears and clung to her grandmother, who, through her own tears, kept saying, “We did it, baby.” A baby boy, lying in his mother's arms and sucking a pacifier, suddenly stared at his mother's face. His mouth dropped open in amazement, and the pacifier fell out.

Alex stayed true to his quiet, watchful nature. He stared at Lisa and then at me; his expression carried hints of surprise and uncertainty, but nothing more. The only drama was in my gut, tense with anxiety and hope. “It's working,” I repeated to myself. Anticlimactic was okay, as long as it was working.

Because he was hooked up to the computer, I couldn't hear what Alex was hearing; he was having a private electronic conversation of clicks and tones. But he was responding. Sabrina had stacked toys on the table and was holding a basket. Just as in the sound booth, Alex was encouraged to throw a toy into the basket each time he heard anything. Kids, especially young ones, don't always report the softest sounds, so there's some art to assessing their responses. Once Lisa had set the levels to her satisfaction, she activated the microphone. Spinning her desk chair to face Alex, she ran through some spoken language testing, beginning with a series of consonants known as the Ling sounds. Developed by speech pathologist Daniel Ling, this is a test using six phonemes that encompass most of the necessary range of frequencies for understanding speech. Therapists, teachers, and parents use them as a quick gauge of how well a child is hearing.

“Mmmm.” Lisa began with the low-frequency “m” sound, without which it's difficult to speak with normal rhythm or without vowel errors.

“Mmmm,” Alex repeated. He had done this test many times at Clarke by this point.

“Oo,” she said.

“Oo.” He repeated the low-frequency vowel sound.

Then Lisa moved through progressively higher-frequency sounds. “Ee” has low- and high-frequency information. “Ah” is right in the middle of the speech spectrum. “Sh” is moderately high.

“Sss,” she hissed finally. The very high-frequency “s” had been particularly hard for Alex to hear.

“Sss,” he said quietly.

“It's working,” she said again. “See how he responds over the next few days, and if he seems uncomfortable, let me know.”

I put the fancy white Cochlear box, as big as a briefcase and complete with a handle, in the bottom of Alex's stroller. It contained a multitude of technological marvels: spare cables of various sizes, microphone covers, monitor earphones for me to check the equipment myself, cables for connecting to electronics, boxes of high-powered batteries. I thought back to my moment of ineptitude standing on the sidewalk in Brooklyn with Alex's brand-new, tangled hearing aids. That had been nine months earlier, and it was amateur hour compared to this.

“It will take a while,” was the last thing Lisa told me as Alex and I left her office.

“It will take a while,” Dr. Parisier had said when he saw us after the surgery. “His brain has to learn to make sense of what it's hearing.”

Parisier had told us to use only the cochlear implant without the hearing aid in the other ear for a few weeks while Alex adjusted. But before too long, he wanted us to put the hearing aid back in the left ear.

“The more sound the better,” said Parisier.

“Won't the two sides sound different?” I asked.

“They will,” he said. “But he is two years old. I think his brain will be able to adjust. He will make sense of what we give him, and it will be normal for him.”

When I reported Dr. Parisier's instructions about the hearing aid to Alex's teachers at Clarke, I realized just what unfamiliar territory we were in.

“He said that?” said one, a note of incredulity in her tone. “No one does that.”

“They don't?”

She was not quite right, it turned out, but when I investigated, I could understand why she said it. Very few people were using both a hearing aid and a cochlear implant at that time. One reason was that in the early years, you had to be profoundly deaf in both ears to qualify for a cochlear implant. Most people who were eligible didn't have enough hearing in the other ear to get any benefit from a hearing aid. By 2006, when Alex began using his implant, that line of eligibility was shifting slightly. In the next few years, “bimodal” hearing (electrical in one ear, acoustic in the other) would become increasingly common and a major area of research. But I suspect Dr. Parisier's instructions for Alex were based more on instinct than science.

Sitting in the office at Clarke that morning, I realized that even though children had been receiving cochlear implants for more than fifteen years by then, Alex was still on the cutting edge of science. He did have more hearing in his left ear than was usual, at least for the moment. As long as that was true, it made sense to try to get the most out of that ear. But what would the world sound like to him?

We tried to damp down our expectations, but we didn't have to wait long to see results. On the day after Lisa turned on the implant, I was playing with the boys before bedtime. We had lined up their stuffed animals and were pretending to have a party.

“Would you like some cake?” I asked a big brown bear.

“Cake!” said Alex, clear as a bell. The “k” sounds in cake contain a lot of high frequencies. He had never actually spoken them before. Even Matty, at four, knew something important had just happened.

“He said 'cake'!” he exclaimed.

The following day, as I drove Alex up FDR Drive along the east side of Manhattan to Clarke, I let him watch Blue's Clues on our minivan's video system. “Mailbox!” I heard him call out in response to the prompt from the show's host. I spun around to look at him and narrowly avoided sideswiping a yellow cab.

“What did you say?!” He pointed at the screen and repeated “mailbox,” though it sounded more like “may-bos.” Of course, the show was designed to prompt him to say “mailbox.” Jake and Matthew had done that. But Alex never had, despite watching that same Blue's Clues video many, many times.

Two months later, he was able to tell me about playing cars with Max and Aidan complete with the “k” sound in “cars.”

We had years of work ahead of us. Just keeping the processor on the head of an active two-year-old was a challenge. But something was fundamentally different.