I Can Hear You Whisper (25 page)

Authors: Lydia Denworth

On the other hand, Marschark, who has long been a proponent of ASL for deaf kids, has dared to say lately that much of the argument for the benefits of bilingualism is based on emotion more than evidence. When we met, he was about to publish a paper that “was going to make people mad.” It argued that, so far, no one had shown convincingly that bilingual programs were working. “There is zero published evidence, no matter what anybody tells you, that it makes anybody fluent in either language.”

In the earlier edition of Raising and Educating a Deaf Child, published in 1997, Marschark was circumspect about cochlear implants. In the 2007 version, he supports them. What changed? “There wasn't any evidence and now there is,” he told me. “There are things I believed three years ago that I don't believe. It doesn't mean anybody's wrong; it just means we're learning more. That's what science is about.” One of Marschark's favorite lines is: “Don't believe everything you read, even if I wrote it.”

“So,” I ask him, “what do we know?”

What we know, he says, is that it's all about the brain. “Deaf children are not hearing children who can't hear; there are subtle cognitive differences between the two groups,” he says, differences that develop based on experience. Deaf and hard-of-hearing babies quickly learn to pay attention to the visual world: the facial movements of their caregivers, their gestures, and the direction of their gaze. “It's unclear how that visual learning proceeds, but their visual processing skills develop differently than for hearing babies, for whom sights and sounds are connected.”

Marschark and his NTID colleague Peter Hauser have compiled two books on the subject, one called How Deaf Children Learn and a more academic collection, Deaf Cognition. The newly recognized differences affect areas like visual attention, memory, and executive function, the umbrella term for the cognitive control we exert on our own brains. Marschark and Hauser stress these are differences, not deficiencies. Understanding those differences, they argue, just may tell us what we need to do to finally improve the results of deaf education. “We have to consider the interactions between experience, language, and learning,” they write.

Such differences would seem to be obviously true of deaf children with deaf parents, and they are. Neuroscientist Daphne Bavelier worked for years with Helen Neville before heading her own lab at the University of Rochester. Bavelier, together with Peter Hauser, who was a postdoctoral fellow in her lab, has continued Neville's work on visual attention. Among other things, she has shown that deaf children have a better memory for visuospatial information and that they attend better to the periphery and can shift attention more quickly than hearing students. The ability is probably adaptive, as it helps them notice possible sources of danger, other individuals who seek their attention, and images or events in the environment that lead to incidental learning. This skill may have a downside, however, because it also makes students more distractible.

Bavelier and others study deaf children of deaf parents, since those kids receive little or no sound stimulation and they learn ASL from birth. Such a “pure” population makes for cleaner research as it limits the variables that could affect results, and it could be said to show what's possible in an optimal sign language environment. But it also limits the relevance of such work. “Deaf of deaf” are a minority within a minority. The other 95 percent of deaf and hard-of-hearing children are more like Alex: born to hearing parents and falling somewhere along a continuum in terms of sound and language exposure, depending on when a hearing loss is identified, what technology (if any) is used, and how their parents choose to communicate. One thing that no one can control is who your parents are.

Children with cochlear implants are another minority within a minority, though they may not be a minority for long. Research into their cognitive differences has barely begun, but what there is sits squarely at the intersection of technology, neuroscience, and education. “No part of the brain, even for sensory systems like vision and hearing, ever functions in isolation without multiple connections and linkages to other parts of the brain and nervous system,” notes David Pisoni, a contributor to Deaf Cognition. “Deaf kids with cochlear implants are a unique population that allows us to study brain plasticity and reorganization after a period of auditory deprivation and language delay.” He believes differences in cognitive processing may offer new explanations for why some children do so much better with implants than others.

To that end, Pisoni and his colleagues designed a series of studies not to show how accurately a child hears or what percentage of sentences he can repeat correctly, but to try to assess the underlying processes he used to get there. In one set of tests, researchers gave kids lists of digits. The ability to repeat a set of numbers in the same order it's heard (1, 2, 3, 4 or 3, 7, 13, 17, for example) relates to phonological processing ability and what psychologists call rehearsal mechanisms. Doing it backward is thought to reflect executive function skills. Even when they succeeded, which they usually did, children with cochlear implants were three times slower than hearing children in recalling the numbers. Looking for the source of the differences in performance, Pisoni zeroed in on the speed of the verbal process in working memory, essentially the inner voice making notes on the brain's scratch pad. From these and other studies, he concluded that some brain reorganization has already taken place before implantation and that basic information processing skills account for who does well and who doesn't with an implant. Those with automated phonological processing and strong cognitive control are more likely to do better.

For his part, Marschark is particularly interested these days in “language comprehension,” a student's ability to understand what he hears or reads and to recognize when he doesn't get it. Marschark tested this by asking deaf college students to repeat one-sentence questions to one another from Trivial Pursuit cards. Those who communicated orally understood just under half of the time. Those who signed got only 63 percent right. All were encouraged to ask for clarification if necessary, but they rarely did so. Whether they were overly confident or overly shy, the consequences are the same. These kids are missing one-third to one-half of what is said. You can't fill in gaps or ask for clarification unless you know the gaps and misunderstandings exist; and you have to be brave enough to speak up if you do know you missed something.

Hauser has been exploring the connection between language fluency and the development of executive function skills. “Executive function is responsible for the coordination of one's linguistic, cognitive, and social skills,” he says. The fact that many deaf children show delays in age-appropriate language means they may also be delayed in executive function. Too much structure or overprotectiveness (something parents of deaf kids are prone to) compounds the problem by further stifling the development of executive function skills. So far in the study, deaf of deaf are on par with hearing children, “so being deaf itself isn't causing the delay,” Hauser says. When we met, he was starting to collect data on deaf children with hearing parents.

If researchers can continue to pin down such cognitive differences, says Marschark, they might be able to pass that useful knowledge along to teachers. “Bottle them and teach them in teacher training programs,” he suggests. “Here's how you can offset the weaknesses, here's how you can build on the strengths.”

“That would be great,” I acknowledge.

I'd also been struck, however, by how much keeping up with research on deaf education feels like following a breaking news story on the Internet. It leaps in seemingly conflicting directions simultaneously. Furthermore, not all of it feels relevant to Alex.

“Absolutely,” Marschark agrees. “Kids today are not the kids of twenty years ago or ten years ago or even five years ago. Science changes, education changes, and, most important, the kids change. And one of the problems is that we as teachers do not change to keep up with them.”

The one constant, he points out, is that they are all still deaf, and no one (not deaf of deaf or children with cochlear implants or anyone in between) should be considered immune from these cognitive differences. Those with implants, he says, will miss some information, misunderstand some, and depend on vision to a greater extent than hearing children. (The latter is a good thing because using vision and hearing together has been shown to consistently improve performance.) As Marschark and Hauser asked rhetorically in Deaf Cognition: “Are there any deaf children for whom language is not an issue?”

A PARTS LIST OF THE MIND

David Poeppel is pulling books off the groaning shelves in his office at New York University. I've come back to see him to talk about language, the other half of his work.

“Open any of my textbooks,” he says, holding one up. “Why is it that any chapter or image says there are two blobs in the brain and that's supposed to be our neurological understanding of a very complex neurological, cognitive, and perceptual function?”

What he is objecting to is the stubborn persistence of a particular model depicting how language works in the brain. “We're talking about Broca's area and Wernicke's area,” he says. “It was a very, very influential model, one of the most influential ever. But notice . . . It's from 1885.” He laughs ruefully. “It's just embarrassing.”

In the 1800s, the major debate among those who thought about the brain was whether functions were localized in the brain or whether the brain was “essentially one big mush,” as Poeppel puts it. The evidence for the mush theory came from a French physiologist named Jean Pierre Flourens. “He kept slicing off little pieces of chicken brain and the chicken still did chicken-type stuff, so he said it doesn't matter,” says Poeppel.

Then along came poor Phineas Gage. In 1848, Gage was supervising a crew cutting a railroad bed in Vermont. An explosion caused a tamping iron to burst through his left cheek, into his brain, and out through the top of his skull. Amazingly, Gage survived and became one of the most famous case studies in neuroscience. He was blinded in the left eye, but something else happened, too.

“Here's a case where a very specific part of the brain was damaged,” explains Poeppel. “He changed from a churchgoing, very Republicanesque kind of guy to essentially a frat boy: a capricious, hypersexual person. Why is that result important? Because it's about functional localization. It really said when you do something to the brain, you affect the mind in a particular way, not in an all-out way. And that remains one of the main things in neuroscience and neuropsychology. People want to know where stuff is.”

It was a few years after Gage's accident that Pierre Paul Broca, a French physician who wanted to know where stuff was, or more precisely whether it mattered, met his own famous case study. The patient, whose name was Monsieur Leborgne but who was known as Tan, had progressively lost the ability to speak until the only word he could say was “tan.” Yet he could still comprehend language. Tan died shortly after Broca first examined him. After performing an autopsy and discovering a lesion on the left side of the brain, Broca concluded that the affected area was responsible for speech production, thereby becoming the first scientist to clearly label the function of a particular part of the brain. A few years later, German physician Carl Wernicke found a lesion in a different area when he studied the brain of a man who could speak but made no sense. The conclusion was that this man couldn't comprehend the world because of his lesion and therefore that that area governed speech perception. From these two patients, one with an output disorder and the other with an input disorder, a model was born.

“The idea is intuitively very pleasing,” says Poeppel. “What do we know about communication? We say things and we hear things. So there must be a production chunk of the brain and a comprehension chunk of the brain.”

Poeppel turns to his computer screen.

“Here's vision.”

He pulls up a map of a brain's visual system (actually a macaque monkey's visual system, which is very similar to that of a human). Multicolored, multilayered, a jumble of boxes and interconnecting lines, the map is nearly as complex as a wiring diagram for a silicon chip.

“Here's hearing.”

Up pops a map of the auditory system. A little less complicated, it nonetheless has no fewer than a dozen stages and several layers and calls to mind a map of the New York City subway.

“And here's language.”

Up comes the familiar image, the same one that was in so many of the offending textbooks he has pulled off the shelf, showing the left hemisphere of the brain with a circle toward the front marked as Broca's area and a circle toward the back marked as Wernicke's area, with some shading in between and above.

“Really?” says Poeppel. “You think language is reducible to just production and perception. That seems wildly optimistic. It's not plausible.”

The old model persisted because those who thought about neurology didn't usually talk to those who thought about the nooks and crannies of language and how it worked. A reason to have that conversation (to go to your colleague two buildings down, as Poeppel puts it) is that perhaps we can look to the brain to learn something about how language works, but it's also possible that we can use language to learn something about how the brain works.

Poeppel's goal is to create “an inventory of the mind.” His approach to studying language is summed up in a photograph he likes to show at conferences of a dismantled car with all its components neatly lined up on the floor. “What we really have to do is think like a bunch of guys in a garage,” he says. “Our job is to take the thing apart and figure out: What are the parts?”

He began by coming up with a new model. Poeppel and his colleague Greg Hickok folded together all that had been learned in the previous hundred years through old-fashioned studies of deficits and lesions and through newfangled imaging research. “I say to you ‘cat.’ What happens?” asks Poeppel. “We now know. . . . You begin by analyzing the sound you heard, then you extract from that something about speech attributes, you translate it into a kind of code, you recognize the word by looking it up in the dictionary in your head, you do a little brain networking and put things together, you say the word.”

First published in 2000 and then refined, their model applies an existing idea about brain organization to language. It is now widely accepted that in both vision and hearing, the brain divides the labor required to make sense of incoming information into two streams that flow through separate networks. Because those networks flow along the back and belly of the brain, they are called dorsal and ventral streams. Imagine routing the electrical current in a house through both the basement and the attic, with one circuit powering the lights and the other the appliances. In vision, the lower, ventral stream handles the details of shape and color necessary for object recognition and is therefore known as the “what” pathway. The upper, dorsal stream is the “where/how” pathway, which helps us find objects in space and guides our movement. In hearing, there's less agreement on the role of the two streams, but one argument is that they concern identifying sounds versus locating them.

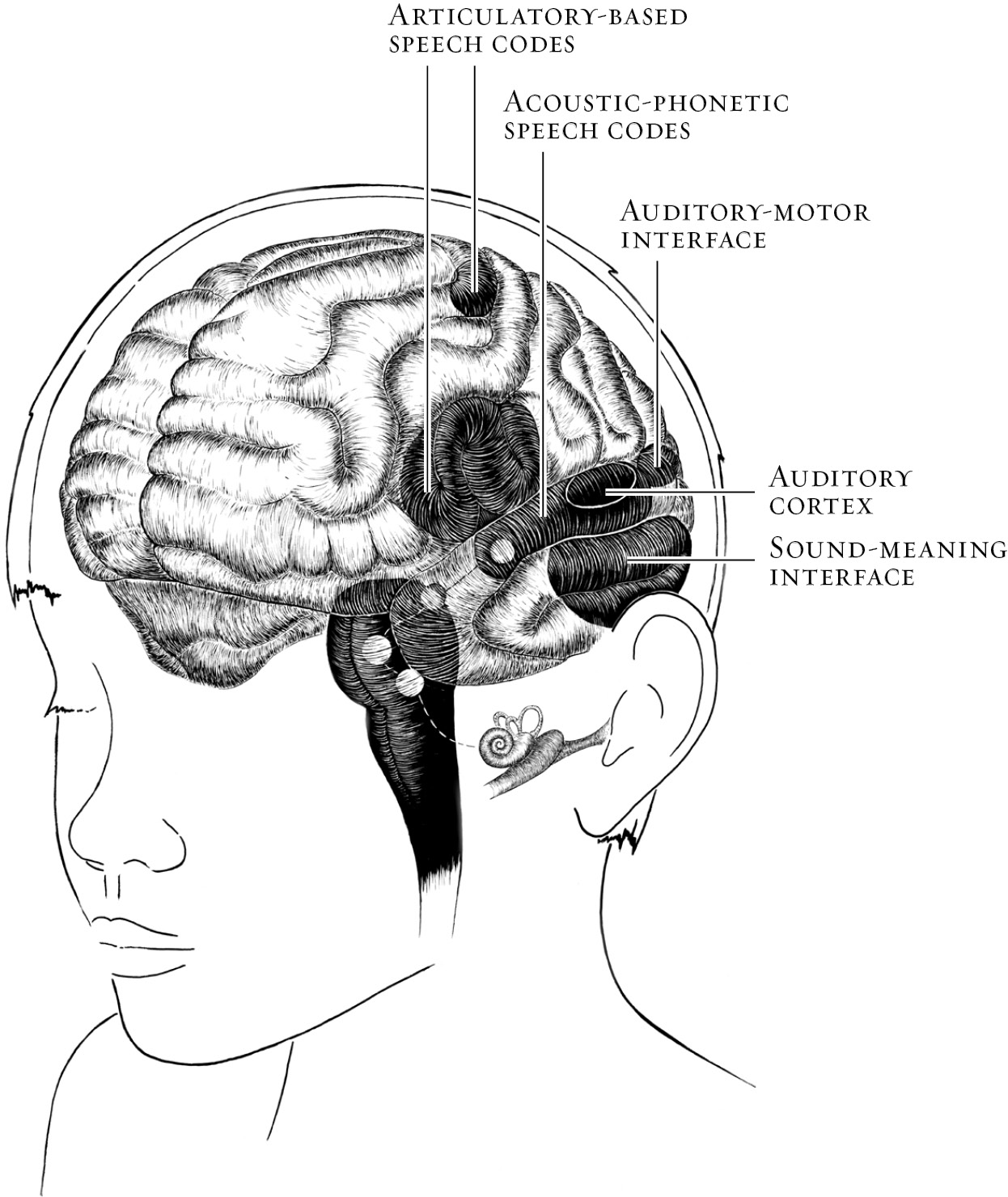

Hickok and Poeppel's model suggests that this same basic dual-stream principle also governs neuronal responses for language and may even be a basic rule of brain physiology. “There are two things the brain needs to do to represent speech information in the brain,” Hickok explains. “It has to understand the words it's hearing and has to be able to reproduce them. Those are two different computational paths.” In their view, the ventral stream is where sound is mapped onto meaning. The dorsal stream, running along the top of the brain, handles articulation or motor processes. Graphically, they represent their model as seven boxes connected by arrows moving in both directions, since information feeds forward and backward. Labeling each box a network or interface (articulatory, sensorimotor, phonological, sound analysis, lexical, combinatorial, and conceptual) emphasizes the interconnectedness of the processing, the fact that these are circuits we're talking about, not single locations with single functions. Some change jobs over time. The spot where sensory information combines with motor processing helps children learn language but is also the area where adults maintain the ability to learn new vocabulary. That same area requires constant stimulation to do its job: It's not only children who use hearing to develop language; adults who lose their hearing eventually suffer a decline in the clarity of their speech if they do not use hearing aids or cochlear implants.

The model also challenges the conventional belief that language processing is concentrated on the left side of the brain. “You start reading and thinking about this more and you think: Can that be right?” says Poeppel. When he and Hickok began looking at images from PET and fMRI, they saw a very different pattern from lesion data alone. “The textbook is telling me I'm supposed to find this blob over here, but every time I look I find the blobs on both sides. What's up with that?” says Poeppel. “Now it's actually the textbook model that the comprehension system is absolutely bilateral. It's the production system that seems more lateralized. You have to look more fine-grained at which computations, which operations, what exactly about the language system is lateralized.”

It was a bold step for Poeppel and Hickok to take on such ingrained thinking. Although it initially came in for attack, their model has gained stature as pretty much the best idea going. “At least it's the most cited,” says Poeppel. “It may be wrong. . . . No, it's absolutely for sure wrong, because how could it possibly not be way more complicated than a bunch of colored boxes?” But at the moment, the model has achieved the enviable position of being the one up-and-coming neuroscientists learn and then try to take apart.

I even found it in a new textbook.

• • •

Notice that this model does not just locate “language,” which, despite how we often talk about it, is not one monolithic thing. Nor does it limit itself to perception and production. Instead, the model's organization parallels the organization of language, which consists of elements like sound, meaning, and grammar that work together to create what we know as English or Chinese.

Each of those linguistic tasks, or subsystems, turns out to involve a different network of neurons.

Phonology is the sound structure of language, though ASL is now thought to have a phonology, too, one that consists of handshapes, movements, and orientation. “During the first year of life, what you acquire is your phonetic repertoire,” says Poeppel, referring to the forty phonemes often identified in English. “If I'm English, I have this many vowels; if I'm Swedish, I have nearly twice as many; that is my inventory to work with: no more, no less. That's purely experience-dependent, because you don't know what language you're going to grow up with.” One thing Poeppel and Hickok observed was that the ability to comprehend words and the ability to perform more basic tasks like identifying phonemes or recognizing rhyming patterns seemed to be separate. This was an important observation because it meant that phonemic discrimination might not be required to understand the meaning of words. Their model puts the phonological network roughly where Wernicke's area is, in the posterior portion of the superior temporal gyrus. As for Broca's area, which is part of the inferior frontal gyrus, they and many others now think of it as a region that handles as many as twelve different language-related processes.

Morphology refers to the fact that words have internal structure: that “unbelievable” is made up of “un-” and “believe” and “-able,” and maybe “believe” has some parts as well. It turns out there's a heated academic debate between psychologists and linguists about whether morphology even exists, “excruciatingly boring for nonexperts, but interesting for those of us who nerd out about these things,” says Poeppel. Morphology is likely processed, says Poeppel, “in the interplay between the temporal and inferior frontal regions.”

The same is true of syntax, the grammar of language: structure at the level of a sentence. Here there is no argument: You have to have sentence structure for language to make sense. It's what tells us who did what to whom, whether man bites dog or dog bites man.

Semantics concerns the meaning of words, individually and in context in a sentence. They are not the same. Even if you know the different meanings of “easy” and “eager,” the brain has to do some extra work to make sense of the difference between the following two sentences where the structure is seemingly identical:

John is easy to please.

John is eager to please.

They differ by exactly one syllable, by one tiny sound sequence, but they mean something entirely different for John. He's either the pleaser or the pleasee. A fluent user of language understands that effortlessly. Lexical ability, too, is located in the ventral stream, specifically in the posterior middle temporal gyrus and the posterior inferior temporal sulcus.

Prosody is the rhythm of speech, the contour of our intonations, and to Poeppel its importance is clear. “How is it that you can distinguish subtle inflections at the end of the sentence and know that it's a question?” says Poeppel. “Syntax doesn't tell you that. This small change in acoustics changes the interpretation completely.” It is also a cue for prominence, according to Janet Werker, a developmental psychologist at the University of British Columbia and colleague of Athena Vouloumanos. In seven-month-old bilingual babies, Werker found that the infants were using prosody (pitch, duration, and loudness) to solve the challenging task of learning both English and Japanese, languages that do not follow the same word order (“eat an apple” in English versus “apple eat” in Japanese). Babies cleverly listen for where the stress is placed in a sentence. Prosody, it seems, plays a part in how you break the sound stream into usable units. That process begins in utero, where a baby can't hear all the specific sounds of his mother's voice but can follow the rhythm of her language. Its location in the brain is similar to that of phonology.