Pro Oracle Database 11g Administration (54 page)

For example, if you’re exporting a schema, a table is created in your account with the name SYS_EXPORT_SCHEMA_NN, where NN is a number that makes the table name unique in the user’s schema.

This status table contains information such as the objects exported/imported, start time, elapsed time, rows, error count, and so on. The status table has over 80 columns.

The status table is dropped by Data Pump upon successful completion of an export or import job. If you use the KILL_JOB interactive command, the master table is also dropped. If you stop a job with the STOP_JOB interactive command, the table isn’t removed and is used in the event you restart the job.

If your job terminates abnormally, the master table is retained. You can delete the status table if you don’t plan to restart the job.

308

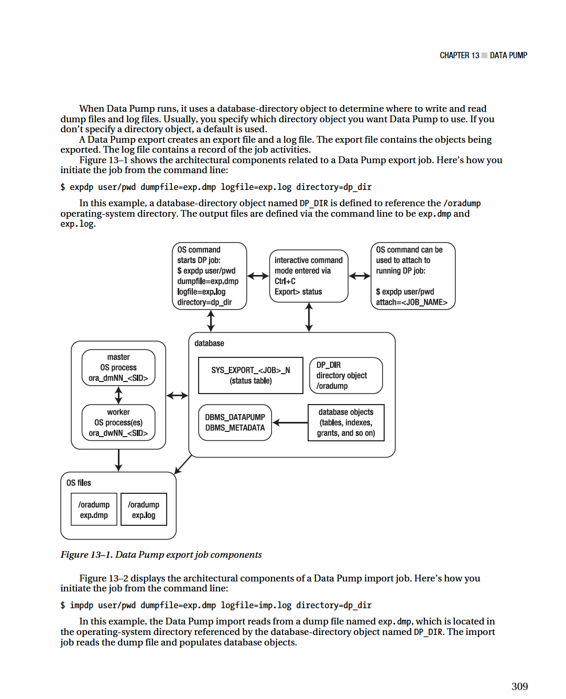

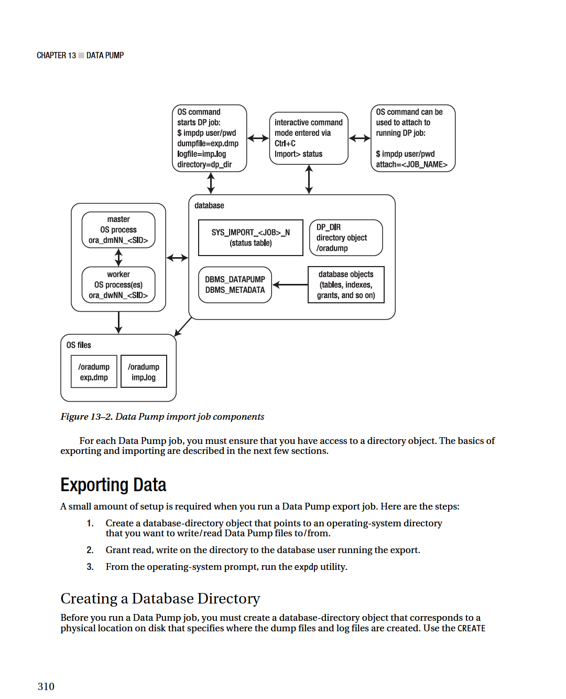

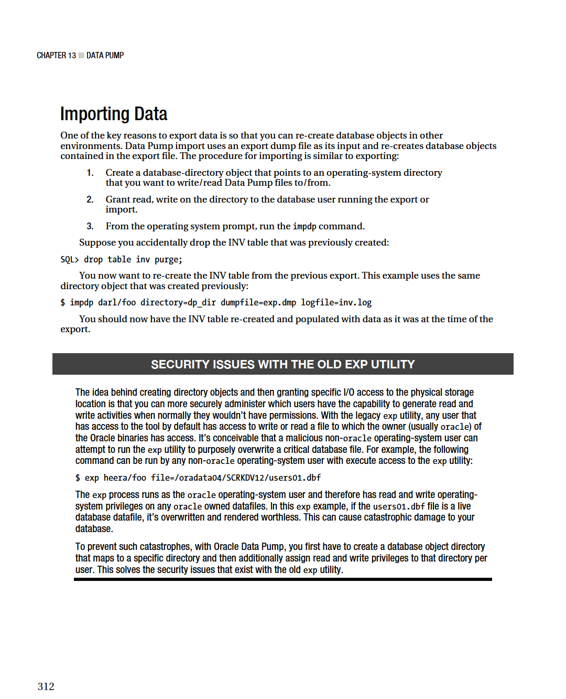

CHAPTER 13 ■ DATA PUMP

DIRECTORY command to accomplish this. This example creates a directory named dp_dir and specifies that it is to map to the /oradump physical location on disk:

SQL> create directory dp_dir as '/oradump';

To view the details of the newly created directory, issue this query: SQL> select owner, directory_name, directory_path from dba_directories; By default, when you install Oracle, one default directory object is created named DATA_PUMP_DIR. If you don’t specify the DIRECTORY parameter when exporting or importing, Oracle by default attempts to use the default database-directory object. The default directory associated with DATA_PUMP_DIR can vary depending on the version of Oracle. On some systems, it may be ORACLE_HOME/rdbms/log; on other systems, it may point to ORACLE_BASE/admin/ORACLE_SID/dpdump. You have to inspect DBA_DIRECTORIES to verify the default location for your system.

Granting Access to the Directory

You need to grant permissions on the database-directory object to a user that wants to use Data Pump.

Use the GRANT statement to allocate the appropriate privileges. If you want a user to be able to read from and write to the directory, you must grant security access as follows: SQL> grant read, write on directory dp_dir to darl;

All directory objects are owned by the SYS user. If you’re using a user account that has the DBA role granted to it, then you have the read and write privileges on the directory object. I usually perform Data Pump jobs with a user that has DBA granted to it (so I don’t need to bother with granting access).

Taking an Export

When the directory object and grants are in place, you can use Data Pump to export information from a database. DBAs typically use exports for point-in-time backups of data and metadata. You can use these exports to either restore database objects or move data to different database environments. Suppose you recently created a table and populated it with data:

SQL> create table inv(inv_id number);

SQL> insert into inv values (123);

Now, you want to export the table. This example uses the previously created directory named DP_DIR. Data Pump uses the directory path specified by the directory object as the location on disk to write the dump file and log file:

$ expdp darl/foo directory=dp_dir tables=inv dumpfile=exp.dmp logfile=exp.log The expdp job creates a file named exp.dmp in the /oradump directory that contains the information required to re-create the INV table and populate it with data as of the time the export was taken. In addition, a log file named exp.log is created in the /oradump directory that contains all the logging information associated with this export job.

If you don’t specify a dump-file name, Data Pump creates a file named expdat.dmp. If a file named expdat.dmp already exists in the directory, then Data Pump throws an error. If you don’t specify a log-file name, then Data Pump creates one named export.log. If a file already exists (named export.log), then Data Pump overwrites it.

311

CHAPTER 13 ■ DATA PUMP

Interactive Command Mode

Data Pump provides an interactive command mode that allows you to monitor the status of a Data Pump job and modify on-the-fly a number of job characteristics. The interactive command mode is most useful for long-running Data Pump operations. In this mode, you can also stop, re-start, or terminate a currently running job. Each of these activities is discussed in the following subsections.

Entering Interactive Command Mode

There are two ways to get to the interactive command-mode prompt:

• Press Ctrl+C in a Data Pump job that you started via expdp or impdp.

• Use the ATTACH parameter to attach to a currently running job

When you run a Data Pump job from the command line, you’re placed in the command-line mode.

You should see output displayed to your terminal as a job progresses. If you want to exit command-line mode, press Ctrl+C. This places you in the interactive command-interface mode. For an export job, the prompt is

Export>

Type in the HELP command to view the export interactive commands available (see Table 13–1 for a description):

Export> help

Type EXIT to leave interactive command mode:

Export> exit

You should now be at the operating-system prompt.

Table 13–1.

Export Interactive Commands

Command Description

ADD_FILE

Adds files to the export dump set.

CONTINUE_CLIENT

Continues with interactive client mode.

EXIT_CLIENT

Exits the client session and returns to the operating-system prompt. Leaves the current job running.

FILESIZE

Defines file size for any subsequently created dump files.

HELP

Displays interactive export commands.

KILL_JOB

Terminates the current job.

PARALLEL

Increases or decreases the degree of parallelism.

START_JOB

Restarts the attached job.

STATUS

Displays the status of the currently attached job.

STOP_JOB

Stops a job from processing (you can later restart it). Using the IMMEDIATE

[=IMMEDIATE]

parameter quickly stops the job, but there may be some incomplete tasks.

313

CHAPTER 13 ■ DATA PUMP

You can press Ctrl+C for either an export or import job. For an import job, the interactive command-mode prompt is

Import>

To view all commands available, type HELP:

Import> help

The interactive command-mode import commands are summarized in table 13–2.

Table 13–2.

Import Interactive Commands

Command Description

CONTINUE_CLIENT

Continues with interactive logging mode.

EXIT_CLIENT

Exits the client session and returns to the operating-system prompt.

Leaves the current job running.

HELP

Displays the available interactive commands.

KILL_JOB

Terminates the job it’s currently connected to in the client.

PARALLEL

Increases or decreases the degree of parallelism.

START_JOB

Restarts a previously stopped job. START_JOB=SKIP_CURRENT restarts the job and skips any operations that were active when the job was stopped.

STATUS

Specifies the frequency at which the job status is monitored. Default mode is 0; the client reports job status changes whenever available in this mode.

STOP_JOB [=IMMEDIATE]

Stops a job from processing (you can later restart it). Using the IMMEDIATE

parameter quickly stops the job, but there may be some incomplete tasks.

Type EXIT to leave the Data Pump status utility:

Import> exit

You should now be at the operating-system prompt.

Attaching to a Running Job

One powerful feature of Data Pump is that you can attach to a currently running job and view its progress and status. If you have DBA privileges, you can even attach to a job if you aren’t the owner. You can attach to either an import or an export job via the ATTACH parameter.

Before you attach to a job, you must first determine the Data Pump job name (and owner name if you’re not the owner of the job). Run the following SQL query to display currently running jobs: SQL> select owner_name, operation, job_name, state from dba_datapump_jobs; 314

CHAPTER 13 ■ DATA PUMP

Here’s some sample output:

OWNER_NAME OPERATION JOB_NAME STATE

--------------- --------------- -------------------- ---------------

DARL IMPORT SYS_IMPORT_SCHEMA_02 EXECUTING

DARL IMPORT SYS_IMPORT_SCHEMA_01 NOT RUNNING

In this example, you’re the owner of the job, so you use the ATTACH parameter without prepending the owner name to it. This is an import job, so you use the impdp command to attach to the job name SYS_IMPORT_SCHEMA_02:

$ impdp darl/engdev attach=sys_import_schema_02

If you aren’t the owner of the job, you attach to the job by specifying the owner name and the job name:

$ impdp system/foobar attach=darl.sys_import_schema_02

You should now see the Data Pump command-line prompt:

Import>

Type STATUS to view the status of the currently attached job:

Import> status

Stopping and Restarting a Job

If you have a currently running Data Pump job that you want to temporarily stop, you can do so by first attaching to the interactive command mode. You may want to stop a job to resolve space issues or performance issues and then, after resolving the issues, restart the job. This example attaches to an import job:

$ impdp darl/foo attach=sys_import_table_01

Now, stop the job using the STOP_JOB parameter:

Import> stop_job

You should see this output:

Are you sure you wish to stop this job ([yes]/no):

Type YES to proceed with stopping the job. You can also specify that the job be stopped immediately:

Import> stop_job=immediate

When you stop a job with the IMMEDIATE option, there may be some incomplete tasks associated with the job. To restart a job, attach to interactive command mode and issue the START_JOB command: Import> start_job

If you want to resume logging job output to your terminal, issue the CONTINUE_CLIENT command: Import> continue_client

315

CHAPTER 13 ■ DATA PUMP

Terminating a Data Pump Job

You can instruct Data Pump to permanently kill an export or import job. First, attach to the job in interactive command mode, and then issue the KILL_JOB command:

Import> kill_job

You should be prompted with the following:

Are you sure you wish to stop this job ([yes]/no):

Type YES to permanently kill the job. Data Pump unceremoniously kills the job and drops the associated status table from the user running the export or import.

Tips for Getting Started

This section describes some common Data Pump features that I regularly use. These techniques can assist you in minimizing command-line errors and help verify what was exported or what objects and metadata are imported.

Use a Parameter File

Instead of typing commands on the command line, in many situations it’s preferable to store the commands in a file and then reference the file when executing Data Pump export or import. Using parameter files makes tasks more repeatable and less prone to errors. You can place the commands in a file once and then reference that file multiple times.