unSpun

A Military Duty to Lie

In the case of war, accurate information is especially hard to come by. Military commanders consider it their duty to deceive the enemy if that will win battles and save lives among their own troops. Sometimes that means deceiving the public as well, as in the 1982 Falklands War, when Britain sent a fleet to recapture these South Atlantic islands from Argentina. When an invasion seemed near, Sir Frank Cooper, an under secretary at the British Ministry of Defence, discouraged reporters from thinking there would be a Normandy-style operation with “the landing ships dashing up to the beaches and chaps storming out and lying on their tummies and wriggling up through barbed wire.” Relying on that, British reporters told the public that “hit and run” attacks were to be expected, rather than a major battle. Reporters in the United States and elsewhere followed suit, and were badly misled, as were the Argentine defenders. They were taken by surprise the following night when a full-scale amphibious operation commenced at points around an inlet called San Carlos Water on East Falkland Island. The roughly sixty Argentine defenders were overwhelmed, and soon at least 4,000 British troops were ashore. From that secure beachhead, they advanced on the island's major settlements and forced an Argentine surrender less than a month later.

Reporters complained bitterly about the way in which they had been manipulated. Sir Terence Lewin, chief of the British defense staff, responded: “I do not see it as deceiving the press or the public; I see it as deceiving the enemy. What I am trying to do is to win. Anything I can do to help me win is fair as far as I'm concerned, and I would have thought that that was what the Government and the public and the media would want, too, provided the outcome was the one we were all after.” That's the way military commanders have seen it since the time of the Greeks and the Trojans. The Chinese general Sun Tzu summed it up 2,500 years ago: “All warfare is based on deception.” We doubt that will change any time soon.

It is especially difficult to get the facts right in the chaos and confusion of war, as the Tonkin Gulf incident amply illustrates. The Prussian general Carl von Clausewitz famously observed in 1832 that leaders in battle operate in a kind of feeble twilight like “a fog or moonshine.” And because so much military information is classified, the public is in even worse shape when it comes to getting accurate information about war. Often the truth emerges only in histories written a generation or more after the event.

The Fog of War

The great uncertainty of all data in war is a peculiar difficulty, because all action must, to a certain extent, be planned in a mere twilight, which in addition not infrequently, like the effect of a fog or moonshine, gives to things exaggerated dimensions and unnatural appearance. What this feeble light leaves indistinct to the sight, talent must discover, or must be left to chance.

General Carl von Clausewitz, On War (1832)

We can't say how history would have turned out had American citizens known the truth about the Maine, or the truth about what happened in the Tonkin Gulf, or the truth about the Iraqi “baby killers” in 1991, or the truth about Saddam Hussein's lack of chemical, biological, or nuclear weapons in 2003. In each case the United States had other reasons for war: the desire to grab pieces of Spain's doddering empire in 1898; the wish to evict an aggressor from Kuwait and its oilfields in 1991. Perhaps none of the wars would have been averted. But then again, had the public known the facts, war fever might well have run lower, and leaders might have acted differently. We can never know for sure. What we do know is that the Spanish-American War, the Vietnam War, and two Iraq wars were begun, at least in part, under false pretenses.

When war talk runs hot, keep an open mind and keep asking yourself, “I wonder how this will look when the history books are written?”

Fortunately, it's not as hard to get current, accurate information about other matters that bear on our well-being. Even in a world of spin, ordinary citizens can call up reliable sources of information quickly and easily on the Internet. Do you want more information on that miracle prescription medication you saw advertised on television? The full list of side effects is only a few keystrokes away at the Food and Drug Administration's website, and reputable consumer sites contain information about whether it works as well as advertised or is any better than cheaper generic drugs. At www.worstpills.org, for example, you can find strong criticisms of even FDA-approved medications by an aggressive consumer advocate, Dr. Sidney Wolfe. Does that tax-avoidance maneuver you're hearing about seem a bit fishy? The IRS has lots of information about tax scams that it would like to share with you. Has your uncle Bob sent you an e-mail with the subject line “You must read this,” which turns out to be a much-forwarded claim that mass marketers are about to run up charges on your cell phone with unwanted sales calls? You can debunk that easily at any of the several websites that specialize in puncturing urban myths, and get authoritative word directly from the Federal Trade Commission itself.

In this chapter, we've been stressing that facts are important. We now turn to how to tell which facts are most important, and how to tell the difference between evidence and random anecdotes. Later on we'll tell you how you can get those facts yourself, using some of the techniques we use every day at FactCheck.org.

Chapter 6

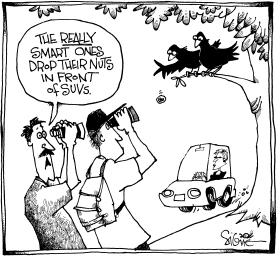

The Great Crow Fallacy

Finding the Best Evidence

Terry Maple wasn't sure, but he thought he might have seen a crow using cars to crack walnuts. He had spotted the crow dropping nuts on the pavement one day as he drove through Davis, California. Maple couldn't know that his curious observation would give rise to a twenty-year legend that would significantly elevate crows' status on the avian IQ scale. We tell the story here as a cautionary tale for those with a tendency to draw fast conclusions from limited evidence.

Maple, a psychology professor at the University of California, Davis, published an article in 1974 describing the single crow and its behavior. The title was “Do Crows Use Automobiles as Nutcrackers?” Maple couldn't answer the question, and it wasn't even clear whether the crow he saw had managed to crack the nut it dropped: “I was, unfortunately, unable to return to the scene for a closer look,” he wrote. The professor correctly called his observation “an anecdote,” meaning an interesting story that suggested crows might use cars to crack walnuts, and that future research might settle the question.

Jump ahead three years, to a November morning in 1977. A biologist named David Grobecker observed a single crow dropping a palm fruit from its beak onto a busy residential street in Long Beach, California. The bird seemed to wait, perched on a lamppost, until a car ran over the fruit and broke it into edible fragments. Then it flew down to eat. This happened twice in the space of about twenty minutes. Grobecker and another biologist, Theodore Pietsch, published an article the following year whose title, “Crows Use Automobiles as Nutcrackers,” suggested they had answered the question posed by Maple. “This is indeed an ingenious adjustment to the intrusion of man's technology,” the authors concluded.

For nearly twenty years, others cited these two published accounts as evidence of exceptional intelligence in crows. Indeed, some crow fanciers remain convinced, largely on the basis of these two anecdotes, that crows have learned how to use passing cars to crack nuts. But it turns out that although crows are smart birds, they are almost certainly not that smart.

How do we know? Because we now have some real data, not single observations or anecdotes. There is a big difference, as the rest of this story illustrates.

The data come from a study published in The Auk, the journal of the American Ornithologists' Union, in 1997 by the biologist Daniel Cristol and three colleagues from the University of California. Cristol's study was based on more than a couple of random observations. He and his colleagues watched crows foraging for walnuts on the streets of Davis for a total of over twenty-five hours spread over fourteen days. Just as they had expected, they saw plenty of crows dropping walnuts on the street. Crows, seagulls, and some other birds often drop food onto hard surfaces to crack it open. An estimated 10,000 crows were roosting nearby, and 150 walnut trees lined the streets where the study was conducted. But did the crows deliberately drop walnuts in the path of oncoming cars? The scientists watched how the crows behaved when cars were approaching; then, soon after, they watched how crows behaved at the same places when cars were not approaching, during an equivalent time period.

What they found, after 400 separate observations, was that there was no real difference. In fact, crows were just slightly more likely to drop a walnut on the pavement when no car was approaching. The birds also were slightly more likely to fly away and leave a nut on the pavement in the absence of a car, the opposite of what one would predict if the birds really expected cars to crack the nuts for them. Furthermore, the scientists noted that they frequently saw crows dropping walnuts on rooftops, on sidewalks, and in vacant parking lots, where there was no possibility of a car coming along. Not once during the study did a car crack even a single walnut dropped by a crow.

The authors concluded, reasonably enough: “Our observations suggest that crows merely are using the hard road surface to facilitate opening walnuts, and their interactions with cars are incidental.” The title of their article: “Crows Do Not Use Automobiles as Nutcrackers: Putting an Anecdote to the Test.” The anecdote flunked.

LESSON:

Don't Confuse Anecdotes with Data

One of our favorite sayings, variously attributed to different economists, is “The plural of ‘anecdote’ is not ‘data.’” That means simply this: one or two interesting stories don't prove anything. They could be far from typical. In this case, it's fun to think that crows might be clever enough to learn such a neat trick as using human drivers to prepare their meals for them. It's also easy to see how spotting a few crows getting lucky can encourage even serious scientists to think the behavior might be deliberate. But we have to regard the term “anecdotal evidence” as something close to an oxymoron, a contradiction in terms.

Now, it's true that the crow debate continues. Millions of people saw a PBS documentary by David Attenborough that showed Japanese crows putting walnuts in a crosswalk and then returning to eat after passing cars had cracked them. That scene was inspired by an article in the Japanese Journal of Ornithology by a psychologist at Tohoku University. But the Japanese article wasn't based on a scientific study; it merely reported more anecdotes: “Because the [crows'] behavior was so sporadic, most observation was made when the author came across the behavior coincidentally on his commute to the campus.” That was two years before Cristol and his colleagues finally published their truly systematic study. So for us, the notion that crows deliberately use cars as nutcrackers has been debunked, until and unless better evidence comes along. Even Theodore Pietsch, who coauthored the 1978 article that said crows do use cars as nutcrackers, has changed his view. “When Grobecker and I wrote that paper so long ago, we did it on a whim, took about an hour to write it, and we were shocked that it was accepted for publication almost immediately, with no criticism at all from outside referees,” he told us. “I would definitely put much more credibility in a study supported by data rather than random observation.” So do we, and so should all of us.

Seeing versus Believing

Avoiding spin and getting a solid grip on hard facts requires not only an open mind and a willingness to consider all the evidence but also some basic skills in telling good evidence from bad. We need to recognize that mere assertion is not fact and that not all facts are good evidence. As counterintuitive as it may seem, the most basic lesson is that our own personal experience isn't necessarily very good evidence. It's natural to trust what we can see with our own eyes, what we can touch with our own hands and hear with our own ears. But our own experience can mislead us.

LESSON:

Remember the Blind Men and the Elephant

It is a natural human tendency to give great weight to our immediate experience, as the ancient fable of the blind men and the elephant should remind us. In the version written by the nineteenth-century American poet John Godfrey Saxe, six blind men feel different parts of the elephant and conclude variously that it is like a snake, a wall, a tree, a fan, a spear, or a rope. Then they argue. Saxe's poem concludes:

And so these men of Indostan,
Disputed loud and long,
Each in his own opinion,
Exceeding stiff and strong,
Though each was partly in the right,
And all were in the wrong!

So, oft in theologic wars,
The disputants, I ween,
Tread on in utter ignorance
Of what each other mean,
And prate about the elephant
Not one of them has seen!

Unless we want to “tread on in utter ignorance,” like the blind men debating about the elephant, we need to bear in mind that our personal experience seldom gives us a full picture. This is especially true when our experience is indirect, filtered by others.

Consider what happened during the Gulf War of 1991. During the forty-three-day air campaign, television viewers at home watched “smart bombs” homing in unerringly on their targets time after time. A nation that had lost more than 58,000 members of its armed forces in Vietnam a generation earlier now seemed able to fight a new kind of war, from the air, putting hardly any soldiers at risk.

Not surprisingly, military briefers were showing the public only their apparent successes, but no amount of skeptical questioning by reporters could undo the enormous impact of what viewers were seeing on their TV screens. Only long after the war did we learn that a lot of “smart” weapons missed their targets and that just 8 percent of the munitions dropped (measured by tonnage) were guided. Contrary to the picture presented on television, nine of ten bombs dropped were old-fashioned “dumb” ones. This was documented in a 1996 report by the General Accounting Office (which has since been renamed the Government Accountability Office). It wasn't until a decade after the 1991 war that weapons precision actually improved enough to match the false impression created by the selective use of video during Desert Storm. The lesson here is that sometimes what you don't know or haven't been told is more important than what you have seen with your own eyes. We humans have a natural tendency to overgeneralize from vivid examples.

The reason we should trust the GAO's report over the evidence offered by our own eyes is that the GAO had access to all the relevant data, including the Pentagon's bomb-damage assessment reports, and it systematically weighed and studied that mass of information. Also, the GAO is an arm of Congress with a reputation for even-handed evaluation and for casting a skeptical eye on the claims of agencies (such as the Pentagon) that seek taxpayer money for their programs. The GAO study relied on evidence; what Pentagon briefers showed Americans during the war was a collection of anecdotes, and carefully selected anecdotes at that. The public saw few if any “smart” bombs miss, when in fact they missed much of the time. War was made to seem less messy, less morally objectionable, than it really is.

The Great Fertilizer Scare

Without real data and hard evidence, it's easy to be led astray in all sorts of matters, especially when a dramatic story involving well-known figures captures our attention. The Great Fertilizer Scare of 1987 will illustrate. It began when a former San Francisco 49ers quarterback, Bob Waters, was diagnosed with amyotrophic lateral sclerosis, also known as Lou Gehrig's disease, and it was reported that two other players from the 1964 team also had ALS. Soon newspapers were carrying a story saying a common lawn fertilizer, Milorganite, which a groundskeeper recalled using on the 49ers practice field, was suspected as a cause.

Milorganite is sewage sludge, recycled by the Milwaukee Metropolitan Sewerage District. It is dried for forty minutes at between 840 and 1,200 degrees Fahrenheit, a process that kills bacteria and viruses, but it does contain tiny amounts of certain elements, including cadmium, which some researchers at the time suspected might be a cause of the disease. A Milwaukee newspaper dug up the fact that two former Milorganite plant workers also had died of ALS (out of 155 total deaths among workers, from all causes) and that 25 ALS patients in the Milwaukee area claimed to have been in contact with Milorganite.

Time magazine and The Associated Press, among others, also ran stories citing a “possible” link between Milorganite and ALS.

But tragic as slow death from ALS certainly is, the story of the three celebrity football players is a bit like our tale of the great crow fallacy. An EPA epidemiologist who headed a team to study the matter, Patricia A. Murphy, said the ALS-Milorganite connection “has been blown up out of some groundskeeper's imagination.” She found no evidence of an increase in ALS in the Milwaukee area or Wisconsin as a whole, and concluded that “the anecdotal stories linking ALS and Milorganite are purely that, i.e., anecdotal with no basis in scientific fact.” Dr. Henry Anderson, Wisconsin's state environmental epidemiologist, agreed. He reported that scientific evidence against Milorganite was lacking and recommended that the state not give high priority to further study. The three 49ers might not even have been exposed to Milorganite. No records were ever found to support the groundskeeper's recollection. Even if the three players had been exposed to Milorganite, they appear to constitute what statisticians call a random cluster, meaning an unusually large grouping that occurs purely by chance. Epidemiologists are trained to look at the whole picture without being misled by such coincidental clusters. Sooner or later, if you toss a coin enough times, it will come up heads ten times in a row. The odds of it coming up heads on any given toss, however, are always 50-50. And the odds that Milorganite had anything to do with ALS are somewhere between very low and zero.
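To make the coin-toss point concrete, here is a minimal sketch in Python. It is our own illustration, not part of the epidemiologists' work, and the function name `prob_streak` is hypothetical. Assuming each toss is independent and 50-50, it computes exactly the probability of at least one run of ten heads somewhere in a sequence of tosses. Ten particular tosses all come up heads only 1 time in 1,024, yet across a thousand tosses the chance that such a streak turns up somewhere rises to well over a third.

```python
from fractions import Fraction


def prob_streak(n_tosses: int, run: int = 10, p_heads: Fraction = Fraction(1, 2)) -> Fraction:
    """Exact probability of at least one run of `run` heads in a row
    somewhere in `n_tosses` independent tosses (hypothetical helper)."""
    # state[k]: probability that no full run has appeared yet and the
    # sequence so far ends in exactly k consecutive heads.
    state = [Fraction(0)] * run
    state[0] = Fraction(1)
    hit = Fraction(0)  # probability mass that has already produced a run
    for _ in range(n_tosses):
        nxt = [Fraction(0)] * run
        for k, pk in enumerate(state):
            if pk == 0:
                continue
            # Tails: the current streak resets to zero.
            nxt[0] += pk * (1 - p_heads)
            # Heads: the streak grows by one; reaching `run` counts as a hit.
            if k + 1 == run:
                hit += pk * p_heads
            else:
                nxt[k + 1] += pk * p_heads
        state = nxt
    return hit


if __name__ == "__main__":
    # Ten specific tosses all heads: (1/2) ** 10.
    print(prob_streak(10))  # 1/1024
    # The same ten-head streak appearing anywhere in longer sequences.
    for n in (100, 1_000):
        print(n, float(prob_streak(n)))
```

The sketch tracks only the length of the current streak, so the calculation stays small even for long sequences, and exact fractions avoid rounding error. The same logic underlies random clusters: look at enough teams, towns, or workplaces, and some unusual grouping is bound to turn up by chance alone.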

Mitch Snyder's “Meaningless” Numbers

Real evidence, unlike attention-grabbing anecdotes, is generated by systematic study. But not all studies are created equal, and some hardly deserve to be called studies at all. Consider a claim made in 1982 that more than 3 million Americans could be homeless. It was widely reported as an estimate that 3 million were homeless, and it was widely believed. Critics of the Reagan administration saw the figure as evidence that the Republican president's policies were creating a social calamity on a scale not seen since the days of the Dust Bowl and the Great Depression. Had 3 million been an accurate number, it would have meant that one American in every seventy-seven was living on the street.

In fact, the figure was close to an outright fabrication. The source was Mitch Snyder, a former advertising man and ex-convict (he served a federal prison term for grand theft, auto) who had turned himself into an advocate for the homeless and an unrelenting detractor of Ronald Reagan. His methods were hardly scientific. In 1980, he and others at the Community for Creative Non-Violence had called up one hundred local clergy, city officials, and others involved in aiding the homeless in twenty-five cities, asking them for a quick estimate of the number of homeless persons in their locality. But an estimate is not a count, and some estimates are more reliable than others. For one thing, CCNV rejected estimates that Snyder deemed too low. On this highly subjective basis, the group concluded that one percent of the entire U.S. population, or about 2.2 million people, were homeless at the time of the survey. Then, after a recession hit the economy, CCNV said, “We are convinced the number of homeless people in the United States could reach 3 million or more in 1983.” But that was nothing more than their guess piled on top of a dubious “average” of their cherry-picked estimates.

While testifying before the House Subcommittee on Housing on May 24, 1984, Snyder was challenged to support his estimate; he all but admitted that he had pulled the number from thin air. He said: “These numbers are in fact meaningless. We have tried to satisfy your gnawing curiosity for a number because we are Americans with western little minds that have to quantify everything in sight, whether we can or not.” But few reporters took note; instead, many repeated the “meaningless” 3 million estimate for years without conveying any sense of its spurious basis. More than seven years after Snyder confessed that his number was “meaningless,” for example, the CBS reporter John Roberts stated flatly that there were “more than three million homeless in America.”

LESSON:

Not All “Studies” Are Equal

Some questions to ask when thinking about a dramatic factual claim:

• Who stands behind the information?

• Does the source have an ax to grind?

• What method did the source use to obtain the information?

• How old are the data?

• What assumptions did those collecting the information make?

• How much guesswork was involved?

Today, we can say with some confidence that homeless people in the United States number in the hundreds of thousands, not in the millions. This is still a lot of people without a home, but it's a fraction of what Snyder claimed.

In late March 2000, the U.S. Census Bureau counted 170,706 persons in homeless shelters and soup lines and at a number of open-air locations, such as under the Brooklyn Bridge, where homeless persons were known to gather. The Census Bureau says that figure shouldn't be taken as a count of all the homeless; it concedes, for example, that it missed anyone who was not using a shelter on that early spring day, or who was sleeping in an open-air location other than those checked. But at least we can be certain that those 170,706 homeless persons were actually counted.

How many were missed is still open to question. Martha Burt, an expert at the Urban Institute who has studied homelessness for years, estimates that homeless people number no fewer than 444,000 and that the figure is probably closer to 842,000. Her estimates are projections based on an unprecedented, onetime Census Bureau survey of thousands of programs providing services to the homeless in seventy-six cities, suburbs, and rural areas. In October and November 1996, the bureau counted the persons being served at a random sample of those service locations and interviewed a random sampling of 4,207 clients to get additional information. The survey wasn't intended or designed to produce a national estimate of the homeless population, and the Census Bureau didn't attempt to derive one. But Burt made a few assumptions and calculated that nationally the number would have been 444,000 homeless adults and children using services in an average week in October and November, the months in which the Census Bureau conducted its head count. Burt also estimated that 842,000 homeless adults and children would have used services nationally in an average week in February, when the weather is much colder. Her estimates are just that, estimates, but they extrapolate from solid data, on the basis of assumptions that are fully disclosed.

Something else gives us confidence in Burt's estimate that between 444,000 and 842,000 Americans are homeless: several other studies using different but still systematic methods have come up with figures that are generally in the same range. When different methods arrive at similar estimates, those estimates are more credible. We call this “convergent evidence.”