Data Mining

Author: Mehmed Kantardzic

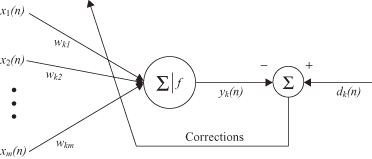

Figure 7.6. Error-correction learning performed through weight adjustments.

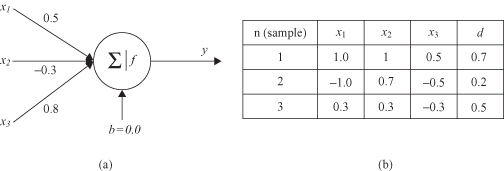

Let us analyze one simple example of the learning process performed on a single artificial neuron in Figure 7.7a, with a set of three training (or learning) examples given in Figure 7.7b.

Figure 7.7. Initialization of the error-correction learning process for a single neuron. (a) Artificial neuron with the feedback; (b) training data set for a learning process.

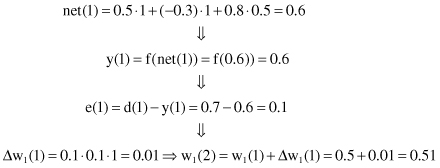

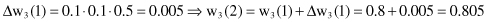

The process of adjusting the weight factors for a given neuron will be performed with the learning rate η = 0.1. The bias value for the neuron is equal to 0, and the activation function is linear. The first iteration of the learning process, and only for the first training example, is performed with the following steps:
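The numerical values for the first example depend on the initial weights and inputs shown in Figure 7.7, which are not reproduced in this text. In general form, each step for training example n applies the error-correction (delta) rule to this linear neuron with zero bias, written here in the notation of Table 7.2:

$$
\begin{aligned}
y(n) &= \sum_{k=1}^{3} w_k(n)\, x_k(n) \\
e(n) &= d(n) - y(n) \\
\Delta w_k(n) &= \eta\, e(n)\, x_k(n), \qquad k = 1, 2, 3 \\
w_k(n+1) &= w_k(n) + \Delta w_k(n)
\end{aligned}
$$

For example, in the n = 2 column of Table 7.2, Δw1(2) = 0.1 · 1.3555 · (−1) ≈ −0.14.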

Similarly, it is possible to continue with the second and third examples (n = 2 and n = 3). The results of the learning corrections Δw, together with the new weight factors w, are given in Table 7.2.

TABLE 7.2. Adjustment of Weight Factors with Training Examples in Figure 7.7b

| Parameter | n = 2 | n = 3 |
|---|---|---|
| x1 | −1 | 0.3 |
| x2 | 0.7 | 0.3 |
| x3 | −0.5 | −0.3 |
| y | −1.1555 | −0.18 |
| d | 0.2 | 0.5 |
| e | 1.3555 | 0.68 |
| Δw1(n) | −0.14 | 0.02 |
| Δw2(n) | 0.098 | 0.02 |
| Δw3(n) | −0.07 | −0.02 |
| w1(n + 1) | 0.37 | 0.39 |
| w2(n + 1) | −0.19 | −0.17 |
| w3(n + 1) | 0.735 | 0.715 |
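As a check on the n = 3 column, the minimal Python sketch below applies one error-correction step. It starts from the weights w(3) listed in the n = 2 column and uses η = 0.1, zero bias, and a linear activation, as stated above. The function name `delta_rule_step` is illustrative only. The updated weights agree with the tabulated w(n + 1) values to the precision shown. The recomputed y and e (about −0.1665 and 0.6665) differ slightly from the rounded table entries (−0.18 and 0.68).

```python
from typing import List, Tuple


def delta_rule_step(w: List[float], x: List[float], d: float,
                    eta: float = 0.1) -> Tuple[float, float, List[float], List[float]]:
    """One error-correction step for a linear neuron with zero bias (illustrative)."""
    y = sum(wk * xk for wk, xk in zip(w, x))      # linear activation, bias = 0
    e = d - y                                     # error signal e(n) = d(n) - y(n)
    dw = [eta * e * xk for xk in x]               # corrections: eta * e(n) * x_k(n)
    w_new = [wk + dwk for wk, dwk in zip(w, dw)]  # w_k(n + 1) = w_k(n) + dw_k(n)
    return y, e, dw, w_new


# Weights w(3) from the n = 2 column of Table 7.2, and the n = 3 training example.
w3 = [0.37, -0.19, 0.735]
x3 = [0.3, 0.3, -0.3]
d3 = 0.5

y, e, dw, w4 = delta_rule_step(w3, x3, d3)
print(f"y = {y:.4f}, e = {e:.4f}")
print("dw =", [round(v, 2) for v in dw])
print("w(4) =", [round(v, 3) for v in w4])
```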

Error-correction learning can be applied to much more complex ANN architectures; its implementation is discussed in Section 7.5, where the basic principles of multilayer feedforward ANNs with backpropagation are introduced. This example only shows how the weight factors change with every training (learning) sample. We gave the results only for the first iteration. The weight-correction process will continue either with new training samples or with the same data samples in the next iterations. When to finish the iterative process is determined by a special parameter or set of parameters called stopping criteria. A learning algorithm may have different stopping criteria, such as a maximum number of iterations, or a threshold on how much the weight factors may change between two consecutive iterations. This learning parameter is very important for the final learning results, and it will be discussed in later sections.
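The two stopping criteria named above can be combined in a short training loop. The sketch below is a minimal Python illustration, not the book's algorithm. It repeats error-correction passes (epochs) over the data for a linear neuron with zero bias. It stops when no weight factor changes by more than a threshold `tol` between two consecutive epochs, or when `max_epochs` is reached. The training data are hypothetical, generated from the weights [2.0, −1.0, 0.5]. The function name and default values are also assumptions.

```python
from typing import List, Sequence, Tuple


def train_linear_neuron(samples: Sequence[Tuple[List[float], float]],
                        w0: List[float], eta: float = 0.1,
                        max_epochs: int = 1000,
                        tol: float = 1e-5) -> Tuple[List[float], int, bool]:
    """Repeat error-correction passes until a stopping criterion holds.

    Stopping criteria (illustrative choices):
      1. at most max_epochs passes over the data, or
      2. no weight factor changes by more than tol between two consecutive epochs.
    """
    w = list(w0)
    for epoch in range(1, max_epochs + 1):
        w_prev = list(w)
        for x, d in samples:
            y = sum(wk * xk for wk, xk in zip(w, x))   # linear neuron, bias = 0
            e = d - y                                  # error for this sample
            w = [wk + eta * e * xk for wk, xk in zip(w, x)]
        change = max(abs(a - b) for a, b in zip(w, w_prev))
        if change < tol:
            return w, epoch, True    # stopped: weight change below threshold
    return w, max_epochs, False      # stopped: iteration limit reached


# Hypothetical training data generated from the weights [2.0, -1.0, 0.5].
true_w = [2.0, -1.0, 0.5]
inputs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
samples = [(x, sum(t * xi for t, xi in zip(true_w, x))) for x in inputs]

w, epochs, converged = train_linear_neuron(samples, w0=[0.0, 0.0, 0.0])
print(f"stopped after {epochs} epochs, converged={converged}")
print("weights:", [round(v, 4) for v in w])
```

With η = 0.1 the weights approach [2.0, −1.0, 0.5] well before the iteration limit. Calling the function with `max_epochs=5` instead stops on the iteration limit and returns `converged=False`.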

7.4 LEARNING TASKS USING ANNS

The choice of a particular learning algorithm is influenced by the learning task that an ANN is required to perform. We identify six basic learning tasks that apply to the use of different ANNs. These tasks are subtypes of general learning tasks introduced in Chapter 4.

7.4.1 Pattern Association

Association has been known to be a prominent feature of human memory since Aristotle, and all models of cognition use association in one form or another as the basic operation. Association takes one of two forms: autoassociation or heteroassociation.

In autoassociation, an ANN is required to store a set of patterns by repeatedly presenting them to the network. The network is subsequently presented with a partial description or a distorted, noisy version of an original pattern, and the task is to retrieve and recall that particular pattern.

Heteroassociation differs from autoassociation in that an arbitrary set of input patterns is paired with another arbitrary set of output patterns. Autoassociation involves the use of unsupervised learning, whereas heteroassociation learning is supervised. For both autoassociation and heteroassociation, there are two main phases in the application of an ANN for pattern-association problems:

1. the storage phase, which refers to the training of the network in accordance with given patterns, and
2. the recall phase, which involves the retrieval of a memorized pattern in response to the presentation of a noisy or distorted version of a key pattern to the network.
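One classic way to realize autoassociation is a Hopfield-style network. It is an illustrative choice here, not a method the text prescribes. In the storage phase, bipolar (+1/−1) patterns are written into a weight matrix with the Hebbian outer-product rule. Note that this is a one-shot sum rather than repeated presentation. In the recall phase, a noisy key is passed through the network repeatedly until its state stops changing. The sketch is minimal pure Python under these assumptions: bipolar patterns, synchronous updates, and a hypothetical step limit. It covers autoassociation only, and the function names and patterns are illustrative.

```python
from typing import List


def store(patterns: List[List[int]]) -> List[List[float]]:
    """Storage phase: Hebbian outer-product rule W = (1/n) * sum_p p p^T, zero diagonal."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:                      # no self-connections
                    W[i][j] += p[i] * p[j] / n
    return W


def recall(W: List[List[float]], probe: List[int], max_steps: int = 10) -> List[int]:
    """Recall phase: synchronous sign updates until the state stops changing."""
    n = len(probe)
    state = list(probe)
    for _ in range(max_steps):
        net = [sum(W[i][j] * state[j] for j in range(n)) for i in range(n)]
        new_state = [1 if v >= 0 else -1 for v in net]
        if new_state == state:                  # stable state reached
            break
        state = new_state
    return state


# Two hypothetical bipolar patterns of length 8 (orthogonal to each other).
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
W = store([p1, p2])

noisy = [-p1[0]] + p1[1:]   # distorted key: p1 with its first element flipped
print(recall(W, noisy) == p1)
```

Synchronous updates keep the code short. With many stored or strongly correlated patterns they can oscillate, which is one reason Hopfield networks are often run with asynchronous updates instead.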

7.4.2 Pattern Recognition

Pattern recognition is also a task that humans perform much better than even the most powerful computers. We receive data from the world around us via our senses and are able to recognize the source of the data, often almost immediately and with practically no effort. Humans perform pattern recognition through a learning process, and so do ANNs.

Pattern recognition is formally defined as the process whereby a received pattern is assigned to one of a prescribed number of classes. An ANN performs pattern recognition by first undergoing a training session, during which the network is repeatedly presented with a set of input patterns along with the category to which each particular pattern belongs. Later, in a testing phase, the network is presented with a new pattern that it has not seen before but that belongs to the same population of patterns used during training. The network is able to identify the class of that particular pattern because of the information it has extracted from the training data. Graphically, patterns are represented by points in a multidimensional space. The entire space, which we call the decision space, is divided into regions, each of which is associated with a class. The decision boundaries are determined by the training process, and they are tested when a new, unclassified pattern is presented to the network. In essence, pattern recognition represents a standard classification task.
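The sketch below illustrates a training session followed by testing on unseen patterns. It uses a single-layer perceptron, one classic ANN classifier chosen here for simplicity, on hypothetical two-dimensional patterns from two classes. The learned weights w and bias b define a linear decision boundary w·x + b = 0, which splits the decision space into two regions. The function names, data, and learning rate are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Pattern = Tuple[List[float], int]


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Class 1 if the pattern lies on the positive side of w.x + b = 0, else class 0."""
    return 1 if sum(wk * xk for wk, xk in zip(w, x)) + b > 0 else 0


def train_perceptron(data: Sequence[Pattern], eta: float = 1.0,
                     max_epochs: int = 100) -> Tuple[List[float], float]:
    """Training session: present the labeled patterns repeatedly, correcting mistakes."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, t in data:
            err = t - predict(w, b, x)          # 0 if correct, +1 or -1 if wrong
            if err != 0:
                w = [wk + eta * err * xk for wk, xk in zip(w, x)]
                b += eta * err
                mistakes += 1
        if mistakes == 0:                       # all training patterns classified correctly
            break
    return w, b


# Hypothetical training patterns: class 1 in the upper right, class 0 in the lower left.
train = [([2.0, 2.0], 1), ([1.0, 3.0], 1), ([3.0, 1.0], 1),
         ([-2.0, -1.0], 0), ([-1.0, -3.0], 0), ([-3.0, -2.0], 0)]
w, b = train_perceptron(train)

# Testing phase: patterns the network has not seen before.
for x in ([4.0, 4.0], [-4.0, -4.0], [0.5, 2.0], [-2.0, 0.5]):
    print(x, "-> class", predict(w, b, x))
```

A single perceptron can only draw one straight boundary. Decision regions with curved or multiple boundaries call for multilayer networks such as those introduced in Section 7.5.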

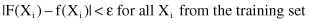

7.4.3 Function Approximation

Consider a nonlinear input–output mapping described by the functional relationship

$$Y = f(X)$$

where the vector X is the input and Y is the output. The vector-valued function f is assumed to be unknown. We are given a set of labeled examples $\{X_i, Y_i\}$, and we have to design an ANN that approximates the unknown function f with a function F that is very close to the original function. Formally:

$$\lVert F(X) - f(X) \rVert < \varepsilon \quad \text{for all } X$$

where ε is a small positive number. Provided that the training set is large enough and the network is equipped with an adequate number of free parameters, the approximation error ε can be made small enough for the task. The approximation problem described here is a perfect candidate for supervised learning.
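The sketch below makes the ε criterion concrete with a one-hidden-layer network of ReLU units, an illustrative choice rather than the text's method. It approximates f(x) = sin x on [0, π], used as a stand-in for the unknown f. Instead of training by gradient descent, it sets the weights directly from the labeled examples (x_i, f(x_i)), so that F is their piecewise-linear interpolant. The names `build_relu_net` and `max_error` are hypothetical. The maximum over a finite grid only estimates the error over all X.

```python
import math
from typing import Callable, List


def relu(z: float) -> float:
    return max(0.0, z)


def build_relu_net(f: Callable[[float], float], a: float, b: float,
                   m: int) -> Callable[[float], float]:
    """Build F(x) = y0 + sum_i c_i * relu(x - x_i) with m hidden units.

    Labeled examples are (x_i, f(x_i)) at m + 1 equally spaced points on [a, b].
    Hidden unit i switches on at x_i, and its output weight c_i is the change in
    slope there, so F is the piecewise-linear interpolant of the examples on [a, b].
    """
    h = (b - a) / m
    xs: List[float] = [a + i * h for i in range(m + 1)]
    ys: List[float] = [f(x) for x in xs]
    slopes = [(ys[i + 1] - ys[i]) / h for i in range(m)]
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, m)]
    offsets = xs[:m]

    def F(x: float) -> float:
        return ys[0] + sum(c * relu(x - xi) for c, xi in zip(weights, offsets))

    return F


def max_error(f: Callable[[float], float], F: Callable[[float], float],
              a: float, b: float, samples: int = 1001) -> float:
    """Largest |F(x) - f(x)| over an evenly spaced grid (empirical estimate)."""
    grid = [a + j * (b - a) / (samples - 1) for j in range(samples)]
    return max(abs(F(x) - f(x)) for x in grid)


for m in (4, 16):
    F = build_relu_net(math.sin, 0.0, math.pi, m)
    print(f"{m:2d} hidden units: max error = {max_error(math.sin, F, 0.0, math.pi):.4f}")
```

More labeled examples and more hidden units (free parameters) shrink the maximum error, which matches the statement above. For a smooth f, the error of linear interpolation falls roughly with the square of the spacing between examples.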

7.4.4 Control

Control is another learning task that can be performed by an ANN. Control is applied to a process or a critical part of a system that has to be maintained in a controlled condition. Consider the control system with feedback shown in Figure 7.8.