Rise of the Robots: Technology and the Threat of a Jobless Future


Author: Martin Ford

The critical point here is that while many factors, such as the level of research and development effort and investment, or the presence of a favorable regulatory environment, can certainly affect the relative position of technology S-curves, by far the most important factor is the set of physical laws that govern the sphere of technology in question. We don’t yet have a disruptive new aircraft technology, and that is primarily due to the laws of physics and the limitations they impose given our current scientific and technical knowledge. If we hope to have another period of rapid innovation across a wide range of technological areas—perhaps something comparable to what occurred between approximately 1870 and 1960—we would need to find new S-curves in all these different areas. That is likely to represent an enormous challenge.

There is one important reason for optimism, however, and that is the positive impact that accelerating information technology will have on research and development in other fields. Computers have already been transformative in many areas. Sequencing the human genome would certainly have been impossible without advanced computing power. Simulation and computer-based design have greatly expanded the potential for experimentation with new ideas in a variety of research areas.

One information technology success story that has had a dramatic and personal impact on all of us has been the role of advanced computing power in oil and gas exploration. As the global supply of easily accessible oil and gas fields has declined, new techniques such as three-dimensional underground imaging have become indispensable tools for locating new reserves. Aramco, the Saudi national oil company, for example, maintains a massive computing center where powerful supercomputers are instrumental in maintaining the flow of oil. Many people might be surprised to learn that one of the most important ramifications of Moore’s Law has been the fact that, at least so far, world energy supplies have kept pace with surging demand.

The advent of the microprocessor has resulted in an astonishing increase in our overall ability to perform computations and manipulate information. Where once computers were massive, slow, expensive, and few in number, today they are cheap, powerful, and ubiquitous. If you were to multiply a single computer’s increase in computational power since 1960 by the number of new microprocessors that have appeared since then, the result would be nearly beyond reckoning. It seems impossible to imagine that such an immeasurable increase in our overall computing capacity won’t eventually have dramatic consequences in a variety of scientific and technical fields. Nonetheless, the primary determinant of the positions of the technology S-curves we’ll need to reach in order to have truly disruptive innovation is still the applicable laws of nature. Computational capability can’t change that reality, but it may well help researchers to bridge some of the gaps.

The economists who believe we have hit a technological plateau typically have deep faith in the relationship between the pace of innovation and the realization of broad-based prosperity; the implication is that if we can just jump-start technological progress on a broad front, median incomes will once again begin increasing in real terms. I think there are good reasons to be concerned that this may not turn out to be the case. To understand why, let’s look at what makes information technology unique and the ways in which it will intertwine with innovations in other areas.

Why Information Technology Is Different

The relentless acceleration of computer hardware over decades suggests that we’ve somehow managed to remain on the steep part of the S-curve for far longer than has been possible in other spheres of technology. The reality, however, is that Moore’s Law has involved successfully climbing a staircase of cascading S-curves, each representing a specific semiconductor fabrication technology. For example, the lithographic process used to lay out integrated circuits was initially based on optical imaging techniques. When the size of individual device elements shrank to the point where the wavelength of visible light was too long to allow for further progress, the semiconductor industry moved on to X-ray lithography.

2

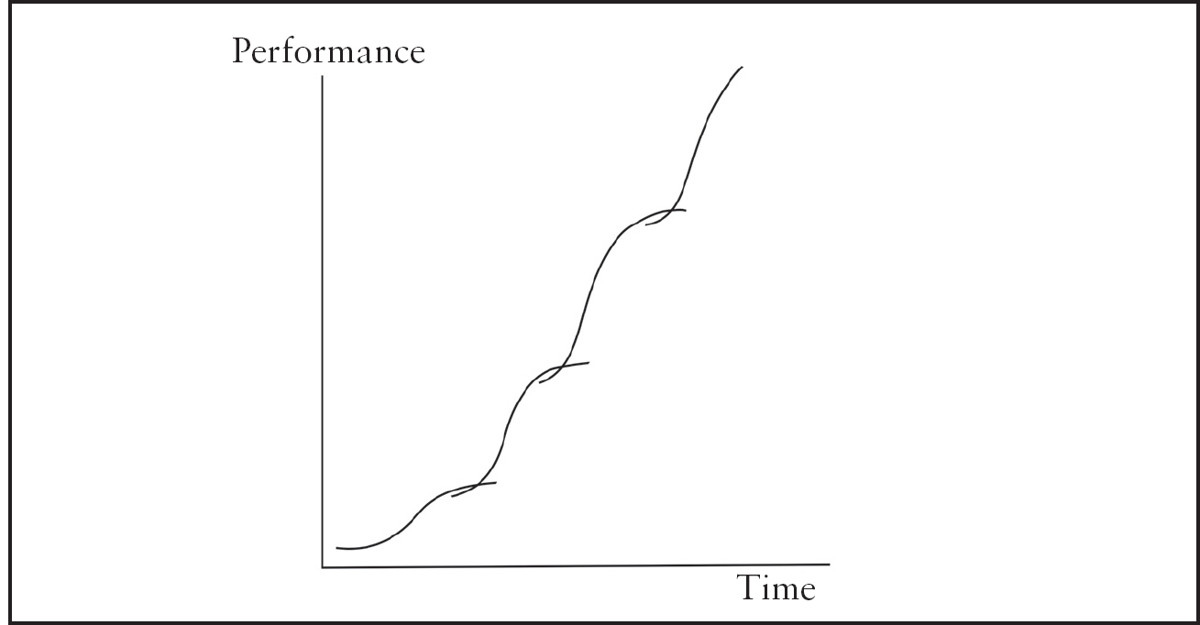

Figure 3.2

illustrates roughly what climbing a series of S-curves might look like.

One of the defining characteristics of information technology has been the relative accessibility of subsequent S-curves. The key to sustainable acceleration has not been so much that the fruit is low-hanging but, rather, that the tree is climbable. Climbing that tree has been a complex process that has been driven by intensive competition and has required enormous investment. There has also been substantial cooperation and planning. To help coordinate all these efforts, the industry publishes a massive document called the International Technology Roadmap for Semiconductors (ITRS), which essentially offers a detailed fifteen-year preview of how Moore’s Law is expected to unfold.

Figure 3.2. Moore’s Law as a Staircase of S-Curves
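The staircase in Figure 3.2 can be mimicked with a toy model in which each fabrication technology contributes one logistic S-curve and the curves are simply added together. The Python sketch below is purely illustrative: the logistic form, the midpoints, the steepness, and the choice to read the sum as log10 of capability (so that each completed step multiplies capability roughly tenfold) are all assumptions, not data from the ITRS or from the figure.

```python
import math


def s_curve(t: float, midpoint: float, steepness: float = 1.0) -> float:
    """One technology S-curve (logistic): slow start, steep middle, plateau at 1."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))


def staircase(t: float, midpoints: list[float], steepness: float = 1.0) -> float:
    """Toy 'staircase': each new fabrication technology adds one more S-curve.

    The result is read as log10 of capability (a hypothetical scaling),
    so every completed step multiplies capability by about 10.
    """
    return sum(s_curve(t, m, steepness) for m in midpoints)


# Hypothetical midpoints for three successive technologies, in arbitrary time units.
MIDPOINTS = [10.0, 20.0, 30.0]

for t in range(0, 41, 5):
    level = staircase(t, MIDPOINTS)
    print(f"t={t:2d}  log10(capability)~{level:4.2f}  capability~{10 ** level:8.1f}x")
```

Printed over time, the level rises steeply near each midpoint and flattens in between, which is the "climb, plateau, climb again" pattern the figure describes; if the midpoints were spaced far apart, the flat stretches would dominate.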

As things stand today, computer hardware may soon run into the same type of challenge that characterizes other areas of technology. In other words, reaching that next S-curve may eventually require a giant—and perhaps even unachievable—leap. The historical path followed by Moore’s Law has been to keep shrinking the size of transistors so that more and more circuitry can be packed onto a chip. By the early 2020s, the size of individual design elements on computer chips will be reduced to about five nanometers (billionths of a meter), and that is likely to be very close to the fundamental limit beyond which no further miniaturization is possible. There are, however, a number of alternate strategies that may allow progress to continue unabated, including three-dimensional chip design and exotic carbon-based materials.[3][*]

Even if the advance of computer hardware capability were to plateau, there would remain a whole range of paths along which progress could continue. Information technology exists at the intersection of two different realities. Moore’s Law has dominated the realm of atoms, where innovation is a struggle to build faster devices and to minimize or find a way to dissipate the heat they generate. In contrast, the realm of bits is an abstract, frictionless place where algorithms, architecture (the conceptual design of computing systems), and applied mathematics govern the rate of progress. In some areas, algorithms have already advanced at a far faster rate than hardware. In a recent analysis, Martin Grötschel of the Zuse Institute in Berlin found that, using the computers and software that existed in 1982, it would have taken a full eighty-two years to solve a particularly complex production planning problem. As of 2003, the same problem could be solved in about a minute—an improvement by a factor of around 43 million. Computer hardware became about 1,000 times faster over the same period, which means that improvements in the algorithms used accounted for approximately a 43,000-fold increase in performance.[4]
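The arithmetic behind the Grötschel figures is easy to reproduce. The short Python sketch below converts eighty-two years into minutes and then divides out the roughly 1,000-fold hardware gain. The 365.25-day year, the exact one-minute runtime, and the helper name `speedup_breakdown` are assumptions made for illustration, and the sketch treats the hardware and algorithmic gains as independent factors that multiply.

```python
# Back-of-the-envelope check of the speedup figures quoted for Grötschel's
# production-planning benchmark (1982 vs. 2003).
MINUTES_PER_YEAR = 365.25 * 24 * 60  # assumes an average year including leap days


def speedup_breakdown(years_before: float, minutes_after: float,
                      hardware_factor: float) -> tuple[float, float]:
    """Return (total_speedup, algorithmic_speedup).

    Assumes the total speedup factors multiplicatively into a hardware part
    and an algorithmic part: total = hardware_factor * algorithmic.
    """
    total = years_before * MINUTES_PER_YEAR / minutes_after
    algorithmic = total / hardware_factor
    return total, algorithmic


total, algo = speedup_breakdown(years_before=82, minutes_after=1, hardware_factor=1_000)
print(f"total speedup:       ~{total / 1e6:.0f} million")  # ~43 million
print(f"algorithmic speedup: ~{algo:,.0f}x")               # ~43,129x
```

The results land on the roughly 43 million and 43,000 figures in the text; the small differences come only from rounding.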

Not all software has improved so quickly. This is especially true of areas where software must interact directly with people. In an August 2013 interview with James Fallows of The Atlantic, Charles Simonyi, the computer scientist who oversaw the development of Microsoft Word and Excel, expressed the view that software has largely failed to leverage the advances that have occurred in hardware. When asked where the most potential for future improvement lies, Simonyi said: “The basic answer is that nobody would be doing routine, repetitive things anymore.”[5]

There is also tremendous room for future progress through finding improved ways to interconnect vast numbers of inexpensive processors in massively parallel systems. Reworking current hardware device technology into entirely new theoretical designs could likewise produce giant leaps in computer power. Clear evidence that a sophisticated architectural design based on deeply complex interconnection can produce astonishing computational capability is provided by what is, by far, the most powerful general computing machine in existence: the human brain. In creating the brain, evolution did not have the luxury of Moore’s Law. The “hardware” of a human brain is no faster than that of a mouse and is thousands to millions of times slower than a modern integrated circuit; its advantage lies entirely in the sophistication of its design.[6]

Indeed, the ultimate in computer capability—and perhaps machine intelligence—might be achieved if someday researchers are able to marry the speed of even today’s computer hardware with something approaching the level of design complexity you would find in the brain. Baby steps have already been taken in that direction: IBM released a cognitive computing chip—inspired by the human brain and aptly branded “SyNAPSE”—in 2011 and has since created a new programming language to accompany the hardware.[7]

Beyond the relentless acceleration of hardware, and in many cases software, there are, I think, two other defining characteristics of information technology. The first is that IT has evolved into a true general-purpose technology. There are very few aspects of our daily lives, and especially of the operation of businesses and organizations of all sizes, that are not significantly influenced by or even highly dependent on information technology. Computers, networks, and the Internet are now inextricably integrated into our economic, social, and financial systems. IT is everywhere, and it’s difficult to even imagine life without it.

Many observers have compared information technology to electricity, the other transformative general-purpose technology that came into widespread use in the first half of the twentieth century. Nicholas Carr makes an especially compelling argument for viewing IT as an electricity-like utility in his 2008 book The Big Switch. While many of these comparisons are apt, the truth is that electricity is a tough act to follow. Electrification transformed businesses, the overall economy, social institutions, and individual lives to an astonishing degree—and it did so in ways that were overwhelmingly positive. It would probably be very difficult to find a single person in a developed country like the United States who did not eventually receive a major upgrade in his or her standard of living after the advent of electric power. The transformative impact of information technology is likely to be more nuanced and, for many people, less universally positive. The reason has to do with IT’s other signature characteristic: cognitive capability.

Information technology, to a degree that is unprecedented in the history of technological progress, encapsulates intelligence. Computers make decisions and solve problems. Computers are machines that can—in a very limited and specialized sense—think.

No one would argue that today’s computers approach anything like human-level general intelligence. But that very often misses the point. Computers are getting dramatically better at performing specialized, routine, and predictable tasks, and it seems very likely that they will soon be poised to outperform many of the people now employed to do these things.

Progress in the human economy has resulted largely from occupational specialization, or as Adam Smith would say, “the division of labour.” One of the paradoxes of progress in the computer age is that as work becomes ever more specialized, it may, in many cases, also become more susceptible to automation. Many experts would say that, in terms of general intelligence, today’s best technology barely outperforms an insect. And yet, insects do not make a habit of landing jet aircraft, booking dinner reservations, or trading on Wall Street. Computers now do all these things, and they will soon begin to aggressively encroach in a great many other areas.

Comparative Advantage and Smart Machines

Economists who reject the idea that machines could someday make a large fraction of our workforce essentially unemployable often base their argument on one of the biggest ideas in economics: the theory of comparative advantage.[8]

To see how comparative advantage works, let’s consider two people. Jane is truly exceptional. After many years of intensive training and a record of nearly unmatched success, she is considered to be one of the world’s leading neurosurgeons. In her gap years between college and medical school, Jane enrolled in one of France’s best culinary institutes and is now also a gourmet cook of rarefied talent. Tom is more of an average guy. He is, however, a very good cook, and has been complimented many times on his skills. Still, he can’t really come close to matching what Jane can do in the kitchen. And it goes without saying that Tom wouldn’t be allowed anywhere near an operating room.

Given that Tom can’t compete with Jane as a cook, and certainly not as a surgeon, is there any way that the two could enter into an agreement that would make them both better off? Comparative advantage says “yes” and tells us that Jane could hire Tom as a cook. Why would she do that when she can get a better result by doing the cooking herself? The answer is that it would free up more of Jane’s time and energy for the one thing she is truly exceptional at (and the thing that brings in the most income): brain surgery.
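Comparative advantage turns on opportunity cost rather than raw skill, and a few made-up numbers make the logic concrete. In the Python sketch below, every figure is hypothetical: Jane's $2,000 surgical hour, Tom's $25 alternative wage, and the hours each needs to cook a meal are illustrative assumptions, not figures from the text. The model also ignores the difference in meal quality that the text mentions; it only asks at what price per meal both people come out ahead.

```python
from dataclasses import dataclass


@dataclass
class Person:
    name: str
    hourly_value_of_alternative: float  # hypothetical: what an hour of their best other work earns
    hours_per_meal: float               # hypothetical: time needed to cook one meal

    def opportunity_cost_of_meal(self) -> float:
        """Income forgone by spending time cooking one meal instead of working."""
        return self.hours_per_meal * self.hourly_value_of_alternative


def mutually_beneficial_price_range(cook: Person, employer: Person):
    """Return (low, high) prices per meal at which both gain, or None if no such price exists.

    The cook gains if paid more than their own opportunity cost; the employer
    gains if the price is below the income they would forgo by cooking themselves.
    """
    low = cook.opportunity_cost_of_meal()
    high = employer.opportunity_cost_of_meal()
    return (low, high) if low < high else None


jane = Person("Jane", hourly_value_of_alternative=2_000.0, hours_per_meal=1.0)
tom = Person("Tom", hourly_value_of_alternative=25.0, hours_per_meal=1.5)

print(jane.hours_per_meal < tom.hours_per_meal)    # True: Jane is the faster cook
print(mutually_beneficial_price_range(tom, jane))  # (37.5, 2000.0)
print(mutually_beneficial_price_range(jane, tom))  # None: the reverse deal helps no one
```

Even though Jane cooks faster, any price per meal between $37.50 and $2,000 leaves both better off under these assumptions, because an hour Jane spends cooking costs her far more in forgone surgery income than an hour and a half costs Tom.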