The Computers of Star Trek


Authors: Lois H. Gresh

Further, we're told that each of the three main processing cores is redundant; that is, they run in “parallel clock-sync with each other, providing 100% redundancy.” We're also told that they do this at rates “significantly higher than the speed of light.” What could this statement possibly mean? It's one thing to say that data is transmitted at lightspeed. But it makes no sense to say that clock cycles per instruction run at lightspeed or that the clock cycle time is significantly higher than lightspeed. This is the same as claiming that a clock runs at 900,000 kilometers per second. Clocks don't run in kilometers, millimeters, or any other spatial unit. Machine speed is measured in operations per second, not in kilometers per second.

On the other hand, we can make the very vague statement that the faster signals travel, the more operations the machine can process per second. If each signal represents one operation, and signals suddenly travel more quickly, then okay, the computer might process more instructions per time unit. But remember that Moore's Law (in one of its versions) says that computer speed doubles every 18 months. If we took an optical computer (where signals travel, say, at lightspeed) and replaced all its circuitry with FTL circuitry (where signals travel three times as fast), we might triple our computer's processing speed. Under Moore's Law, that's a gain of just over two years (tripling is about 1.6 doublings, or roughly 28 months).
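The arithmetic behind that estimate, as a minimal Python sketch: under the 18-month version of Moore's Law, a speedup factor k takes log2(k) doublings. It assumes, as the paragraph does, that processing speed scales directly with signal speed; `months_of_moore` is an illustrative name of our own.

```python
import math

DOUBLING_MONTHS = 18  # the "doubles every 18 months" version of Moore's Law


def months_of_moore(speedup: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months of Moore's-Law progress that yield the same speedup factor."""
    # A speedup of k needs log2(k) doublings, each lasting doubling_months.
    return math.log2(speedup) * doubling_months


months = months_of_moore(3)  # FTL circuitry at three times lightspeed
print(f"{months:.1f} months, or {months / 12:.2f} years")  # 28.5 months, or 2.38 years
```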

And having signals travel at 900,000 kilometers per second adds very little speed if the circuit is microscopic. And wouldn't the system clock run backwards? Wouldn't information arrive before it was sent, and so get sent back again in an endless sequence?
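To see why a microscopic circuit gains so little, compare how long a signal takes to cross a tiny path at lightspeed and at 900,000 km/s with the length of one clock tick. This minimal Python sketch assumes a hypothetical 1-micrometer path and a hypothetical 1 GHz clock; neither figure comes from the text.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s
FTL_KM_PER_S = 900_000.0  # the text's FTL signal speed, roughly 3c


def transit_time_s(path_m: float, speed_km_per_s: float) -> float:
    """Seconds for a signal to cross a path of path_m meters."""
    return path_m / (speed_km_per_s * 1_000)


PATH_M = 1e-6          # hypothetical 1-micrometer on-chip path
CLOCK_PERIOD_S = 1e-9  # hypothetical 1 GHz clock

saved = transit_time_s(PATH_M, C_KM_PER_S) - transit_time_s(PATH_M, FTL_KM_PER_S)
print(f"time saved per hop: {saved:.2e} s")
print(f"fraction of one clock period: {saved / CLOCK_PERIOD_S:.1e}")
```

Under these assumptions the FTL signal saves a couple of femtoseconds per hop, about two millionths of one clock period.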

And...

As McCoy might say, “Damn it, Jim, we're computer scientists, not physicists!”

Let's continue our journey through the Technical Manual. The manual states that if one of the main processing cores in the primary hull fails, the other assumes the total primary computing load for the ship without interruption. It also states that the main processing core in the engineering hull is a backup, in case the two primary units fail. So ... why do the holodeck simulations get interrupted in so many episodes? Why do the food replicators constantly go haywire? In The Next Generation episode “Cost of Living,” two hundred replicators break down.

e

Are all three main processing cores down? If so, how is anything running?
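The failover rule the manual describes amounts to a priority list. This is a minimal Python sketch under our own assumptions: the core names are hypothetical, and we treat one core at a time as carrying the load, a simplification of the manual's picture of cores running in parallel clock-sync.

```python
from typing import Dict, Optional

# Hypothetical names; the order mirrors the manual: the two primary-hull
# cores first, then the engineering-hull core as the last-resort backup.
FAILOVER_ORDER = ["primary_A", "primary_B", "engineering_backup"]


def active_core(healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy core in failover order, or None if all are down."""
    for core in FAILOVER_ORDER:
        if healthy.get(core, False):
            return core
    return None


print(active_core({"primary_A": False, "primary_B": True, "engineering_backup": True}))
# primary_B
print(active_core({"primary_A": False, "primary_B": False, "engineering_backup": False}))
# None
```

Only when every core is down does the sketch return None, which is exactly the case in which nothing aboard should be running.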


Perhaps if we look more closely at the main processing core itself (Figure 2.3), as described in the manual, we'll come up with an answer.

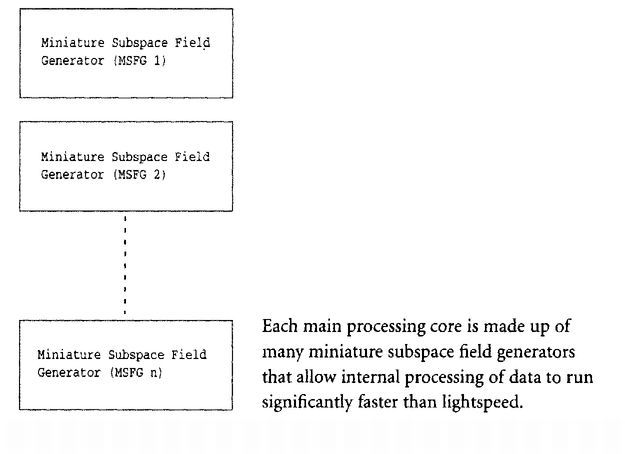

Each main processing core is made up of a series of miniature subspace field generators (MSFG). These create a symmetrical (nonpropulsive) field distortion of 3350 millicochranes within the FTL core elements. According to the manual, “This permits transmission and processing of optical data within the core at rates significantly higher than lightspeed.”

3


Further, we're told that a nanocochrane is a measure of subspace field stress and is equal to one billionth of a cochrane; by the same metric prefixes, a millicochrane is one thousandth of a cochrane. These definitions are about warp speed. A cochrane is the amount of field stress needed to generate a speed of c, the speed of light. One cochrane = c, 2 cochranes = 2c, and so on.

Warp factor 1 = 1 cochrane

Warp factor 2 = 10 cochranes

Warp factor 3 = 39 cochranes

FIGURE 2.3 Main Processing Core

There's even a chart in the Technical Manual that shows “velocity in multiples of lightspeed” on the y-axis and “warp factor” on the x-axis, with “power usage in megajoules/cochrane” and “power usage approaches infinity” designated. We're told that warp 10 is impossible because at warp 10, speed would be “infinite.” (Never mind that the original series' ship sometimes exceeds warp 10. In “The Changeling” (TOS), the Enterprise hits warp 11.)

So 3350 millicochranes = 3.35 cochranes, which corresponds to a warp factor slightly above warp 1 (because 10 cochranes = warp factor 2). The implication is that the MSFGs allow internal processing of data within each main processing core to run significantly faster than lightspeed. Sorry, dear readers. Even if it meant something to say that a computer's processing speed is faster than light, this is still implausible. Just because FTL travel is possible for starships, that doesn't imply that machinery within an FTL field will operate at such speeds.
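The conversion and the lookup against the warp table above can be checked with a minimal Python sketch. It uses only the three warp-factor values listed in the text and simply reports which two warp factors bracket a given field stress; the function names are our own, and no interpolation between warp factors is attempted.

```python
from bisect import bisect_right
from typing import Optional, Tuple

# Warp factor -> cochranes, as listed in the text (warp 1 through 3 only).
WARP_TABLE = [(1, 1.0), (2, 10.0), (3, 39.0)]


def millicochranes_to_cochranes(mc: float) -> float:
    """Metric-prefix conversion: 1,000 millicochranes = 1 cochrane."""
    return mc / 1000.0


def warp_bracket(cochranes: float) -> Tuple[int, Optional[int]]:
    """Return the warp factors whose cochrane values bracket the input.

    The upper bound is None when the value is at or beyond the last table entry.
    """
    values = [c for _, c in WARP_TABLE]
    i = bisect_right(values, cochranes)
    if i == 0:
        raise ValueError("field stress below warp 1")
    lower = WARP_TABLE[i - 1][0]
    upper = WARP_TABLE[i][0] if i < len(WARP_TABLE) else None
    return lower, upper


field = millicochranes_to_cochranes(3350)
print(field, warp_bracket(field))  # 3.35 (1, 2)
```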

FTL signal transmission presumably affects redundancy, since the three cores transfer information from one to another at warp velocity. Anyone accessing one of the three computer cores would find the exact same data on each. Feeding information into one core is the same as feeding it into all three. This scenario is hard to believe. In emergency situations, if either of the main processing cores in the primary hull fails, the other would assume the total primary computing load for the ship without interruption, and so would the backup processing core in the engineering hull. The information in each would be exactly the same. In other words, the linked computers would achieve 100 percent redundancy. But only if we accept the notion that they can “operate at FTL speeds.”

If we don't, then it's impossible for the three computer cores to be 100 percent redundant. Though the machines might operate extremely fast, information transfer would still take nanoseconds or microseconds to complete. Not much time to us. But as we'll discuss in the chapter on navigation and battle, the delay might prove crucial to a starship.
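The size of that delay is easy to estimate. A minimal Python sketch, assuming light-speed signaling between cores: the distances are hypothetical (the text gives none), and we assume that keeping the cores 100 percent redundant requires synchronous mirroring, where a write counts as done only after the other core acknowledges it, which costs one full round trip. Processing overhead at either end is ignored.

```python
C_M_PER_S = 299_792_458  # speed of light in vacuum, m/s


def one_way_delay_ns(distance_m: float) -> float:
    """Light-speed propagation delay over distance_m, in nanoseconds."""
    return distance_m / C_M_PER_S * 1e9


def sync_write_ns(distance_m: float) -> float:
    """A synchronous mirror waits for an acknowledgment: one round trip."""
    return 2 * one_way_delay_ns(distance_m)


# Hypothetical distances between cores; the text gives no figures.
for d in (10, 100, 500):
    print(f"{d:>3} m apart: {one_way_delay_ns(d):7.1f} ns one way, "
          f"{sync_write_ns(d):7.1f} ns per mirrored write")
```

At these distances the delays run from tens of nanoseconds to a few microseconds, the range the paragraph describes.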

Core ElementsT

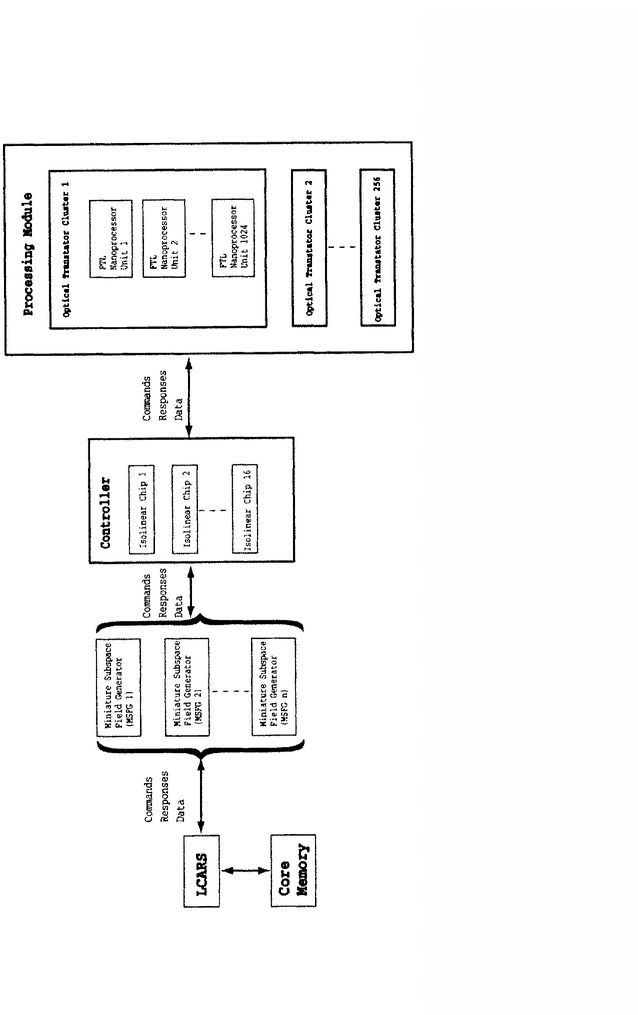

he main processing cores consist of individual processors, called core elements, that actually do the computingârunning programs, interpreting and carrying out instructions, calculating addresses in memory, and so on. According to the manual, “core elements are based on FTL nanoprocessor units arranged into optical transtator clusters of 1,024 segments. In turn, clusters are grouped into processing modules composed of 256 clusters controlled by a bank of sixteen isolinear chips:”

4

he main processing cores consist of individual processors, called core elements, that actually do the computingârunning programs, interpreting and carrying out instructions, calculating addresses in memory, and so on. According to the manual, “core elements are based on FTL nanoprocessor units arranged into optical transtator clusters of 1,024 segments. In turn, clusters are grouped into processing modules composed of 256 clusters controlled by a bank of sixteen isolinear chips:”

4

Let's try to translate this into English. Taking what we know about the ship's computer and combining it with the above description, we come up with Figure 2.4.

FIGURE 2.4 Core Elements

Since the manual devotes just two sentences to core elements, we have to guess what an FTL nanoprocessor is, what an optical transtator cluster is, what the segments are, and what the sixteen isolinear chips do.

Figure 2.4 shows the LCARS communicating with what we may call the controller through the miniature subspace field generators. The controller is the bank of sixteen isolinear chips. These chips may constitute a front-end parallel processing unit that interprets and consolidates commands and responses, and perhaps buffers data for faster transmission. (Though how data buffers can speed up transmission that's already going faster than light is beyond our comprehension.) Again, it looks as if the architecture of the Enterprise computer is a mishmash of mainframe architecture, supercomputer architecture, and a fantasy of FTL circuitry coursing through gigantic metal machinery.
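The controller role guessed at here, a front end that hands work to the processing modules in parallel and consolidates their responses, is the familiar scatter-gather pattern. The Python sketch below is a minimal illustration under our own assumptions: `module_task` and `controller` are hypothetical names, each "module" just sums its slice of the data, and threads stand in for hardware modules (in CPython they show the pattern rather than deliver a real speedup).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

NUM_MODULES = 40  # processing modules per core, per Figure 2.2


def module_task(chunk: List[int]) -> int:
    """Hypothetical processing-module job: sum its slice of the data."""
    return sum(chunk)


def controller(data: List[int], num_modules: int = NUM_MODULES) -> int:
    """Scatter data across modules in parallel, then consolidate the responses."""
    chunks = [data[i::num_modules] for i in range(num_modules)]  # scatter
    with ThreadPoolExecutor(max_workers=num_modules) as pool:
        partials = list(pool.map(module_task, chunks))  # run modules in parallel
    return sum(partials)  # gather and consolidate


print(controller(list(range(1_000))))  # 499500
```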

Let's continue with the transmission of commands, responses, and data from the controller to and from the processing modules. We're told that each processing module has 256 optical transtator clusters, each containing 1,024 FTL nanoprocessor units.

Multiplying these numbers together, we deduce that each Enterprise processing module has 262,144 FTL nanoprocessor units. Remember that the ship has 40 processing modules per main processing core (see Figure 2.2) and that it has three main processing cores, for a total of 120 processing modules. Onboard the entire ship, therefore, we have 262,144 * 120 = 31,457,280 FTL nanoprocessor units.
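The multiplication is easy to check. This Python sketch follows the text in treating each of a cluster's 1,024 segments as one FTL nanoprocessor unit; the constant names are ours.

```python
SEGMENTS_PER_CLUSTER = 1_024  # "optical transtator clusters of 1,024 segments"
CLUSTERS_PER_MODULE = 256     # "processing modules composed of 256 clusters"
MODULES_PER_CORE = 40         # from Figure 2.2
CORES = 3                     # three main processing cores

per_module = SEGMENTS_PER_CLUSTER * CLUSTERS_PER_MODULE
total = per_module * MODULES_PER_CORE * CORES
print(f"{per_module:,} per module, {total:,} shipwide")
# 262,144 per module, 31,457,280 shipwide
```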

That's a lot of processing power! Thirty-one million nanoprocessors certainly beats the 9,200 processors of Intel's 1997 supercomputer.

What's a Nanoprocessor?

Today we use microprocessors, built from microtechnology. We measure parts in micrometers, or millionths of a meter. And small as they are, microprocessors are at least big enough to see.

Today's computer scientists are forging into a new area, called nanotechnology. Nanoprocessors imply measurement in the billionths of a meter. In other words, molecular-based circuitry: invisible computers, and extremely fast.

Star Trek gives us little information about the 31,457,280 nanoprocessors that make up the ship's computer. This is material we'd love to see in future episodes. If the processors are microscopic, why does Geordi crawl through Jefferies tubes and use what appears to be a laser soldering gun to fix computer components? Why not a pair of wire cutters and some needlenose pliers? In short, why is manual tweaking necessary? A computer system this sophisticated should fix itself. A main thrust of nanotechnology is that the microscopic components operate as tiny factories. They repair themselves, build new components, and learn through artificial intelligence. They are much like the nanites in the episode “Evolution” (TNG). Speaking of which, it's most peculiar that people using nanotechnology computers would be so shocked by the discovery of the nanites.

Even Data's manual adjustments are pretty silly (though a lot of fun to watch). For example, in “The Schizoid Man” (TNG), Geordi checks Data's programming with a device that looks like a toaster. Surely an android with self-diagnostics and self-repair, and with a fully redundant and highly complex positronic neural net, would not require a huge toaster-like device as a repair tool!

Also, how does Worf (in “A Fistful of Datas,” TNG) rig up wires between a communicator and a personal weapons shield? Is it possible to connect wires from something that's invisible to a wireless communicator using a molecular-sized energy source?

Memory

At the end of the twentieth century, memory comes in several varieties. RAM, which can be accessed at the byte level, contains instructions and data used by the processors. Flash RAM also contains instructions and data but is read and written in blocks rather than bytes. Storing files, such as this chapter, is done using disk drives, floppies, Zip disks, CDs, and tapes.
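The byte-versus-block distinction matters for cost: changing one byte of block-oriented storage means rewriting the whole block. The toy Python model below illustrates this with a hypothetical 512-byte block size and class names of our own; real flash memory must also erase blocks before rewriting them, which this sketch ignores.

```python
BLOCK_SIZE = 512  # hypothetical block size in bytes


class ByteMemory:
    """Byte-addressable store, like RAM: one byte can be written directly."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        self.bytes_written = 0

    def write_byte(self, addr: int, value: int) -> None:
        self.data[addr] = value
        self.bytes_written += 1


class BlockMemory:
    """Block-oriented store: reads and writes happen a whole block at a time."""

    def __init__(self, num_blocks: int) -> None:
        self.blocks = [bytearray(BLOCK_SIZE) for _ in range(num_blocks)]
        self.bytes_written = 0

    def write_byte(self, addr: int, value: int) -> None:
        # Read-modify-write: fetch the block, patch one byte, write it all back.
        index, offset = divmod(addr, BLOCK_SIZE)
        block = bytearray(self.blocks[index])  # read the whole block
        block[offset] = value                  # modify one byte
        self.blocks[index] = block             # write the whole block back
        self.bytes_written += BLOCK_SIZE


ram, flash = ByteMemory(4096), BlockMemory(8)
ram.write_byte(1000, 0x2A)
flash.write_byte(1000, 0x2A)
print(ram.bytes_written, flash.bytes_written)  # 1 512
```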

The core memory consists of isolinear optical storage chips, which Trek defines as nanotech devices. Under the heading “Core Memory,” the Technical Manual says that “Memory storage for main core usage is provided by 2,048 dedicated modules of 144 isolinear optical storage chips.... Total storage capacity of each module is about 630,000 kiloquads, depending on software configuration.”

5

Figure 2.5 shows how we see core memory.
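Taking the manual's figures at face value, here is a quick Python tally of the core memory. The manual never says how many bytes a kiloquad holds, so the sketch stops at kiloquads, and the 630,000 figure is itself approximate ("about," "depending on software configuration"). The constant names are ours.

```python
MODULES = 2_048                 # "2,048 dedicated modules"
CHIPS_PER_MODULE = 144          # "of 144 isolinear optical storage chips"
KILOQUADS_PER_MODULE = 630_000  # "about 630,000 kiloquads" per module

chips = MODULES * CHIPS_PER_MODULE
kiloquads = MODULES * KILOQUADS_PER_MODULE
print(f"{chips:,} isolinear chips, roughly {kiloquads:,} kiloquads")
# 294,912 isolinear chips, roughly 1,290,240,000 kiloquads
```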

Oddly enough, no one on Star Trek ever mentions disk space, which is where files are actually stored. If core memory really means disk space and not RAM, then where's the RAM? The manual explicitly references “memory access” to and from the LCARS when discussing kiloquads. In today's world of computers, memory buses do “memory access” to memory chips, or RAM, not to hard drives.

The same manual defines the isolinear optical chips as the “primary software and data storage medium.” This phrase implies hard drive space. But then, in the next sentence, the manual says the chips represent many advances over the earlier “crystal memory cards.” This sentence implies RAM. Because Trek people use the isolinear optical chips in tricorders and personal access display devices (PADDs) for “information transport,” it sounds as if the chips are the future version of today's floppy disks, Zip disks, or CDs. A footnote in the Technical Manual states that the isolinear optical chips reflect “the original ‘microtape’ data cartridges used in the original series.” This also implies that the chips are descendants of floppies, Zip disks, or Jaz disks.
