The Design of Everyday Things

Authors: Don Norman

The role of writing in civilization has changed over its five thousand years of existence. Today, writing has become increasingly common, although increasingly as short, informal messages. We now communicate using a wide variety of media: voice, video, handwriting, and typing, sometimes with all ten fingers, sometimes just with the thumbs, and sometimes by gestures. Over time, the ways by which we interact and communicate change with technology. But because the fundamental psychology of human beings will remain unchanged, the design rules in this book will still apply.

Of course, it isn't just communication and writing that have changed. Technological change has affected every sphere of our lives, from the way education is conducted to medicine, food, clothing, and transportation. We can now manufacture things at home using 3-D printers. We can play games with partners around the world. Cars are capable of driving themselves, and their engines have changed from internal combustion to an assortment of pure electric and hybrid designs. Name an industry or an activity and, if it hasn't already been transformed by new technologies, it will be.

Technology is a powerful driver for change. Sometimes for the better, sometimes for the worse. Sometimes to fulfill important needs, and sometimes simply because the technology makes the change possible.

How Long Does It Take to Introduce a New Product?

How long does it take for an idea to become a product? And after that, how long before the product becomes a long-lasting success? Inventors and founders of startup companies like to think the interval from idea to success is a single process, with the total measured in months. In fact, it is multiple processes, where the total time is measured in decades, sometimes centuries.

Technology changes rapidly, but people and culture change slowly. Change is, therefore, simultaneously rapid and slow. It can take months to go from invention to product, but then decades (sometimes many decades) for the product to get accepted. Older products linger on long after they should have become obsolete, long after they should have disappeared. Much of daily life is dictated by conventions that are centuries old, that no longer make any sense, and whose origins have been forgotten by all except the historian.

Even our most modern technologies follow this time cycle: fast to be invented, slow to be accepted, even slower to fade away and die. In the early 2000s, the commercial introduction of gestural control for cell phones, tablets, and computers radically transformed the way we interacted with our devices. Whereas all previous electronic devices had numerous knobs and buttons on the outside, physical keyboards, and ways of calling up numerous menus of commands, scrolling through them, and selecting the desired command, the new devices eliminated almost all physical controls and menus.

Was the development of tablets controlled by gestures revolutionary? To most people, yes, but not to technologists.

Touch-sensitive displays that could detect the positions of simultaneous finger presses (even if by multiple people) had been in the research laboratories for almost thirty years (these are called multitouch displays). The first devices were developed by the University of Toronto in the early 1980s. Mitsubishi developed a product that it sold to design schools and research laboratories, in which many of today's gestures and techniques were being explored. Why did it take so long for these multitouch devices to become successful products? Because it took decades to transform the research technology into components that were inexpensive and reliable enough for everyday products. Numerous small companies tried to manufacture screens, but the first devices that could handle multiple touches were either very expensive or unreliable.

There is another problem: the general conservatism of large companies. Most radical ideas fail: large companies are not tolerant of failure. Small companies can jump in with new, exciting ideas because if they fail, well, the cost is relatively low. In the world of high technology, many people get new ideas, gather together a few friends and early risk-seeking employees, and start a new company to exploit their visions. Most of these companies fail. Only a few will be successful, either by growing into a larger company or by being purchased by a large company.

You may be surprised by the large percentage of failures, but that is only because they are not publicized: we only hear about the tiny few that become successful. Most startup companies fail, but failure in the high-tech world of California is not considered bad. In fact, it is considered a badge of honor, for it means that the company saw a future potential, took the risk, and tried. Even though the company failed, the employees learned lessons that make their next attempt more likely to succeed. Failure can occur for many reasons: perhaps the marketplace is not ready; perhaps the technology is not ready for commercialization; perhaps the company runs out of money before it can gain traction.

When one early startup company, Fingerworks, was struggling to develop an affordable, reliable touch surface that distinguished among multiple fingers, it almost quit because it was about to run out of money. Apple, however, anxious to get into this market, bought Fingerworks. When it became part of Apple, its financial needs were met and Fingerworks technology became the driving force behind Apple's new products. Today, devices controlled by gestures are everywhere, so this type of interaction seems natural and obvious, but at the time, it was neither natural nor obvious. It took almost three decades from the invention of multitouch before companies were able to manufacture the technology with the robustness, versatility, and very low cost necessary for the idea to be deployed in the home consumer market. Ideas take a long time to traverse the distance from conception to successful product.

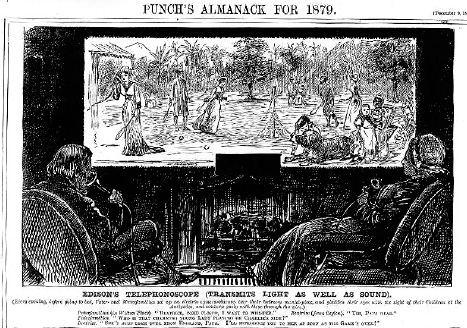

VIDEOPHONE: CONCEIVED IN 1879, STILL NOT HERE

The Wikipedia article on videophones, from which Figure 7.3 was taken, said: “George du Maurier's cartoon of 'an electric camera-obscura' is often cited as an early prediction of television and also anticipated the videophone, in wide screen formats and flat screens.” Although the title of the drawing gives credit to Thomas Edison, he had nothing to do with this. This is sometimes called Stigler's law: the names of famous people often get attached to ideas even though they had nothing to do with them.

The world of product design offers many examples of Stigler's law. Products are thought to be the invention of the company that most successfully capitalized upon the idea, not the company that originated it. In the world of products, original ideas are the easy part. Actually producing the idea as a successful product is what is hard. Consider the idea of a video conversation. Thinking of the idea was so easy that, as we see in Figure 7.3, Punch magazine illustrator du Maurier could draw a picture of what it might look like only two years after the telephone was invented. The fact that he could do this probably meant that the idea was already circulating. By the late 1890s, Alexander Graham Bell had thought through a number of the design issues. But the wonderful scenario illustrated by du Maurier has still not become reality, one and one-half centuries later. Today, the videophone is barely getting established as a means of everyday communication.

FIGURE 7.3 Predicting the Future: The Videophone in 1879.

The caption reads: “Edison's Telephonoscope (transmits light as well as sound). (Every evening, before going to bed, Pater- and Materfamilias set up an electric camera-obscura over their bedroom mantel-piece, and gladden their eyes with the sight of their children at the Antipodes, and converse gaily with them through the wire.)” (Published in the December 9, 1878, issue of Punch magazine. From “Telephonoscope,” Wikipedia.)

It is extremely difficult to develop all the details required to ensure that a new idea works, to say nothing of finding components that can be manufactured in sufficient quantity, reliability, and affordability. With a brand-new concept, it can take decades before the public will endorse it. Inventors often believe their new ideas will revolutionize the world in months, but reality is harsher. Most new inventions fail, and even the few that succeed take decades to do so. Yes, even the ones we consider “fast.” Most of the time, the technology is unnoticed by the public as it circulates around the research laboratories of the world or is tried by a few unsuccessful startup companies or adventurous early adopters.

Ideas that are too early often fail, even if eventually others introduce them successfully. I've seen this happen several times. When I first joined Apple, I watched as it released one of the very first commercial digital cameras: the Apple QuickTake. It failed. Probably you are unaware that Apple ever made cameras. It failed because the technology was limited, the price high, and the world simply wasn't ready to dismiss film and chemical processing of photographs. I was an adviser to a startup company that produced the world's first digital picture frame. It failed. Once again, the technology didn't quite support it and the product was relatively expensive. Obviously today, digital cameras and digital photo frames are extremely successful products, but neither Apple nor the startup I worked with is part of the story.

Even as digital cameras started to gain a foothold in photography, it took several decades before they displaced film for still photographs. It is taking even longer to replace film-based movies with those produced on digital cameras. As I write this, only a small number of films are made digitally, and only a small number of theaters project digitally. How long has the effort been going on? It is difficult to determine when the effort started, but it has been a very long time. It took decades for high-definition television to replace the standard, very poor resolution of the previous generation (NTSC in the United States and PAL and SECAM elsewhere). Why so long to get to a far better picture, along with far better sound? People are very conservative. Broadcasting stations would have to replace all their equipment. Homeowners would need new sets. Overall, the only people who push for changes of this sort are the technology enthusiasts and the equipment manufacturers. A bitter fight between the television broadcasters and the computer industry, each of which wanted different standards, also delayed adoption (described in Chapter 6).

In the case of the videophone shown in Figure 7.3, the illustration is wonderful but the details are strangely lacking. Where would the video camera have to be located to display that wonderful panorama of the children playing? Notice that “Pater- and Materfamilias” are sitting in the dark (because the video image is projected by a “camera obscura,” which has a very weak output). Where is the video camera that films the parents, and if they sit in the dark, how can they be visible? It is also interesting that although the video quality looks even better than we could achieve today, sound is still being picked up by trumpet-shaped telephones whose users need to hold the speaking tube to their face and talk (probably loudly). Thinking of the concept of a video connection was relatively easy. Thinking through the details has been very difficult. As for being able to build it and put it into practice, well, it is now considerably over a century since that picture was drawn and we are just barely able to fulfill that dream. Barely.

It took forty years for the first working videophones to be created (in the 1920s), then another ten years before the first product (in the mid-1930s, in Germany), which failed. The United States didn't try commercial videophone service until the 1960s, thirty years after Germany; that service also failed. All sorts of ideas have been tried including dedicated videophone instruments, devices using the home television set, video conferencing with home personal computers, special video-conferencing rooms in universities and companies, and small video telephones, some of which might be worn on the wrist. It took until the start of the twenty-first century for usage to pick up.

Videoconferencing finally started to become common in the early 2010s. Extremely expensive videoconferencing suites have been set up in businesses and universities. The best commercial systems make it seem as if you are in the same room with the distant participants, using high-quality transmission of images and multiple, large monitors to display life-size images of people sitting across the table (one company, Cisco, even sells the table). This is 140 years from the first published conception, 90 years since the first practical demonstration, and 80 years since the first commercial release. Moreover, the cost, both for the equipment at each location and for the data-transmission charges, is much higher than the average person or business can afford: right now these systems are mostly used in corporate offices. Many people today do engage in videoconferencing from their smart display devices, but the experience is not nearly as good as that provided by the best commercial facilities. Nobody would confuse these experiences with being in the same room as the participants, something that the highest-quality commercial facilities aspire to (with remarkable success).