The Information

That evening Shannon took the floor. Never mind meaning, he said. He announced that, even though his topic was the redundancy of written English, he was not going to be interested in *meaning* at all.

He was talking about information as something transmitted from one point to another: “It might, for example, be a random sequence of digits, or it might be information for a guided missile or a television signal.”


What mattered was that he was going to represent the information source as a statistical process, generating messages with varying probabilities. He showed them the sample text strings he had used in *The Mathematical Theory of Communication*—which few of them had read—and described his “prediction experiment,” in which the subject guesses text letter by letter. He told them that English has a specific *entropy*, a quantity correlated with redundancy, and that he could use these experiments to compute the number. His listeners were fascinated—Wiener, in particular, thinking of his own “prediction theory.”

“My method has some parallelisms to this,” Wiener interrupted. “Excuse me for interrupting.”

There was a difference in emphasis between Shannon and Wiener. For Wiener, entropy was a measure of disorder; for Shannon, of uncertainty. Fundamentally, as they were realizing, these were the same. The more inherent order exists in a sample of English text—order in the form of statistical patterns, known consciously or unconsciously to speakers of the language—the more predictability there is, and in Shannon’s terms, the less information is conveyed by each subsequent letter. When the subject guesses the next letter with confidence, it is redundant, and the arrival of the letter contributes no new information. Information is surprise.
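Shannon's idea that information is surprise can be put into numbers. The Python sketch below is an illustration rather than anything Shannon showed the group: it assumes the standard definition that an outcome of probability p carries log2(1/p) bits, and that entropy is the average of this quantity over all outcomes. A letter guessed with certainty carries nothing; the more predictable the text, the lower its entropy.

```python
import math

def surprisal_bits(p: float) -> float:
    """Information carried by an outcome of probability p, in bits: log2(1/p)."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1 / p)

def entropy_bits(probs: list[float]) -> float:
    """Average surprisal over a distribution; zero-probability outcomes add nothing."""
    return sum(p * surprisal_bits(p) for p in probs if p > 0)

# A certain letter (p = 1) carries 0 bits; one of 26 equally likely letters
# carries about 4.7 bits. Predictable (redundant) text lowers the average.
for p in (1.0, 0.5, 1 / 26):
    print(f"p = {p:.4f}: {surprisal_bits(p):.2f} bits")
print(f"uniform 26-letter alphabet: {entropy_bits([1 / 26] * 26):.2f} bits per letter")
```

The figures are for a toy alphabet in which every letter is equally likely. Real English has a lower entropy because its letters are far from equally likely, which is the quantity Shannon's prediction experiment was designed to estimate.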

The others brimmed with questions about different languages, different prose styles, ideographic writing, and phonemes. One psychologist asked whether newspaper writing would look different, statistically, from the work of James Joyce. Leonard Savage, a statistician who worked with von Neumann, asked how Shannon chose a book for his test: at random?

“I just walked over to the shelf and chose one.”

“I wouldn’t call that random, would you?” said Savage. “There is a danger that the book might be about engineering.”


Shannon did not tell them that in point of fact it had been a detective novel.

Someone else wanted to know if Shannon could say whether baby talk would be more or less predictable than the speech of an adult.

“I think more predictable,” he replied, “if you are familiar with the baby.”

English is actually many different languages—as many, perhaps, as there are English speakers—each with different statistics. It also spawns artificial dialects: the language of symbolic logic, with its restricted and precise alphabet, and the language one questioner called “Airplanese,” employed by control towers and pilots. And language is in constant flux.

Heinz von Foerster, a young physicist from Vienna and an early acolyte of Wittgenstein, wondered how the degree of redundancy in a language might change as the language evolved, and especially in the transition from oral to written culture.

Von Foerster, like Margaret Mead and others, felt uncomfortable with the notion of information without meaning. “I wanted to call the whole of what they called information theory *signal* theory,” he said later, “because information was not yet there. There were ‘*beep beeps*’ but that was all, no information. The moment one transforms that set of signals into other signals our brain can make an understanding of, *then* information is born—it’s not in the beeps.”


But he found himself thinking of the essence of language, its history in the mind and in the culture, in a new way. At first, he pointed out, no one is conscious of letters, or phonemes, as basic units of a language.

I’m thinking of the old Maya texts, the hieroglyphics of the Egyptians or the Sumerian tables of the first period. During the development of writing it takes some considerable time—or an accident—to recognize that a language can be split into smaller units than words, e.g., syllables or letters. I have the feeling that there is a feedback between writing and speaking.


The discussion changed his mind about the centrality of information. He added an epigrammatic note to his transcript of the eighth conference: “Information can be considered as order wrenched from disorder.”


Hard as Shannon tried to keep his listeners focused on his pure, meaning-free definition of information, this was a group that would not steer clear of semantic entanglements. They quickly grasped Shannon’s essential ideas, and they speculated far afield. “If we could agree to define as information anything which changes probabilities or reduces uncertainties,” remarked Alex Bavelas, a social psychologist, “changes in emotional security could be seen quite easily in this light.” What about gestures or facial expressions, pats on the back or winks across the table? As the psychologists absorbed this artificial way of thinking about signals and the brain, their whole discipline stood on the brink of a radical transformation.

Ralph Gerard, the neuroscientist, was reminded of a story. A stranger is at a party of people who know one another well. One says, “72,” and everyone laughs. Another says, “29,” and the party roars. The stranger asks what is going on.

His neighbor said, “We have many jokes and we have told them so often that now we just use a number.” The guest thought he’d try it, and after a few words said, “63.” The response was feeble. “What’s the matter, isn’t this a joke?”

“Oh, yes, that is one of our very best jokes, but you did not tell it well.”


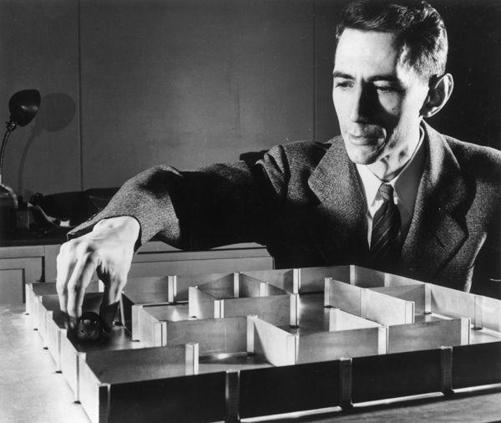

The next year Shannon returned with a robot. It was not a very clever robot, nor lifelike in appearance, but it impressed the cybernetics group. It solved mazes. They called it Shannon’s rat.

He wheeled out a cabinet with a five-by-five grid on its top panel. Partitions could be placed around and between any of the twenty-five squares to make mazes in different configurations. A pin could be placed in any square to serve as the goal, and moving around the maze was a sensing rod driven by a pair of little motors, one for east-west and one for north-south. Under the hood lay an array of electrical relays, about seventy-five of them, interconnected, switching on and off to form the robot’s “memory.” Shannon flipped the switch to power it up.

“When the machine was turned off,” he said, “the relays essentially forgot everything they knew, so that they are now starting afresh, with no knowledge of the maze.” His listeners were rapt. “You see the finger now exploring the maze, hunting for the goal. When it reaches the center of a square, the machine makes a new decision as to the next direction to try.”


When the rod hit a partition, the motors reversed and the relays recorded the event. The machine made each “decision” based on its previous “knowledge”—it was impossible to avoid these psychological words—according to a strategy Shannon had designed. It wandered about the space by trial and error, turning down blind alleys and bumping into walls. Finally, as they all watched, the rat found the goal, a bell rang, a lightbulb flashed on, and the motors stopped.

Then Shannon put the rat back at the starting point for a new run. This time it went directly to the goal without making any wrong turns or hitting any partitions. It had “learned.” Placed in other, unexplored parts of the maze, it would revert to trial and error until, eventually, “it builds up a complete pattern of information and is able to reach the goal directly from any point.”


To carry out the exploring and goal-seeking strategy, the machine had to store one piece of information for each square it visited: namely, the direction by which it last left the square. There were only four possibilities—north, west, south, east—so, as Shannon carefully explained, two relays were assigned as memory for each square. Two relays meant two bits of information, enough for a choice among four alternatives, because there were four possible states: off-off, off-on, on-off, and on-on.
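The arithmetic here is simple enough to check directly. In the Python sketch below, each square's memory is a pair of booleans standing in for its two relays. The particular pairing of directions with relay states is an assumption (it just follows the order in which the text lists them); Shannon's actual wiring is not described here.

```python
from enum import Enum

# Hypothetical assignment: directions and relay states are paired in the order
# the text lists them; the real circuit's wiring is not given.
class Direction(Enum):
    NORTH = (False, False)  # off-off
    WEST = (False, True)    # off-on
    SOUTH = (True, False)   # on-off
    EAST = (True, True)     # on-on

RELAYS_PER_SQUARE = 2

def to_relays(direction: Direction) -> tuple[bool, bool]:
    """Set a square's two relays to record the direction it was last left by."""
    return direction.value

def from_relays(relays: tuple[bool, bool]) -> Direction:
    """Read a square's two relays back as a direction."""
    return Direction(relays)

# Two relays give 2**2 = 4 distinguishable states, exactly one per direction.
print(2 ** RELAYS_PER_SQUARE, "states for", len(Direction), "directions")
for d in Direction:
    print(d.name, to_relays(d), from_relays(to_relays(d)) is d)
```

Every direction survives the round trip through its two relays, and no two directions share a state, which is all that "two bits for four alternatives" requires.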

Next Shannon rearranged the partitions so that the old solution would no longer work. The machine would then “fumble around” till it found a new solution. Sometimes, however, a particularly awkward combination of previous memory and a new maze would put the machine in an endless loop. He showed them: “When it arrives at A, it remembers that the old solution said to go to B, and so it goes around the circle, A, B, C, D, A, B, C, D. It has established a vicious circle, or a singing condition.”


“A neurosis!” said Ralph Gerard.

Shannon added “an antineurotic circuit”: a counter, set to break out of the loop when the machine repeated the same sequence six times. Leonard Savage saw that this was a bit of a cheat. “It doesn’t have any way to recognize that it is ‘psycho’—it just recognizes that it has been going too long?” he asked. Shannon agreed.

“It is all too human,” remarked Lawrence K. Frank.

“George Orwell should have seen this,” said Henry Brosin, a psychiatrist.

A peculiarity of the way Shannon had organized the machine’s memory—associating a single direction with each square—was that the path could not be reversed. Having reached the goal, the machine did not “know” how to return to its origin. The knowledge, such as it was, emerged from what Shannon called the vector field, the totality of the twenty-five directional vectors. “You can’t say where the sensing finger came from by studying the memory,” he explained.
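The one-way character of this memory can be illustrated with a small model. The Python sketch below is hypothetical, not a description of Shannon's circuitry: the memory is a dictionary from grid squares to the direction by which each was last left, and the example field is invented. Following the arrows forward leads to the goal, but asking which square led into a given square can return more than one answer, so the route back cannot be recovered from the memory alone.

```python
# Squares are (column, row) pairs on the 5x5 grid; row numbers grow southward.
STEPS = {"N": (0, -1), "S": (0, 1), "E": (1, 0), "W": (-1, 0)}

def neighbor(square, direction):
    """The square reached by leaving `square` in `direction`."""
    dx, dy = STEPS[direction]
    return (square[0] + dx, square[1] + dy)

def follow(memory, start, goal, max_steps=25):
    """Follow stored directions from start; return the path, or None on failure.

    max_steps bounds the walk in case the stored directions form a cycle.
    """
    path = [start]
    square = start
    for _ in range(max_steps):
        if square == goal or square not in memory:
            break
        square = neighbor(square, memory[square])
        path.append(square)
    return path if square == goal else None

def predecessors(memory, square):
    """Every remembered square whose stored direction points into `square`."""
    return [s for s, d in memory.items() if neighbor(s, d) == square]

# Hypothetical field: two routes converge on the goal at (2, 2).
memory = {(0, 2): "E", (1, 2): "E", (2, 0): "S", (2, 1): "S"}
print(follow(memory, (0, 2), (2, 2)))  # [(0, 2), (1, 2), (2, 2)]
print(predecessors(memory, (2, 2)))    # [(1, 2), (2, 1)]
```

Both (1, 2) and (2, 1) point into the goal, so a machine standing at (2, 2) cannot tell from this memory which way it arrived.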

“Like a man who knows the town,” said McCulloch, “so he can go from any place to any other place, but doesn’t always remember how he went.”


Shannon’s rat was kin to Babbage’s silver dancer and the metal swans and fishes of Merlin’s Mechanical Museum: automata performing a simulation of life. They never failed to amaze and entertain. The dawn of the information age brought a whole new generation of synthetic mice, beetles, and turtles, made with vacuum tubes and then transistors. They were crude, almost trivial, by the standards of just a few years later. In the case of the rat, the creature’s total memory amounted to seventy-five bits. Yet Shannon could fairly claim that it solved a problem by trial and error; retained the solution and repeated it without the errors; integrated new information from further experience; and “forgot” the solution when circumstances changed. The machine was not only imitating lifelike behavior; it was performing functions previously reserved for brains.

One critic, Dennis Gabor, a Hungarian electrical engineer who later won the Nobel Prize for inventing holography, complained, “In reality it is the maze which remembers, not the mouse.”


This was true up to a point. After all, there was no mouse. The electrical relays could have been placed anywhere, and they held the memory. They became, in effect, a mental model of a maze—a *theory* of a maze.

The postwar United States was hardly the only place where biologists and neuroscientists were suddenly making common cause with mathematicians and electrical engineers—though Americans sometimes talked as though it was. Wiener, who recounted his travels to other countries at some length in his introduction to *Cybernetics*, wrote dismissively that in England he had found researchers to be “well-informed” but that not much progress had been made “in unifying the subject and in pulling the various threads of research together.”


New cadres of British scientists began coalescing in response to information theory and cybernetics in 1949—mostly young, with fresh experience in code breaking, radar, and gun control. One of their ideas was to form a dining club in the English fashion—“limited membership and a post-prandial situation,” proposed John Bates, a pioneer in electroencephalography. This required considerable discussion of names, membership rules, venues, and emblems. Bates wanted electrically inclined biologists and biologically oriented engineers and suggested “about fifteen people who had Wiener’s ideas before Wiener’s book appeared.”


They met for the first time in the basement of the National Hospital for Nervous Diseases, in Bloomsbury, and decided to call themselves the Ratio Club—a name meaning whatever anyone wanted. (Their chroniclers Philip Husbands and Owen Holland, who interviewed many of the surviving members, report that half pronounced it RAY-she-oh and half RAT-ee-oh.) For their first meeting they invited Warren McCulloch.

They talked not just about understanding brains but “designing” them. A psychiatrist, W. Ross Ashby, announced that he was working on the idea that “a brain consisting of randomly connected impressional synapses would assume the required degree of orderliness as a result of experience”—in other words, that the mind is a self-organizing dynamical system. Others wanted to talk about pattern recognition, about noise in the nervous system, about robot chess and the possibility of mechanical self-awareness. McCulloch put it this way: “Think of the brain as a telegraphic relay, which, tripped by a signal, emits another signal.” Relays had come a long way since Morse’s time. “Of the molecular events of brains these signals are the atoms. Each goes or does not go.” The fundamental unit is a choice, and it is binary. “It is the least event that can be true or false.”
