Authors: Steven Pinker

The Language Instinct: How the Mind Creates Language

As Alice said, “Somehow it seems to fill my head with ideas—only I don’t exactly know what they are!” But though common sense and common knowledge are of no help in understanding these passages, English speakers recognize that they are grammatical, and their mental rules allow them to extract precise, though abstract, frameworks of meaning. Alice deduced, “*Somebody* killed *something*: that’s clear, at any rate—.” And after reading Chomsky’s entry in *Bartlett’s*, anyone can answer questions like “What slept? How? Did one thing sleep, or several? What kind of ideas were they?”

How might the combinatorial grammar underlying human language work? The most straightforward way to combine words in order is explained in Michael Frayn’s novel *The Tin Men*. The protagonist, Goldwasser, is an engineer working at an institute for automation. He must devise a computer system that generates the standard kinds of stories found in the daily papers, like “Paralyzed Girl Determined to Dance Again.” Here he is hand-testing a program that composes stories about royal occasions:

He opened the filing cabinet and picked out the first card in the set. *Traditionally*, it read. Now there was a random choice between cards reading *coronations, engagements, funerals, weddings, comings of age, births, deaths*, or *the churching of women*. The day before he had picked *funerals*, and been directed on to a card reading with simple perfection *are occasions for mourning*. Today he closed his eyes, drew *weddings*, and was signposted on to *are occasions for rejoicing*.

*The wedding of X and Y* followed in logical sequence, and brought him a choice between *is no exception* and *is a case in point*. Either way there followed *indeed*. Indeed, whichever occasion one had started off with, whether coronations, deaths, or births, Goldwasser saw with intense mathematical pleasure, one now reached this same elegant bottleneck. He paused on *indeed*, then drew in quick succession *it is a particularly happy occasion, rarely*, and *can there have been a more popular young couple*.

From the next selection, Goldwasser drew *X has won himself/herself a special place in the nation’s affections*, which forced him to go on to *and the British people have clearly taken Y to their hearts already*.

Goldwasser was surprised, and a little disturbed, to realise that the word “fitting” had still not come up. But he drew it with the next card—*it is especially fitting that*.

This gave him *the bride/bridegroom should be*, and an open choice between *of such a noble and illustrious line, a commoner in these democratic times, from a nation with which this country has long enjoyed a particularly close and cordial relationship*, and *from a nation with which this country’s relations have not in the past been always happy*.

Feeling that he had done particularly well with “fitting” last time, Goldwasser now deliberately selected it again. *It is also fitting that*, read the card, to be quickly followed by *we should remember*, and *X and Y are not mere symbols—they are a lively young man and a very lovely young woman*.

Goldwasser shut his eyes to draw the next card. It turned out to read *in these days when*. He pondered whether to select *it is fashionable to scoff at the traditional morality of marriage and family life* or *it is no longer fashionable to scoff at the traditional morality of marriage and family life*. The latter had more of the form’s authentic baroque splendor, he decided.

Let’s call this a word-chain device (the technical name is a “finite-state” or “Markov” model). A word-chain device is a bunch of lists of words (or prefabricated phrases) and a set of directions for going from list to list. A processor builds a sentence by selecting a word from one list, then a word from another list, and so on. (To recognize a sentence spoken by another person, one just checks the words against each list in order.) Word-chain systems are commonly used in satires like Frayn’s, usually as do-it-yourself recipes for composing examples of a kind of verbiage. For example, here is a Social Science Jargon Generator, which the reader may operate by picking a word at random from the first column, then a word from the second, then one from the third, and stringing them together to form an impressive-sounding term like *inductive aggregating interdependence*.

| Column 1 | Column 2 | Column 3 |
| --- | --- | --- |
| dialectical | participatory | interdependence |
| defunctionalized | degenerative | diffusion |
| positivistic | aggregating | periodicity |
| predicative | appropriative | synthesis |
| multilateral | simulated | sufficiency |
| quantitative | homogeneous | equivalence |
| divergent | transfigurative | expectancy |
| synchronous | diversifying | plasticity |
| differentiated | cooperative | epigenesis |
| inductive | progressive | constructivism |
| integrated | complementary | deformation |
| distributive | eliminative | solidification |
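The recipe for operating the generator can be sketched in a few lines of Python. This is only an illustration: the three word lists are copied from the columns in the text, while the names `FIRST`, `SECOND`, `THIRD`, and `jargon_term` are made up for this example.

```python
import random

# The three columns of the Social Science Jargon Generator, as listed in the text.
FIRST = ["dialectical", "defunctionalized", "positivistic", "predicative",
         "multilateral", "quantitative", "divergent", "synchronous",
         "differentiated", "inductive", "integrated", "distributive"]
SECOND = ["participatory", "degenerative", "aggregating", "appropriative",
          "simulated", "homogeneous", "transfigurative", "diversifying",
          "cooperative", "progressive", "complementary", "eliminative"]
THIRD = ["interdependence", "diffusion", "periodicity", "synthesis",
         "sufficiency", "equivalence", "expectancy", "plasticity",
         "epigenesis", "constructivism", "deformation", "solidification"]


def jargon_term(rng=random):
    """Pick one word from each column, in order, and string them together."""
    return " ".join(rng.choice(column) for column in (FIRST, SECOND, THIRD))


if __name__ == "__main__":
    print(jargon_term())
```

With twelve words per column, this small device can produce 12 × 12 × 12 = 1,728 distinct terms.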

Recently I saw a word-chain device that generates breathless book jacket blurbs, and another for Bob Dylan song lyrics.

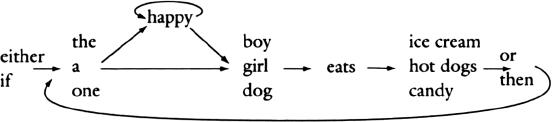

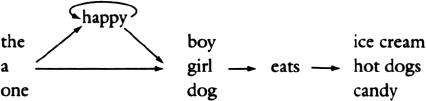

A word-chain device is the simplest example of a discrete combinatorial system, since it is capable of creating an unlimited number of distinct combinations from a finite set of elements. Parodies notwithstanding, a word-chain device can generate infinite sets of grammatical English sentences. For example, the extremely simple scheme assembles many sentences, such as *A girl eats ice cream* and *The happy dog eats candy*. It can assemble an infinite number because of the loop at the top that can take the device from the *happy* list back to itself any number of times: *The happy dog eats ice cream, The happy happy dog eats ice cream*, and so on.
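A scheme of this kind might be sketched as follows. The word lists are an assumption, reconstructed only from the example sentences quoted here, and the state names (`start`, `modifier`, `verb`, `object`, `end`) and function names are hypothetical. Each state holds a list of choices, and each choice says which list to visit next; the `happy` entry points back to its own list, which is the loop.

```python
import random

# Assumed reconstruction of the scheme: state -> list of (word, next state).
CHAIN = {
    "start": [("a", "modifier"), ("the", "modifier")],
    "modifier": [("happy", "modifier"),          # the loop back to the same list
                 ("girl", "verb"), ("dog", "verb")],
    "verb": [("eats", "object")],
    "object": [("ice cream", "end"), ("candy", "end")],
}


def generate(rng=random):
    """Build a sentence by picking one entry per list and following its pointer."""
    state, words = "start", []
    while state != "end":
        word, state = rng.choice(CHAIN[state])
        words.append(word)
    return " ".join(words).capitalize()


def accepts(sentence):
    """Recognize a sentence by checking its words against each list in order."""
    tokens = sentence.lower().rstrip(".").split()

    def walk(state, i):
        if state == "end":
            return i == len(tokens)
        for phrase, next_state in CHAIN[state]:
            parts = phrase.split()
            if tokens[i:i + len(parts)] == parts and walk(next_state, i + len(parts)):
                return True
        return False

    return walk("start", 0)


if __name__ == "__main__":
    print(generate())
    print(accepts("The happy happy dog eats ice cream"))
```

Because the loop can be taken any number of times, the set of sentences the device accepts is unbounded even though the table itself is tiny.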

When an engineer has to build a system to combine words in particular orders, a word-chain device is the first thing that comes to mind. The recorded voice that gives you a phone number when you dial directory assistance is a good example. A human speaker is recorded uttering the ten digits, each in seven different sing-song patterns (one for the first position in a phone number, one for the second position, and so on). With just these seventy recordings, ten million phone numbers can be assembled; with another thirty recordings for three-digit area codes, ten billion numbers are possible (in practice, many are never used because of restrictions like the absence of 0 and 1 from the beginning of a phone number).

In fact there have been serious efforts to model the English language as a very large word chain. To make it as realistic as possible, the transitions from one word list to another can reflect the actual probabilities that those kinds of words follow one another in English (for example, the word *that* is much more likely to be followed by *is* than by *indicates*). Huge databases of these “transition probabilities” have been compiled by having a computer analyze bodies of English text or by asking volunteers to name the words that first come to mind after a given word or series of words. Some psychologists have suggested that human language is based on a huge word chain stored in the brain. The idea is congenial to stimulus-response theories: a stimulus elicits a spoken word as a response, then the speaker perceives his or her own response, which serves as the next stimulus, eliciting one out of several words as the next response, and so on.
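One way a computer might compile such transition probabilities from a body of text is to count, for every word, which words follow it. The sketch below is a minimal illustration under that assumption; the function name `transition_probabilities` is hypothetical, and `toy_corpus` is an invented sentence, not real data.

```python
from collections import Counter, defaultdict


def transition_probabilities(text):
    """Estimate P(next word | current word) from adjacent word pairs."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return {
        word: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for word, followers in counts.items()
    }


# Invented toy text, chosen so that "that" is followed by "is" more often
# than by "indicates".
toy_corpus = "that is all that is known and that indicates that is enough"
table = transition_probabilities(toy_corpus)

if __name__ == "__main__":
    print(table["that"])
```

In this toy text *that* is followed by *is* three times out of four and by *indicates* once, so the table stores 0.75 and 0.25 for those two transitions.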

But the fact that word-chain devices seem ready-made for parodies like Frayn’s raises suspicions. The point of the various parodies is that the genre being satirized is so mindless and cliché-ridden that a simple mechanical method can churn out an unlimited number of examples that can almost pass for the real thing. The humor works because of the discrepancy between the two: we all assume that people, even sociologists and reporters, are not really word-chain devices; they only seem that way.

The modern study of grammar began when Chomsky showed that word-chain devices are not just a bit suspicious; they are deeply, fundamentally, the wrong way to think about how human language works. They are discrete combinatorial systems, but they are the wrong kind. There are three problems, and each one illuminates some aspect of how language really does work.

First, a sentence of English is a completely different thing from a string of words chained together according to the transition probabilities of English. Remember Chomsky’s sentence *Colorless green ideas sleep furiously*. He contrived it not only to show that nonsense can be grammatical but also to show that improbable word sequences can be grammatical. In English texts the probability that the word *colorless* is followed by the word *green* is surely zero. So is the probability that *green* is followed by *ideas*, *ideas* by *sleep*, and *sleep* by *furiously*. Nonetheless, the string is a well-formed sentence of English. Conversely, when one actually assembles word chains using probability tables, the resulting word strings are very far from being well-formed sentences. For example, say you take estimates of the set of words most likely to come after every four-word sequence, and use those estimates to grow a string word by word, always looking at the four most recent words to determine the next one. The string will be eerily Englishy, but not English, like *House to ask for is to earn out living by working towards a goal for his team in old New-York was a wonderful place wasn’t it even pleasant to talk about and laugh hard when he tells lies he should not tell me the reason why you are is evident*.
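The procedure of growing a string word by word from the most recent words can be sketched as below. This is an illustration, not the method used to produce the example above: the function names `build_model` and `grow` are hypothetical, and the sketch samples each next word in proportion to how often it followed the context rather than consulting a published probability table. Setting `order=4` corresponds to the four-word contexts described in the text.

```python
import random
from collections import defaultdict


def build_model(words, order):
    """Map each run of `order` consecutive words to the words seen after it."""
    model = defaultdict(list)
    for i in range(len(words) - order):
        context = tuple(words[i:i + order])
        model[context].append(words[i + order])
    return model


def grow(model, seed, length, rng=random):
    """Extend `seed`, always consulting only the most recent len(seed) words."""
    out = list(seed)
    order = len(seed)
    while len(out) < length:
        followers = model.get(tuple(out[-order:]))
        if not followers:          # context never seen in the text: stop
            break
        # Repeated followers appear several times in the list, so a uniform
        # choice samples them in proportion to their observed frequency.
        out.append(rng.choice(followers))
    return out


if __name__ == "__main__":
    # Invented toy text; a real experiment would need a large body of English.
    text = "the dog eats candy and the dog eats ice cream and the girl eats candy"
    model = build_model(text.split(), order=2)
    print(" ".join(grow(model, ("the", "dog"), length=12)))
```

Every short window of the output is locally plausible because it was copied from the source text, yet nothing in the procedure checks that the string as a whole forms a sentence.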

The discrepancy between English sentences and Englishy word chains has two lessons. When people learn a language, they are learning how to put words in order, but not by recording which word follows which other word. They do it by recording which word *category*—noun, verb, and so on—follows which other category. That is, we can recognize *colorless green ideas* because it has the same order of adjectives and nouns that we learned from more familiar sequences like *strapless black dresses*. The second lesson is that the nouns and verbs and adjectives are not just hitched end to end in one long chain; there is some overarching blueprint or plan for the sentence that puts each word in a specific slot.
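The first lesson can be made concrete with a toy comparison of word-to-word and category-to-category transitions. The tiny lexicon `CATEGORY` and the helper names below are assumptions made up for this sketch; it covers only the first lesson and says nothing about the overarching plan of a sentence.

```python
# Hypothetical mini-lexicon: each word is tagged with its category.
CATEGORY = {
    "strapless": "adjective", "black": "adjective",
    "colorless": "adjective", "green": "adjective",
    "dresses": "noun", "ideas": "noun",
}


def category_sequence(phrase):
    """Replace each word of the phrase with its category."""
    return [CATEGORY[word] for word in phrase.lower().split()]


def pairs(sequence):
    """Return the set of adjacent pairs in a sequence."""
    return set(zip(sequence, sequence[1:]))


familiar = ["strapless black dresses"]
seen_word_pairs = set().union(*(pairs(p.split()) for p in familiar))
seen_category_pairs = set().union(*(pairs(category_sequence(p)) for p in familiar))

novel = "colorless green ideas"
# No word-to-word transition in the novel phrase has been seen before...
words_familiar = pairs(novel.split()) <= seen_word_pairs
# ...but every category-to-category transition has.
categories_familiar = pairs(category_sequence(novel)) <= seen_category_pairs

if __name__ == "__main__":
    print(words_familiar, categories_familiar)
```

A learner who stored only word pairs would reject the novel phrase, while one who stored category pairs would accept it, since both phrases reduce to adjective, adjective, noun.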

If a word-chain device is designed with sufficient cleverness, it can deal with these problems. But Chomsky had a definitive refutation of the very idea that a human language is a word chain. He proved that certain sets of English sentences could not, even in principle, be produced by a word-chain device, no matter how big or how faithful to probability tables the device is. Consider sentences like the following:

Either the girl eats ice cream, or the girl eats candy.

If the girl eats ice cream, then the boy eats hot dogs.

At first glance it seems easy to accommodate these sentences: