The Information

These were of two kinds: ordinary switches and the special switches called relays—the telegraph’s progeny. The relay was an electrical switch controlled by electricity (a looping idea). For the telegraph, the point was to reach across long distances by making a chain. For Shannon, the point was not distance but control. A hundred relays, intricately interconnected, switching on and off in particular sequence, coordinated the Differential Analyzer. The best experts on complex relay circuits were telephone engineers; relays controlled the routing of calls through telephone exchanges, as well as machinery on factory assembly lines. Relay circuitry was designed for each particular case. No one had thought to study the idea systematically, but Shannon was looking for a topic for his master’s thesis, and he saw a possibility. In his last year of college he had taken a course in symbolic logic, and, when he tried to make an orderly list of the possible arrangements of switching circuits, he had a sudden feeling of déjà vu. In a deeply abstract way, these problems lined up. The peculiar artificial notation of symbolic logic, Boole’s “algebra,” could be used to describe circuits.

This was an odd connection to make. The worlds of electricity and logic seemed incongruous. Yet, as Shannon realized, what a relay passes onward from one circuit to the next is not really electricity but rather a fact: the fact of whether the circuit is open or closed. If a circuit is open, then a relay may cause the next circuit to open. But the reverse arrangement is also possible, the negative arrangement: when a circuit is open, a relay may cause the next circuit to close. It was clumsy to describe the possibilities with words; simpler to reduce them to symbols, and natural, for a mathematician, to manipulate the symbols in equations. (Charles Babbage had taken steps down the same path with his mechanical notation, though Shannon knew nothing of this.)

“A calculus is developed for manipulating these equations by simple mathematical processes”—with this clarion call, Shannon began his thesis in 1937. So far the equations just represented combinations of circuits. Then, “the calculus is shown to be exactly analogous to the calculus of propositions used in the symbolic study of logic.” Like Boole, Shannon showed that he needed only two numbers for his equations: zero and one. Zero represented a closed circuit; one represented an open circuit. On or off. Yes or no. True or false. Shannon pursued the consequences. He began with simple cases: two-switch circuits, in series or in parallel. Circuits in series, he noted, corresponded to the logical connective *and*; whereas circuits in parallel had the effect of *or*. An operation of logic that could be matched electrically was negation, converting a value into its opposite. As in logic, he saw that circuitry could make “if … then” choices. Before he was done, he had analyzed “star” and “mesh” networks of increasing complexity, by setting down postulates and theorems to handle systems of simultaneous equations. He followed this tower of abstraction with practical examples—inventions, on paper, some practical and some just quirky.
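The correspondence between switches and logic can be checked mechanically. The minimal Python sketch below models a switch as a boolean, with `True` meaning closed (current flows). Under that reading, a series pair behaves as *and* and a parallel pair as *or*. It then applies the labeling described above, 0 for a closed circuit and 1 for an open one, and checks that under this labeling the same series circuit is computed with *or* and the parallel circuit with *and*, so the two descriptions are duals of each other. The function names are illustrative assumptions, not Shannon's notation.

```python
from itertools import product

# A switch is modeled as a bool: True = closed (conducting), False = open.

def series(a: bool, b: bool) -> bool:
    """Two switches in a row conduct only if both are closed: logical 'and'."""
    return a and b

def parallel(a: bool, b: bool) -> bool:
    """Two switches side by side conduct if either is closed: logical 'or'."""
    return a or b

def negate(a: bool) -> bool:
    """A relay wired the 'negative' way: the next circuit closes when this one is open."""
    return not a

def label(closed: bool) -> int:
    """The labeling from the text: 0 for a closed circuit, 1 for an open one."""
    return 0 if closed else 1

def series_label(x: int, y: int) -> int:
    # A series pair is open (1) if either switch is open, so labels combine with 'or'.
    return x | y

def parallel_label(x: int, y: int) -> int:
    # A parallel pair is open (1) only if both switches are open, so labels combine with 'and'.
    return x & y

print("a      b      series parallel")
for a, b in product([False, True], repeat=2):
    # Both descriptions must agree on every combination of switch positions.
    assert label(series(a, b)) == series_label(label(a), label(b))
    assert label(parallel(a, b)) == parallel_label(label(a), label(b))
    print(a, b, series(a, b), parallel(a, b))
```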

He diagrammed the design of an electric combination lock, to be made from five push-button switches. He laid out a circuit that would “automatically add two numbers, using only relays and switches”; for convenience, he suggested arithmetic using base two. “It is possible to perform complex mathematical operations by means of relay circuits,” he wrote. “In fact, any operation that can be completely described in a finite number of steps using the words *if*, *or*, *and*, etc. can be done automatically with relays.” As a topic for a student in electrical engineering this was unheard of: a typical thesis concerned refinements to electric motors or transmission lines. There was no practical call for a machine that could solve puzzles of logic, but it pointed to the future. Logic circuits. Binary arithmetic. Here in a master’s thesis by a research assistant was the essence of the computer revolution yet to come.
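To make “add two numbers, using only relays and switches” concrete, here is a minimal sketch of base-two addition built from nothing but the three switching operations: series (*and*), parallel (*or*), and negation. It is a standard ripple-carry adder, not a reconstruction of Shannon's actual circuit. The function names and the bit-list representation (least significant bit first) are assumptions made for illustration.

```python
def AND(a: bool, b: bool) -> bool:  # switches in series
    return a and b

def OR(a: bool, b: bool) -> bool:   # switches in parallel
    return a or b

def NOT(a: bool) -> bool:           # relay wired to invert
    return not a

def xor(a: bool, b: bool) -> bool:
    # "a or b, but not both", composed only from the three primitives above.
    return AND(OR(a, b), NOT(AND(a, b)))

def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add three bits; return (sum_bit, carry_out)."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out

def to_bits(n: int, width: int) -> list[bool]:
    """Little-endian bit list: index 0 holds the least significant bit."""
    return [bool((n >> i) & 1) for i in range(width)]

def from_bits(bits: list[bool]) -> int:
    return sum(1 << i for i, bit in enumerate(bits) if bit)

def add(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers below 2**width, one bit position at a time."""
    carry = False
    out = []
    for a, b in zip(to_bits(x, width), to_bits(y, width)):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    out.append(carry)  # the final carry becomes the top bit of the result
    return from_bits(out)

print(add(5, 3))      # 8
print(add(200, 100))  # 300
```

Each bit position reuses the same small circuit, and the carry is passed along like a signal relayed from one stage to the next.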

Shannon spent a summer working at the Bell Telephone Laboratories in New York City and then, at Vannevar Bush’s suggestion, switched from electrical engineering to mathematics at MIT. Bush also suggested that he look into the possibility of applying an algebra of symbols—his “queer algebra”—to the nascent science of genetics, whose basic elements, genes and chromosomes, were just dimly understood. So Shannon began work on an ambitious doctoral dissertation to be called “An Algebra for Theoretical Genetics.”

Genes, as he noted, were a theoretical construct. They were thought to be carried in the rodlike bodies known as chromosomes, which could be seen under a microscope, but no one knew exactly how genes were structured or even if they were real. “Still,” as Shannon noted, “it is possible for our purposes to act as though they were.… We shall speak therefore as though the genes actually exist and as though our simple representation of hereditary phenomena were really true, since so far as we are concerned, this might just as well be so.” He devised an arrangement of letters and numbers to represent “genetic formulas” for an individual; for example, two chromosome pairs and four gene positions could be represented thus:

$$
\begin{array}{l}
A_1B_2C_3D_5 \quad E_4F_1G_6H_1 \\
A_3B_1C_4D_3 \quad E_4F_2G_6H_2
\end{array}
$$

Then, the processes of genetic combination and cross-breeding could be predicted by a calculus of additions and multiplications. It was a sort of road map, far abstracted from the messy biological reality. He explained: “To non-mathematicians we point out that it is a commonplace of modern algebra for symbols to represent concepts other than numbers.” The result was complex, original, and quite detached from anything people in the field were doing.


He never bothered to publish it.

Meanwhile, late in the winter of 1939, he wrote Bush a long letter about an idea closer to his heart:

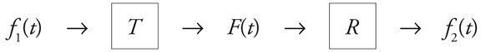

Off and on I have been working on an analysis of some of the fundamental properties of general systems for the transmission of intellegence, including telephony, radio, television, telegraphy, etc. Practically all systems of communication may be thrown into the following general form:


*T* and *R* were a transmitter and a receiver. They mediated three “functions of time,” *f*(*t*): the “intelligence to be transmitted,” the signal, and the final output, which, of course, was meant to be as nearly identical to the input as possible. (“In an ideal system it would be an exact replica.”) The problem, as Shannon saw it, was that real systems always suffer *distortion*—a term for which he proposed to give a rigorous definition in mathematical form. There was also *noise* (“e.g., static”). Shannon told Bush he was trying to prove some theorems. Also, and not incidentally, he was working on a machine for performing symbolic mathematical operations, to do the work of the Differential Analyzer and more, entirely by means of electric circuits. He had far to go. “Although I have made some progress in various outskirts of the problem I am still pretty much in the woods, as far as actual results are concerned,” he said.

I have a set of circuits drawn up which actually will perform symbolic differentiation and integration on most functions, but the method is not quite general or natural enough to be perfectly satisfactory. Some of the general philosophy underlying the machine seems to evade me completely.
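The “general form” in the letter, in which the input *f*(*t*) passes through a transmitter *T*, picks up noise in transit, and emerges from a receiver *R*, can be sketched as a chain of functions. This toy Python model is an illustration only. It assumes that *T* and *R* are a simple amplification and its inverse, that noise is random disturbance added to each sample, and that root-mean-square difference stands in for how far the output departs from an “exact replica.” None of these choices comes from Shannon's letter, which proposed its own rigorous definition of distortion. All function names are hypothetical.

```python
import math
import random

def transmitter(samples, gain=2.0):
    """T (assumed): turn the intelligence f(t) into a signal by amplifying it."""
    return [gain * s for s in samples]

def channel(signal, noise_level, rng):
    """Add random disturbances ('e.g., static') to every sample in transit."""
    return [s + rng.gauss(0.0, noise_level) for s in signal]

def receiver(signal, gain=2.0):
    """R (assumed): undo the transmitter's amplification to estimate f(t)."""
    return [s / gain for s in signal]

def rms_error(original, received):
    """Stand-in distortion measure (an assumption): root-mean-square difference."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(original, received)) / len(original))

# A hypothetical input: one cycle of a sine wave sampled at 100 points.
f = [math.sin(2 * math.pi * k / 100) for k in range(100)]
rng = random.Random(0)  # fixed seed so runs are repeatable

for noise in (0.0, 0.1, 0.5):
    output = receiver(channel(transmitter(f), noise, rng))
    print(f"noise={noise}: rms error {rms_error(f, output):.3f}")
```

With the noise set to zero, the output matches the input exactly, which is the ideal system of the letter. Any noise makes the replica imperfect.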

He was painfully thin, almost gaunt. His ears stuck out a little from his close-trimmed wavy hair. In the fall of 1939, at a party in the Garden Street apartment he shared with two roommates, he was standing shyly in his own doorway, a jazz record playing on the phonograph, when a young woman started throwing popcorn at him. She was Norma Levor, an adventurous nineteen-year-old Radcliffe student from New York. She had left school to live in Paris that summer but returned when Nazi Germany invaded Poland; even at home, the looming war had begun to unsettle people’s lives. Claude struck her as dark in temperament and sparkling in intellect. They began to see each other every day; he wrote sonnets for her, uncapitalized in the style of E. E. Cummings. She loved the way he loved words, the way he said *Boooooooolean* algebra. By January they were married (Boston judge, no ceremony), and she followed him to Princeton, where he had received a postdoctoral fellowship.

The invention of writing had catalyzed logic, by making it possible to reason about reasoning—to hold a train of thought up before the eyes for examination—and now, all these centuries later, logic was reanimated with the invention of machinery that could work upon symbols. In logic and mathematics, the highest forms of reasoning, everything seemed to be coming together.

By melding logic and mathematics in a system of axioms, signs, formulas, and proofs, philosophers seemed within reach of a kind of perfection—a rigorous, formal certainty. This was the goal of Bertrand Russell and Alfred North Whitehead, the giants of English rationalism, who published their great work in three volumes from 1910 to 1913. Their title, *Principia Mathematica*, grandly echoed Isaac Newton; their ambition was nothing less than the perfection of all mathematics. This was finally possible, they claimed, through the instrument of symbolic logic, with its obsidian signs and implacable rules. Their mission was to prove every mathematical fact. The process of proof, when carried out properly, should be mechanical. In contrast to words, *symbolism* (they declared) enables “perfectly precise expression.” This elusive quarry had been pursued by Boole, and before him, Babbage, and long before either of them, Leibniz, all believing that the perfection of reasoning could come with the perfect encoding of thought. Leibniz could only imagine it: “a certain script of language,” he wrote in 1678, “that perfectly represents the relationships between our thoughts.”

With such encoding, logical falsehoods would be instantly exposed.

The characters would be quite different from what has been imagined up to now.… The characters of this script should serve invention and judgment as in algebra and arithmetic.… It will be impossible to write, using these characters, chimerical notions [*chimères*].

Russell and Whitehead explained that symbolism suits the “highly abstract processes and ideas” used in logic, with its trains of reasoning. Ordinary language works better for the muck and mire of the ordinary world. A statement like *a whale is big* uses simple words to express “a complicated fact,” they observed, whereas *one is a number* “leads, in language, to an intolerable prolixity.” Understanding whales, and bigness, requires knowledge and experience of real things, but to manage *1*, and *number*, and all their associated arithmetical operations, when properly expressed in desiccated symbols, should be automatic.

They had noticed some bumps along the way, though—some of the *chimères* that should have been impossible. “A very large part of the labour,” they said in their preface, “has been expended on the contradictions and paradoxes which have infected logic.” “Infected” was a strong word but barely adequate to express the agony of the paradoxes. They were a cancer.

Some had been known since ancient times:

Epimenides the Cretan said that all Cretans were liars, and all other statements made by Cretans were certainly lies. Was this a lie?


A cleaner formulation of Epimenides’ paradox—cleaner because one need not worry about Cretans and their attributes—is the liar’s paradox: *This statement is false*. The statement cannot be true, because then it is false. It cannot be false, because then it becomes true. It is neither true nor false, or it is both at once. But the discovery of this twisting, backfiring, mind-bending circularity does not bring life or language crashing to a halt—one grasps the idea and moves on—because life and language lack the perfection, the absolutes, that give them force. In real life, all Cretans cannot be liars. Even liars often tell the truth. The pain begins only with the attempt to build an airtight vessel. Russell and Whitehead aimed for perfection—for proof—otherwise the enterprise had little point. The more rigorously they built, the more paradoxes they found. “It was in the air,” Douglas Hofstadter has written, “that truly peculiar things could happen when modern cousins of various ancient paradoxes cropped up inside the rigorously logical world of numbers,… a pristine paradise in which no one had dreamt paradox might arise.”
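The liar's circularity can itself be checked mechanically. In this toy Python sketch, each self-referring sentence is a function from a supposed truth value to the truth value of what the sentence then asserts. A consistent reading is a value that agrees with itself, and the code simply tries both. This is an illustration, not Russell and Whitehead's formalism. The function names, and the treatment of Epimenides as "my statement is a lie, and so is every other Cretan statement," are assumptions.

```python
def consistent_values(asserts):
    """Return every truth value v such that supposing the sentence is v agrees with what it says."""
    return [v for v in (True, False) if v == asserts(v)]

def liar(s: bool) -> bool:
    # "This statement is false": what it asserts is the negation of its own truth value.
    return not s

def truth_teller(s: bool) -> bool:
    # "This statement is true": a harmless cousin, shown for contrast.
    return s

def epimenides(others_all_false: bool):
    # "All Cretans are liars," spoken by a Cretan: true only if this statement
    # is itself a lie and every other Cretan statement is a lie too.
    return lambda s: (not s) and others_all_false

print(consistent_values(liar))                # []
print(consistent_values(truth_teller))        # [True, False]
print(consistent_values(epimenides(True)))    # []
print(consistent_values(epimenides(False)))   # [False]
```

The liar has no consistent value at all. Epimenides is paradoxical only under the stipulation that every other Cretan statement is a lie. If even one Cretan tells the truth, his claim is simply false.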
