The Numbers Behind NUMB3RS (17 page)

Read The Numbers Behind NUMB3RS Online

Authors: Keith Devlin

WHAT KEEPS YOUR PASSWORD SAFE?

Even with message encryption, activities such as online banking still have vulnerabilities. One obvious potential weak point is your password. By transmitting your password in encrypted form, an eavesdropper could not obtain it; but if an enemy were able to hack into the computer on which your bank stores its customers' passwords (which it has to do in order to check your attempted login), he or she would immediately have free access to your account. To prevent this happening, your bank does not store your password; rather it stores what is called a

hashed

version.

Hashing is a particular kind of process that takes an input string (such as your password) and generates a new string of a particular size. (It's not strictly speaking an encryption process since it may be impossible to undo the hash.) When you try to log on to your bank account, the bank's computer compares the hashed version of the password you type in with the entry stored in its hashed-passwords file. To make this system work, the hashing function, H, has to have two fairly obvious properties:

- For any input string x, it should be easy to compute H(x).

- Given any hash value y, it should be computationally infeasible to find an x such that H(x) = y.

(“Computationally infeasible” means it would take the fastest computers more than, say, a human lifetime to carry out the procedure to completion.)

By requirement 2, even if a hacker gained access to the stored login information, he or she would not be able to obtain your password (though without additional controls they would of course be able to access your account on that machine, since it's the hashed version that the receiving server uses for authorization.)

In practice, the people who design hash functions usually demand an additional uniformity feature that facilitates efficient storage of the hashed values of identification information and makes for a faster and easier database-lookup procedure to determine identity:

3. All values produced by H have the same bit-length.

Â

Because of this third condition, in theory there will be many different input strings that produce the same output; in the parlance of the hashing community, there will be “collisions,” distinct input strings x and y such that H(x) = H(y). Because access to secure sites is determined (at the site) by examining the incoming hashed login data, one possible weakness of the system is that illegal access to an account does not require that the intruder obtain the account holder's login identity and password; it is sufficient to find

some

input data that generates the same hashed valueâthat is, to find an input that collides with the legitimate data. In designing an algorithm for a hash function, it is therefore clearly important to make sure that this is extremely unlikely to occur. That gives a fourth requirement:

4. It is a practical impossibility (it is “computationally infeasible”) to find a string y that collides with a given string x, that is, for which H(x) = H(y).

Typically, hash functions work by combining (in some systematic way) the bits of the input string (e.g., your login details) with other bits chosen at random, and performing some complex, iterative distillation process that reduces the resulting string down to one of a fixed length (predetermined for the system).

There are dozens of different hash functions in use. The two most widely used are MD5 (“Message Digest algorithm 5”), developed by Ronald Rivest (he of RSA) at MIT in 1991 as one of a series of hash algorithms he designed, and SHAâ1 (“Secure Hash Algorithm 1”) developed by the National Security Agency in 1995. MD5 produces a hash value of 128 bits, and it would take on average 2

64

guesses to find a collision. SHAâ1 generates a hash string of length 160 bits, and it would require an average of 2

80

guesses to find a collision. In theory, both methods would seem to offer a high degree of securityâprovided that the only feasible way to find a collision is by trial and error.

Unfortunately for the digital security world, trial and error is not the only way to make a dent in a hashing system such as SHAâ1. During the late 1990s and early 2000s, Xiaoyun Wang, a mathematician at Tsinghua University in Beijing, showed that with ingenuity and a lot of hard work, it was possible to find collisions for some widely used hashing functions. At the Crypto '04 conference in Santa Barbara in 2004, Wang astonished the attendants with her announcement that she had found a way to find a collision for MD5 in just 2

37

inputs, a staggering reduction in problem size that made MD5 highly vulnerable.

Wang's approach was to input to the algorithm strings that differ by just a few bits and look closely at what happens to them, step by step, as the algorithm operates on them. This led her to develop a “feel” for the kinds of strings that will result in a collision, allowing her to gradually narrow down the possibilities, resulting eventually in her developing a procedure to generate a collision.

Following the announcement at Crypto '04, Wang, together with her colleagues Hongbo Yu and Yiqun Lisa Yin, started work on the crown jewel of current hash functions, SHAâ1. This proved a much harder nut to crack, but to the general dismay (and admiration) of the computer security community, at the annual RSA security conference in San Francisco in February 2005, they were able to announce that they had developed an algorithm that could generate two SHAâ1 colliding files in just 2

69

steps.

Wang and her colleagues have not yet cracked SHAâ1; they have just produced a method that

could

crack it in far fewer steps than was previously believed possible. That number 2

69

is still sufficiently high to provide some degree of confidence in the system's securityâfor now. So too is the even lower number of 2

63

steps that Wang and other collaborators managed to achieve in the months following the February 2005 announcement. But many in the cryptographic community now believe that the writing is on the wall, and that, as a result of Wang's work, advances in computing speed and power will rapidly render useless all the hashing algorithms currently in use. It won't happen todayâexperts assure us that our ATM transactions are secure for now. But soon. Commenting on the development to

New Scientist

magazine, Burt Kaliski, the head of RSA Laboratories in Bedford, Massachusetts, declared, “This is a crisis for the research community.” Mark Zimmerman, a cryptographer with ICSA Labs in Mechanicsburg, Pennsylvania, put it rather more colorfully: “It's not Armageddon, but it's a good kick in the pants.”

9

How Reliable Is the Evidence?

Doubts about Fingerprints

THE WRONG GUY?

When Don arrives on the scene he finds that the murderer had garroted his victim. It's not a common method, but it reminds Don of a murder committed a year earlier. On that occasion, the FBI's investigation was very successful. After both eyewitness testimony from a police lineup and a fingerprint match identified a man named Carl Howard as the murderer, Howard confessed to the crime, accepted a plea bargain, and went to prison. But the similarities of that earlier murder to the new one are so striking that Don begins to wonder whether they got the wrong guy when they sent Howard to prison. As Charlie helps Don with the investigation of suspects in the new murder, they speculate about the possibility that Howard was an innocent man sent to prison for a crime he did not commit.

This is the story that viewers watched unfold in the first-season episode of

NUMB3RS

called “Identity Crisis,” broadcast on April 1, 2005.

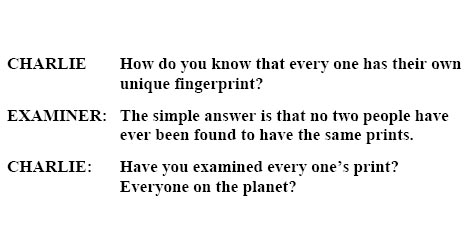

A key piece of evidence that sent Howard to prison was a fingerprint from the murder sceneâmore accurately, part of a thumbprint. The FBI fingerprint examiner was certain of the correctness of her identification of Howard as the source of the crime-scene partial print, which led first Howard's lawyer and then Howard himself to conclude that accepting a plea bargain was the only sensible thing to do. But once Howard's possible innocence is being considered, Charlie, the mathematician trained to think logically and to demand scientific proof for scientific claims, engages the fingerprint examiner in a discussion:

The match the examiner made was based on what is called a “partial,” a latent fingerprint consisting of ridge marks from only part of the tip of a single finger. So Charlie continues his questioning, asking how often just

a part of a single finger's print

from one person looks like that of another person. The examiner says she doesn't know, prompting Charlie to press her further.

As usual, Charlie is right on the ball. These days, fingerprint evidence, once regarded as so infallible that forensic scientists would never consider challenging its certainty, is under increasing attack and critical scrutiny in courts across the United States and many other parts of the world.

THE MYTH OF FINGERPRINTS

The twentieth century's most stunning forensic success is probably the establishment of fingerprint identification as the “gold standard” for scientific evidence in criminal prosecutions. Its acceptance as a virtually unchallengeable “clincher” in the courtroom is shown by the terminology often applied to its only current rival, DNA evidence, which is often referred to as “genetic fingerprinting.”

When it first appeared, fingerprinting was not immediately seized upon as the magical key to resolving questions about the identification of criminals. It took decades in the United States and Europe to dislodge its predecessor, the Bertillon system.

Invented by a Parisian police clerk in the late nineteenth century, the Bertillon system relied primarily on an elaborate set of eleven carefully recorded anatomical measurementsâthe length and width of the head, length of the left middle finger, the distance from the left elbow to the tip of the left middle finger, and so on. That system had proved a great success in foiling the attempts of repeat offenders to avoid harsher sentences by passing themselves off under a succession of aliases.

Like Bertillonage, fingerprinting proved to be a reliable method of “verification.” A police department could compare a high-quality set of ten fingerprints obtained from “Alphonse Parker,” now in custody, with a file of “full sets” of ten fingerprints from previous offenders and perhaps identify Parker as “Frederick McPhee” from his last incarceration. Even more stunning was the possibility of “lifting” fingerprints from surfacesâa table, a window, a glassâat the scene of a crime and using these “latent prints” to individualize the identification of the perpetrator. That is, by searching through a file of cards containing known exemplars, full-set fingerprints of known individuals, investigators could sometimes obtain a match with crime-scene fingerprints and thereby identify the perpetrator. Or they could bring in a suspect, fingerprint him, and compare those prints with the ones lifted from the crime scene. Even though latent fingerprints are often of low qualityâsmudged, partial (involving only a portion of the tip of the finger), incomplete (involving only one or two fingers, say)âan experienced and skilled fingerprint examiner could still possibly observe enough commonality with an exemplar print set to make a positive identification with enough certainty to offer testimony in court.

Because the chances of a crime-scene investigation yielding accurate measurements of the perpetrator's head-width and the like are all but zero, the advantage of fingerprinting over Bertillonage for investigative work soon became clear. Even as it was being replaced by fingerprinting, however, the Bertillon system was recognized as having one clear advantage of its own: the indexing system that was developed to go with it. Bertillon relied on numerical values for standardized measurements; accordingly, searches of a large card file to determine a possible match with the measurements of a person in custody could be performed in a straightforward way. fingerprint matching relied on human judgment in side-by-side comparison of the distinguishing features of two prints or sets of prints, which did not lend itself to the same kind of numerically driven efficiency.

With the advent of computers in the mid-twentieth century, however, it became possible to code sets of fingerprints numerically in such a way that a computer could quickly eliminate the great majority of potential matches and narrow the search to a small subset of a large file, so that human examiners could be used for the final individualizationâa possible matching of a suspect print with a single exemplar. Indeed, after September 11, 2001, the United States government accelerated efforts to develop rapid computer-assisted methods to compare quickly fingerprint scans of individuals attempting to enter the country against computer databases of fingerprint features of known or suspected terrorists. These computer-assisted methods, known to fingerprint experts as “semi-lights-out systems,” rely heavily upon numerically coded summaries of key features of an individual's fingerprints. Exploiting these features makes it possible to offer a human expert, whose final judgment is considered a necessity, at most a handful of exemplars to check for a match.

For prosecution of criminals, the element of human expertise has proved to be critical. fingerprint examiners, working for agencies such as the FBI or police departments, have varying levels of training and competence, but their presentations in court invariably rest on two pillars:

- The claim that fingerprints are literally unique: No two people, not even identical twins, have ever been found to have identical fingerprints.

- The certainty of the examiner: With “100 percent confidence” (or words to that effect), he or she is certain that the match between the crime-scene prints and the examplar prints of the defendant is correct; they are fingerprints of the same person.

HOW DOES AN EXPERT “MATCH” FINGERPRINTS?

There is no completely specified protocol for matching fingerprints, but experts generally mark up the pictures of the prints in a way something like this:

Crime scene print

Single finger from exemplar

Every skilled and experienced examiner uses a variety of comparisons between prints to make a match. To their credit, they subscribe to an admirably sound principle, the one dissimilarity doctrine, which says that if any difference between the prints is found that cannot be accounted for or explainedâsay, by a smudge or speck of dirtâthen a potential match must be rejected.

The most common testimony relies, however, on the determination of certain features called minutiaeâliterally, points on the prints where ridgelines end or split in two. These are sometimes called Galton points, in homage to Sir Francis Galton, the pioneering English statistician, whose 1892 book

finger Prints

established the basic methods for comparing these points on two prints to make an identification. Unfortunately for the practice of fingerprint forensics, no standard has been establishedâat least in American practiceâfor the minimum number of points of commonality needed to determine a reliable match. Many a defense lawyer or judge has been frustrated by the lack of any standardization of the number of points: Is twelve a sufficient number? Is eight enough? In Australia and France, the minimum number is twelve. In Italy it is sixteen. In the United States, rules of thumb (no pun intended) vary from state to state, even from police department to police department. Essentially, the position of fingerprint experts in court seems to have been “I generally require at least X points,” where X is never larger than the number in the present case.

FINGERPRINT EXPERTS VERSUS THE LIKES OF CHARLIE EPPES

In recent years there has been a growing chorus of opposition to the courts' formerly routine acceptance of the automatic certainty of matches claimed by fingerprint expert witnesses. Like Charlie Eppes, a number of mathematicians, statisticians, other scientists, and distinguished lawyersâeven some judgesâhave complained in court and in public about the lack of standards for fingerprint evidence, the performance certification of expert examiners, and, most important of all, the lack of scientifically controlled validation studies of fingerprint matchingâthat is, the lack of any basis for determining the frequency of errors.

Referring to an acronym for the usual methods of fingerprint identification, ACE-V, a federal judge commented:

*

The court further finds that, while the ACE-V methodology appears to be amenable to testing, such testing has not yet been performed.